Coolcoder360's Devlog/updates thread

-

Well, I’ve been trying to use Cereal, but here’s the issue: I follow the tutorials and it doesn’t work for me. I can’t get it to compile because, for whatever reason, it can’t find the serialization functions I’ve written unless I specialize each of them as I mentioned before, where I re-write the same thing for every combination of input and output that I want to do.

To me that means I’d rather just write the serialization stuff myself to do Json, XML, Binary, whatever I want to do, rather than try to get Cereal to work the way it’s supposed to.

Additionally, I found this issue which basically says that Cereal doesn’t work right in a recent version of clang, but they were able to work around it by commenting out some asserts. I use g++ with cmake, but I figured what the heck, I’ll go comment out the asserts that are causing failures too. That lets it compile! But then I just get this for the json output:

```
{
    "value0": "NameComponent",
    "value1": {},
    "value2": "TransformComponent",
    "value3": {},
    "value4": "EntityEnd"
```

And what I didn’t even notice until now: I don’t even have the closing `}` in there. So in my mind, Cereal is out of the competition now. If you can get it to work with your compiler, great, but for me, I don’t think having XML, Json, and Binary support is enough for me to use it if I have to write the same thing 6 times.

So I’m planning to go back to picojson, however this time I’m going to try to not do it the dumb dumb way, and I’ll write serialization methods for types, so that I have less of a struggle writing everything out the long way. This does mean I’m still re-writing the serialization stuff I had before, even though I kept what I had, but my hope is that this means I can more easily use the serialization again elsewhere if needed, so I could serialize things like settings, configuration, input mappings, etc. more easily in the future.

So right now the plan is to basically make a `SerDes` abstract class, which I would then use as a base for having a `JsonSerDes` and other such serialization classes, so I could potentially serialize to multiple formats that way, and then each such class would have a `.save(type)` function implemented for each type that needs to be serialized. This does mean writing all the serialization for each type manually, but hopefully that should be minimal effort, and then hopefully I can find a way to have Json, Binary, etc. saving/loading happen by just changing which class is used, and then calling the same set of methods on it.

This does add some complications in that Json is key/value based, and binary and other formats are not, so the Json might not be as clear to read as it would be if I did it the way I did before, but I think this will be the least effort in terms of being able to write the `SceneLoader` to save/load to each format without any extra effort, and as long as saving it out happens in the same way as loading it in does, maybe that does not matter. I could also likely allow using `std::map<std::string, value>` type deals to allow saving things that already have key/value pairs; perhaps there is a way I can denote the key/string in a binary format as well without much issue. Something yet to be determined I suppose, or maybe I could use BSON or protobuf for binary save/load instead of a custom format.

On top of that, I will probably also need to get bindings together to use it from Lua, either the serialization data directly, or just have a way for Lua to save chunks of memory somehow, so that scripts can save things if they need to. Perhaps I could even find a pre-canned Json library for Lua that I could include, which would allow for generating saves from Lua, and then just use some API to save it to disk.
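To make the "swap the class, keep the calls" idea concrete, here is a minimal sketch, not the engine's actual code. The method set, the `result()` accessor, the `TaggedSerDes` stand-in for a binary format, and the `saveName` helper are all assumptions made up for illustration; the toy Json backend is hand-rolled, does no string escaping, and a real one would sit on top of picojson.

```cpp
#include <string>

// Hypothetical interface: one save() overload per supported type.
class SerDes {
public:
    virtual ~SerDes() = default;
    virtual void save(int value) = 0;
    virtual void save(const std::string& value) = 0;
    virtual std::string result() const = 0;  // everything serialized so far
};

// Toy Json-array backend (no escaping of quotes or control characters).
class JsonSerDes : public SerDes {
public:
    void save(int value) override { add(std::to_string(value)); }
    void save(const std::string& value) override { add("\"" + value + "\""); }
    std::string result() const override { return "[" + body_ + "]"; }

private:
    void add(const std::string& item) {
        if (!body_.empty()) body_ += ",";
        body_ += item;
    }
    std::string body_;
};

// Toy type-tagged, length-prefixed text backend standing in for a binary format.
class TaggedSerDes : public SerDes {
public:
    void save(int value) override { body_ += "i" + std::to_string(value) + ";"; }
    void save(const std::string& value) override {
        body_ += "s" + std::to_string(value.size()) + ":" + value + ";";
    }
    std::string result() const override { return body_; }

private:
    std::string body_;
};

// Caller code is identical whichever backend is passed in.
void saveName(SerDes& out, int id, const std::string& name) {
    out.save(id);
    out.save(name);
}
```

Passing a `JsonSerDes` or a `TaggedSerDes` to `saveName` changes only the output format, which is the property the scene loader would rely on.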

Next steps

I’m going to keep including these next steps sections even if I keep rehashing the same crap every time. It helps me keep my duckies in a row in terms of what is next, and it’s nice to be able to see how close I am to having something usable when I’m slogging through saving/loading nonsense.

Next steps are to finish up the save/load junk, and then I’ll basically be done with the first 0.0.1 milestone. Next up is miniaudio audio playback, likely using the low level API so I can implement my own stuff on top. Not that I need to spend the time implementing my own stuff on top…

Other than that, multithreaded loading of things is high on my wish list. Currently my whole update thread stops to load things, which means a lot of time spent lagging out. The render thread keeps going but no processing happens there, so it basically looks frozen and is frozen. Probably going to need to look into the future/promise types that are C++11/whatever for threading. Then basically just not render/use those assets until they are loaded in. Yes, this means that assets that are not loaded at the time they are needed will be invisible and pop in; I might need to implement some kind of preloading system to allow loading crap on a loading screen or with a loading bar, or background pre-loading stuff for nearby areas to load them before they are visible or needed.
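As a rough illustration of the future/promise idea, the sketch below starts a load with `std::async` and lets the update loop poll for completion without blocking. `MeshData`, `loadMeshFromDisk`, and `PendingMesh` are hypothetical names, and the "disk load" is faked; on some toolchains this needs to be linked with `-pthread`.

```cpp
#include <chrono>
#include <future>
#include <string>
#include <vector>

struct MeshData {
    std::string name;
    std::vector<float> vertices;
};

// Stand-in for a slow disk load (hypothetical; returns one hard-coded triangle).
MeshData loadMeshFromDisk(const std::string& path) {
    return MeshData{path, {0.f, 0.f, 0.f, 1.f, 0.f, 0.f, 0.f, 1.f, 0.f}};
}

class PendingMesh {
public:
    // Kicks off the load on another thread immediately.
    explicit PendingMesh(const std::string& path)
        : future_(std::async(std::launch::async, loadMeshFromDisk, path)) {}

    // Non-blocking: returns nullptr until the load has finished.
    // The update loop would skip rendering the asset while this is null.
    const MeshData* poll() {
        if (!loaded_ &&
            future_.wait_for(std::chrono::seconds(0)) == std::future_status::ready) {
            data_ = future_.get();
            loaded_ = true;
        }
        return loaded_ ? &data_ : nullptr;
    }

    // Blocking variant, e.g. for a loading screen.
    const MeshData& wait() {
        if (!loaded_) {
            data_ = future_.get();
            loaded_ = true;
        }
        return data_;
    }

private:
    std::future<MeshData> future_;
    MeshData data_;
    bool loaded_ = false;
};
```

Calling `poll()` once per tick gives the pop-in behaviour described above, while `wait()` is the hook a preloading or loading-screen system could use.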

Test games/testing the engine

After the audio stuff is done, I think it’ll be a good idea to implement a simple toy game to prove out that the lua bindings work, and everything works. I think my plan for that is likely to be a breakout clone, just because I’ve done those before, the logic is minimal, and the physics can be fairly simple.

I also technically have bullet physics already “integrated” but I haven’t actually tested that, or implemented the lua bindings to create rigidbody components for entities, so that as well as save/load for the rigidbody component will be needed. Then a different test game will be needed; likely I’ll go for some sort of sandbox-y type thing or something where you walk a player character around and can throw things and bump into things. Just to make sure that the physics of things all works as expected.

Once those basic tests are complete, I think it can be time to actually try to go implement a game. The engine would hopefully be in a mostly usable state by that point, maybe with some UI work to be done. I’m still not decided on whether I want to finish implementing my own UI elements as originally planned, or to proceed with using the nuklear library, although I’m not sure nuklear is set up to be used with an entity/component system like I have.

I’ve gotten a few ideas/plans together for what I would want my first game or games to be in engine, but of course I keep switching around what I would want to do, between a basic FPS type campaign game, to a horror game, to something else entirely. I think for starting out I would want minimal effort dialog and/or just no dialog options/responses, and no quest systems; that would let me prove out and thoroughly test the basic features before moving on to implementing further elements that would not be able to be thoroughly tested until later. Likely I would add an achievement system before branching dialog and quest systems, so the achievement system could be tacked on to an existing campaign style FPS game I think.

Or alternatively, I could just jump around between prototypes, slowly building up a library of 3d assets and scripts that could be re-used in other projects. Hard to say.

-

Saving/loading progress

Saving and loading is going great, now that I’m not using Cereal, but I’m also doing it in a sane fashion.

Basically I have an abstract class like this:

```cpp
class SerDes {
public:
    virtual void save(int)=0;
    virtual void save(float)=0;
    virtual void save(double)=0;
    //virtual void save(uint8_t);
    //virtual void save(uint16_t);
    virtual void save(std::string)=0;
```

Which basically has save and load functions for each type I need to save or load. Then I extend it with a class for a specific file/save type to save/load, so right now I’m working on a JsonSerDes class which implements all of those functions.

This does mean that if I want to add saving for a type, I will need to add it in the abstract class, and then add it in every single sub class to avoid compilation errors. However, this seems to be the best way to do it from a usability perspective for now. Better than piling all the picojson crap into my scene loading/saving for every single thing.

Additionally, now when I need to save a complex type that is a struct or class full of other types, I can just add a save/load pair for it, but then call the save/load functions for each of the types that it needs saved, which means it is now relatively non-painful.
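A sketch of how a composite type can be saved purely in terms of the primitive overloads. This is a variation rather than the setup described above: here the composite overloads live once in the base class as non-virtual functions, so a new format only has to implement the primitives. `Vec3`, `Transform`, and `RecordingSerDes` are hypothetical stand-ins (the engine uses glm types).

```cpp
#include <vector>

struct Vec3 { float x, y, z; };
struct Transform { Vec3 position; Vec3 scale; };

class SerDes {
public:
    virtual ~SerDes() = default;

    // Primitive: every format has to implement this one.
    virtual void save(float value) = 0;

    // Composites: written once, built only from the overloads above them.
    void save(const Vec3& v) { save(v.x); save(v.y); save(v.z); }
    void save(const Transform& t) { save(t.position); save(t.scale); }
};

// Test backend that just records the floats in the order they were saved.
class RecordingSerDes : public SerDes {
public:
    using SerDes::save;  // keep the composite overloads visible on this type
    void save(float value) override { values.push_back(value); }
    std::vector<float> values;
};
```

The `using SerDes::save;` line matters: without it, overriding `save(float)` in the subclass hides the base-class `Vec3` and `Transform` overloads when calling through the subclass type.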

So far I have all the things except for MaterialComponent saving working but untested, I had some pre-existing tech debt around saving/loading materials that I need to re-wrap my head around how I used to want it to work, and to see if I want to change it to work differently now.

Once I get all the saving done/polished in this big master `SerDes` class, I should be able to go into the sceneloader and basically make the `SerDes` class, and then just go through the entity and save the components with a `SerDes.save(Comp)` type deal, making sure to save the hierarchies properly of course.

Currently when I use the scene saving command it outputs this:

```
{"0":"NameComponent","1":"MeshEntity","10":1,"11":"EntityEnd","2":"TransformComponent","3":0,"4":0,"5":0,"6":0,"7":0,"8":0,"9":0}
```

So we’re almost there: need to test it out more, add saving for more components, and then test the loading and we’ll see where we get.
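One thing visible in that output is that the keys come out in string order (`"10"` and `"11"` before `"2"`), which is how a `std::map<std::string, ...>` iterates; as far as I know picojson's object type is such a map. It should not matter if loading looks values up by key, but if file order ever needs to match save order, zero-padded keys are one option. The helper names below are hypothetical.

```cpp
#include <cstdio>
#include <map>
#include <string>
#include <vector>

// Hypothetical helper: fixed-width, zero-padded key so that
// string order and numeric order agree.
std::string paddedKey(int index, int width = 4) {
    char buf[16];
    std::snprintf(buf, sizeof(buf), "%0*d", width, index);
    return buf;
}

// Inserts keys 0..count-1 into a string-keyed map and returns them
// in the order the map iterates them.
std::vector<std::string> keysInMapOrder(int count, bool padded) {
    std::map<std::string, int> object;
    for (int i = 0; i < count; ++i) {
        object[padded ? paddedKey(i) : std::to_string(i)] = i;
    }
    std::vector<std::string> keys;
    for (const auto& entry : object) {
        keys.push_back(entry.first);
    }
    return keys;
}
```

With twelve entries, the unpadded keys iterate as `0, 1, 10, 11, 2, ...`, matching the scene output, while the padded keys iterate in save order.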

Art break

I also took a break from coding for a few days this week and did some art things in Blender. Basically I went through Google maps street view looking for things to model, and made a spreadsheet of all the city street/residential street things to model.

I also modeled a few of those items, and then made this render in blender with them, along with some quick/sloppy buildings and pavement, an image pulled from google images in the background, and a car pulled from blendkit:

All of the concrete barriers, traffic light, and construction cones, barrels, and bike racks are modeled for my street props stuff.

I don’t know that me starting with modeling street/city props means I will be making a game set in a city or street any time soon, but browsing maps street view seemed like a good way to see what different areas look like and figure out what props belong there or are needed. So rather than try to browse pinterest or google images for reference, it was easier to just “travel” to the location via street view instead to see what different things look like.

I was also reminded that Google maps actually lets you view inside some buildings with the street view type features, so I could potentially use that as a resource for how to layout some buildings, restaurants, or other attractions. Since it’s not like I would leave the house to go do any of that IRL. Okay I do and have still gone outside and like, gone to restaurants I’m not entirely a hermit, but I’m not about to go take in a 360 camera to take panoramas everywhere I go. Pretty sure that would draw some serious looks.

Summary/Next steps

I think that after the save/load works, I need to spend some time testing/verifying things. I’ve gone and done a bunch of changes, but haven’t fully tested all of it out yet. So I’ll be testing the save/load of course, but also need to test/verify the model saving/loading, since that is something I haven’t yet, I think I tested 3d rendering with just a mesh generated via Lua, I’d like to be able to test out that model loading of different file types works as expected/desired, and if not need to finish that up, then I think I’ll be in business for doing some brief game prototyping.

Additionally, I’ll need to go test out and implement the physics stuff as well, and the save/load for the physics data. Maybe some sort of physics sandbox game is in the future? or perhaps some sort of street racing/street crashing game. that could be fun.

Also the miniaudio sound stuff is planned in the near future, so perhaps some music making stuff should be done too, I haven’t brushed up on playing piano or percussion in like 7-8 years, I’ve been thinking of getting a MIDI keyboard of some kind to practice up again, but I might just satisfy myself with using musescore or LMMS for now, just with the keyboard or with clicking on the piano roll. issue is getting inspiration to make music that way is much harder because you can’t really just jam out on a piano roll.

-

Been trying to maintain momentum on my engine since I find that if I’m enjoying it, it’s easier to get more stuff done, so I’m also going to try to maintain momentum by continuing to write these things on a weekly or more often schedule when I’m excited to write stuff, because it helps me maintain that momentum and excitement for what I’m working on.

Engine progress

Save/load

Engine progress! Basically save/loading is working and tested with basic entities and components, aside from the whole saving/loading meshes and materials. I tested by saving out an entity, removing that entity, loading it back, and then saving it again. If the two save files matched, I considered it a pass. They matched!
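That save, load, save again check can be written as a small automated test. The sketch below uses a toy scene (entity id to name, names without spaces) and a made-up text format purely for illustration; `Scene`, `saveScene`, `loadScene`, and `roundTripMatches` are hypothetical names.

```cpp
#include <map>
#include <sstream>
#include <string>

// Toy scene: entity id -> name. Names are assumed to contain no whitespace.
using Scene = std::map<int, std::string>;

std::string saveScene(const Scene& scene) {
    std::ostringstream out;
    for (const auto& entity : scene) {
        out << entity.first << ' ' << entity.second << '\n';
    }
    return out.str();
}

Scene loadScene(const std::string& text) {
    std::istringstream in(text);
    Scene scene;
    int id = 0;
    std::string name;
    while (in >> id >> name) {
        scene[id] = name;
    }
    return scene;
}

// Save, load back, save again, and compare the two saved outputs.
bool roundTripMatches(const Scene& original) {
    const std::string first = saveScene(original);
    const Scene reloaded = loadScene(first);
    const std::string second = saveScene(reloaded);
    return first == second;
}
```

Matching files show that saving and loading agree with each other; a field that is never saved at all would still pass, so comparing the reloaded scene against the in-memory original is a useful extra check.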

This leads to kind of the next little difficulty though, saving/loading models. So right now my rendering renders Meshes, in `MeshComponent`s that are attached to each entity that has a mesh. This is great and all, and a Mesh will have a material set for it by calling `mesh.setMaterial(material)` on it. The issue comes though, in that when you load a model, the model can have multiple meshes. So then there is the question of how does one handle that?

My previous plan was to load a model, save it in a `ModelComponent` and then use the meshes from that to populate child entities with `MeshComponent`s filled out for each of the meshes in the model. I thought this would be good because then rendering just cares about the mesh components and that’s that. The problem here comes in with a few things:

- I don’t have anything to handle having children transformations be based on the parent transformation, that means if a model needs to be moved, with all of its pieces, then each mesh piece needs to be moved individually.

- If a model doesn’t load a material properly to each mesh every time, there is no good way to save the material modifications needed to work

- If I want to change a mesh material after the model is loaded, I cannot do that and then save it. This is especially problematic because I see myself wanting to be able to change the model on the fly or change the materials used with the model on the fly.

So how I plan to deal with this, I think my best bet is to add `ModelComponent` to the list of things the renderer basically cares about, so I would pull the meshes from there, and then figure out how to save the model with changes to the materials, etc, and/or figure out how to save just the mesh data out, and then not worry about “models” other than for importing things, then each mesh would be saved out with its own material, or maybe it would be saved out sans material and I would just use a `MaterialComponent` for each entity that has a `MeshComponent` so that I can change materials on a per entity basis.

Obviously I’m still working out the hiccups, but that’s my thought process so far.

GUI

The other item is that the commands I would have to type out to load up a material and model are getting quite long, so I figure it’s time I get looking into the GUI stuff, at least to have a GUI for editing.

My latest decision was to use Nuklear for UI things. Nuklear is basically a single header file GUI library, the main hurdles for using it seem to be implementing whatever rendering stuff it needs, and then also figuring out how to deal with an immediate mode UI rendering, but making all the UI stuff be configurable from Lua code.

I think in my mind it makes most sense for the Lua code to configure/lay out the UI stuff in some data format, and then just have C++ go parse the data structure or layout or whatever it is, and then call the nuklear methods based on that.

So right now I’m browsing the 1k lines of code example for Nuklear, and then going to try to figure out how to implement the necessary rendering stuff for it to render, then I’ll probably try to figure out how to store/communicate what the UI is that is needed by Lua into the C++ engine.

Arts

Artwork is still happening, I have a few more models since the last post but I don’t have a big image made including the new ones, so no new render today I think, I’ve been posting renders from Blender in the discord, and will hopefully get the model stuff in and then be able to post in engine screenshots instead of blender renders.

I’ve also been browsing street view in various countries as well to try to get a feel for what different cities in different places feel like and look like, may try my hand at making some city shops or buildings at some point, but I would like to make them as modular as possible so that I can go in later and reuse the pieces for different things.

Next steps

Finish off the model loading/saving/materials nonsense and then UI. then audio. and making sure my issue tracker is up to date.

After that, likely making sure the physics engine data goes into the save/load stuff (it is not saveable or loadable yet) and then testing that out. Already have a design doc somewhat started on a racing game, may share that at some point in the future, but as usual my game ideas snowball like crazy with scope creep so will probably need to pare it down at some point to a demo-able piece compared to the MVP and the full wishlist.

-

Engine progress

Save/Load

Alright, been a bit of a slowdown here on the engine progress in terms of save/load. Basically I was confused on how to do the whole mesh/model saving/loading stuff, which was all fine and dandy, so I decided to just save out the meshes to binary and then load them back. I already had an `importMesh` and an `exportMesh` built out at some point in the now distant past.

So I went, made a quick `MeshLoader` to do the save/load on the meshes, then decided I’d write some lua bindings to let me test it before I try to chunk it into the rest of the scene save/load stuff. And it segfaults massively and with unhelpful stacktraces, and valgrind gives some serious output crap.

So let’s take a look at this code I wrote eons past and then see why I’m going to just dump it and use my `SerDes` type stuff for this instead.

Here are the functions called to do the binary serialization of types that I have defined in a handily named `Util.h`:

```cpp
inline std::vector<uint8_t> glmquat_to_uint8vec(glm::quat quaternion){
    std::vector<uint8_t> array;
    uint8_t* flx=reinterpret_cast<uint8_t*>(&quaternion.x);
    uint8_t* fly=reinterpret_cast<uint8_t*>(&quaternion.y);
    uint8_t* flz=reinterpret_cast<uint8_t*>(&quaternion.z);
    uint8_t* flw=reinterpret_cast<uint8_t*>(&quaternion.w);

    for(int i=0;i<sizeof(float);i++){
        array.push_back(flx[i]);
    }
    for(int i=0;i<sizeof(float);i++){
        array.push_back(fly[i]);
    }
    for(int i=0;i<sizeof(float);i++){
        array.push_back(flz[i]);
    }
    for(int i=0;i<sizeof(float);i++){
        array.push_back(flw[i]);
    }
    return array;
}

inline uint32_t uint8arr_to_uint32(uint8_t** arr){
    //AABBCCDD where AA is uppermost byte, DD is lowermost, in order read
    uint8_t upperupper=(*arr)[0];
    (*arr)++;
    uint8_t lowerupper=(*arr)[0];
    (*arr)++;
    uint8_t upperlower=(*arr)[0];
    (*arr)++;
    uint8_t lowerlower=(*arr)[0];
    (*arr)++;
    uint32_t value=(upperupper<<24)+(lowerupper<<16)+(upperlower<<8)+(lowerlower);
    return value;
}

inline uint16_t uint8arr_to_uint16(uint8_t** arr){
    //AABBCCDD where AA is uppermost byte, DD is lowermost, in order read
    uint8_t upperlower=(*arr)[0];
    (*arr)++;
    uint8_t lowerlower=(*arr)[0];
    (*arr)++;
    uint16_t value=(upperlower<<8)+(lowerlower);
    return value;
}
```

Boy that looks fun, and I still wish it worked. I mean, saving out works; it’s just that loading it in finishes the whole loading part, and then malloc gives an error when I try to free the data. Some googling says it’s related to reading past the allotted memory, and yeah I suspect that might be the case, but I sure as heck can’t find where that issue would be, but I’m not familiar with some of the . Anyway I’ll save trying to get that working in binary for when I implement a binary `SerDes`, if ever; for now I’m going to just swap it all out for my `JsonSerDes` stuff and be good to go. Plus who cares about endianness when it’s a json text file?

Arts

Okay, been a bit slower on Blender the past week as well. Was trying to make some modular pieces for making buildings and such, but realized that I don’t really know how I want to go about doing that, not sure if I should like, make the pieces separate and then try to force them to fit into something I’m making, or if I should try to make something first and then cut it up into pieces somehow?

Never having made a modular building art kit before, I guess I’m a little confused on how to start or go about making one. Also I found myself getting distracted with wanting to make procedural materials for the building pieces instead of making more pieces or just using textures from somewhere.

Definitely seeking input/advice on how you would make a modular kit to use to build various art assets, doesn’t have to be 3d or buildings specifically, but if you prefer to make it all as one art piece and then slice/dice it later, or if you prefer to make pieces and put them together later, and have some sort of reasoning behind it, that sounds great, would love to hear some input on how you do it and maybe there’s a new way or method I could try.

Right now I think I’m sort of stuck because for props it’s easy to pull out one specific small piece and then find reference for that item, while for buildings or other complex structures it’s hard to get inspiration for what the modular pieces would be/look like, and I have some concerns about the pieces possibly not fitting together well if I try to cut it all up from a combined model first.

Next steps

I think next step is to rewrite my import/export Mesh with the `SerDes` and then maybe I’ll get to the Nuklear stuff I’ve been ranting about the past post or two.

Also been looking at houses, those darn things sell so fast here, all the things I looked at like 4 days ago are all already under contract and have all their offers, and they were all released onto the market like, that day or the day before.

-

Engine progress

Save/Load

Mesh Save/load

Well I had quite the runaround with Mesh save/load involving valgrind and all sorts of wild debugging. For one, somehow I wound up with circular header includes or something, so I wound up having to forward declare some types just to get it to compile. Somehow that happened when trying to switch Mesh import/export to the `SerDes` system.

After that I found myself in another predicament where everything compiled but it segfaulted, and gave a really really wild stacktrace in gdb. That was caused by a function not having a return when it should have returned `int`. Returning an int fixed that issue, and all was well. Need to figure out how to make that be a compile error with g++/gcc so that I don’t have that issue. I’ve known for a while that there are some functions that didn’t have a return statement, but none of them thus far have caused an actual issue that I’m aware of, so possibly going and running `cppcheck` or making that a compile error would be helpful to fix all of those possible bugs.

To some extent the lua API is written so that it works fine if you don’t make mistakes when using it; if you do, expect wild segfaulting and nonsense.
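On making the missing return a compile error: GCC's `-Wreturn-type` warning (part of `-Wall`) covers exactly this case, and `-Werror=return-type` promotes just that one warning to an error. With cmake, something like `add_compile_options(-Werror=return-type)` should do it. The function below is a made-up example, not one from the engine.

```cpp
// Build with: g++ -Wall -Werror=return-type example.cpp
#include <string>

// Hypothetical binding-style function. Flowing off the end of a non-void
// function is undefined behaviour, which fits the wild stack traces above.
int componentCount(const std::string& kind) {
    if (kind == "mesh") {
        return 1;
    }
    if (kind == "model") {
        return 3;
    }
    return 0;  // deleting this line is what -Werror=return-type rejects
}
```

Because the flag only touches this one diagnostic, it can be switched on without having to clean up every other warning first.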

In any case, Mesh loading works! I was able to save a cube, and then load the same cube back and apply a material to it. Next up is material save/load.

Material Save/load

Then after mesh save/loading, I need/want to be able to save/load the materials that were assigned to the meshes. So now I’m trying to do the same conversion from my silly `uint8arr_to_glmvec3` type stuff to the new `SerDes` stuff for my import/export material methods I made eons ago. Finding similar compilation errors as before, but now that I figured out the solutions for the Mesh, it should be similar for material to fix them.

Then once Material save/load works, it should be possible for meshes to save their own materials out and then load them back. Next after that is Model save/load.
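For whenever a binary `SerDes` does happen, the old `uint8arr_to_uint32`-style helpers have two properties worth avoiding: nothing checks how many bytes are left, and `upperupper<<24` shifts a `uint8_t` that has been promoted to `int`. The sketch below is one way around both, assuming the same big-endian byte order; `ByteReader` is a hypothetical class, and whether either point caused the original crash cannot be told from the excerpt.

```cpp
#include <cstddef>
#include <cstdint>
#include <stdexcept>
#include <utility>
#include <vector>

// Hypothetical bounds-checked reader for big-endian integers.
class ByteReader {
public:
    explicit ByteReader(std::vector<std::uint8_t> bytes) : bytes_(std::move(bytes)) {}

    std::uint32_t readU32() {
        require(4);
        std::uint32_t value = 0;
        for (int i = 0; i < 4; ++i) {
            // Shift the unsigned accumulator, never a promoted uint8_t.
            value = (value << 8) | bytes_[pos_++];
        }
        return value;
    }

    std::uint16_t readU16() {
        require(2);
        std::uint32_t value = 0;
        for (int i = 0; i < 2; ++i) {
            value = (value << 8) | bytes_[pos_++];
        }
        return static_cast<std::uint16_t>(value);
    }

    std::size_t remaining() const { return bytes_.size() - pos_; }

private:
    // Throws instead of silently reading past the end of the buffer.
    void require(std::size_t count) const {
        if (remaining() < count) {
            throw std::out_of_range("ByteReader: read past end of buffer");
        }
    }

    std::vector<std::uint8_t> bytes_;
    std::size_t pos_ = 0;
};
```

A truncated or mis-sized file then fails with an exception at the read that overruns, rather than later inside malloc or free.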

Model Save/load

Okay model save/load has been having me flip flop on how I want to do it, because it’s hard to figure out how I want to do it if separately at all from the Mesh save/load.

This is partly because of a few reasons:

- Models can consist of multiple meshes, Do I represent that as each individual mesh in each their own entity or do I combine it all into one entity or what?

- What if the material loading doesn’t work for the model, or I want to set individual materials for each mesh in the model, how do I save that, along with letting me load back the model again if the source model file changes?

- what if the material loaded from the model is fine for a few instances, but what if I want to go change the material on one or all of the meshes in a later entity using the same model? I can’t change the model because I would likely want the model to be able to be shared across multiple entities/meshes.

So what is the solution? I think the solution would likely be to just take models, and load them into a bunch of meshes, each with their own assigned material. and then just save/load the meshes as part of the scene, ignoring that they came from a model at all. This means that I would simply not care about the models at all in scene save/load, but instead I would just write some sort of logic in the Lua scripts that implement the editor to track tying the models to the meshes to allow reloading meshes when the source models have changed.

This may sound a bit like just putting off the whole issue until later, but I actually see it as having a few benefits. I mean in the shipping game would I really care/need to ship the model source files themselves? why wouldn’t I just save them out to my own format to load them from, so I can load faster/with fewer steps? In the final game you’re likely never going to need to reload the mesh from a source mesh file.

Additionally, if I do need the ability to do that, I just take whatever logic I implemented in the editor for doing that, and I can copy /paste it into the game logic lua scripts. So really no feature issues here, just make the lua scripts care about it.

Additionally, loading things through assimp requires stepping it through intermediary `aiMesh`, `aiMaterial`, and a ton of other types that I don’t use in my engine to render crap out, so really this will skip that whole middle step. Granted, this does mean that right now all models/meshes are saved/loaded with Json of all things, which is likely a larger file size than even an obj, but it loads directly into the formats I need, with all of the data that I need. That means it’s likely the best for me right now, and will have less overhead than loading things from file and then looping through all the vertices to convert them to my own data structures; instead it will just load from file, no extra steps. Later on I can implement Binary save/load and it’ll likely be smaller file sizes, and maybe faster loading because of that, but it’s still better imo than having the overhead of converting between data structures after loading from file.

UI

Back onto the topic of Nuklear and UI stuffs. Still haven’t gotten to implementation yet, trying to sort out the save/load first since UI isn’t technically in the 0.0.1 release, I just want to get going on it sooner so that I can have a UI for my editor stuff, which will make editing/creating things much faster than the current console commands, which means I could get that much closer to making a game in this engine.

I think I’ve sort of figured out how I want to do UI. Basically the UI needs to be rendered by calling nuklear methods for each item/element of the UI, which means that somehow I need Lua to be able to do that. I think it makes the most sense to create some kind of UI manager which will take some sort of data structure or structures populated by the Lua, and then basically iterate through them to call the Nuklear commands, and then the UI would be drawn via Nuklear’s drawing methods. That means all Lua would have to do is basically set up the structure and say what Lua method to call when there is some input on the UI widgets, and then it goes into an entity or something somewhere which gets pulled into the RenderState and then rendered from there.

I will say this process of implementing Nuklear compared to if I used my own UI system does seem a bit more convoluted, because basically the steps are:

- generate data structure for the UI/menu in lua and stick it into a component or some shit to be saved/loaded as well as pulled in to be rendered

- in the Update Thread pull the UI data into the `RenderState` to be passed to the render thread

- in render thread, convert the data structure into nuklear calls. Along the way add callbacks for UI input to the stack of tasks to happen in the update thread

- then render the nuklear crap also in the rendering thread.
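The middle steps above, turning a Lua-authored description into immediate-mode calls and collecting callbacks for the update thread, could be sketched like this. Everything here is hypothetical: `Widget`, `UiBackend`, `buildUi`, and `FakeBackend` are invented for illustration, and the backend interface stands in for Nuklear (where the real calls would be along the lines of `nk_label` and `nk_button_label`).

```cpp
#include <string>
#include <vector>

enum class WidgetKind { Label, Button };

// One entry of the UI description that Lua would fill in.
struct Widget {
    WidgetKind kind;
    std::string text;
    std::string luaCallback;  // Lua function name to run on click (buttons only)
};

// Stand-in for the immediate-mode library.
struct UiBackend {
    virtual ~UiBackend() = default;
    virtual void label(const std::string& text) = 0;
    virtual bool button(const std::string& text) = 0;  // true if clicked this frame
};

// Walks the description once per frame and returns the callbacks that
// should be queued for the update thread.
std::vector<std::string> buildUi(const std::vector<Widget>& layout, UiBackend& ui) {
    std::vector<std::string> pending;
    for (const Widget& widget : layout) {
        switch (widget.kind) {
            case WidgetKind::Label:
                ui.label(widget.text);
                break;
            case WidgetKind::Button:
                if (ui.button(widget.text)) {
                    pending.push_back(widget.luaCallback);
                }
                break;
        }
    }
    return pending;
}

// Fake backend for testing: pretends the button with the given text was clicked.
struct FakeBackend : UiBackend {
    std::string clickedText;
    int drawn = 0;
    void label(const std::string&) override { ++drawn; }
    bool button(const std::string& text) override {
        ++drawn;
        return text == clickedText;
    }
};
```

Keeping the library behind a small interface like `UiBackend` also leaves the door open to swapping in a home-grown UI system later without touching the Lua side.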

This means that basically the render thread now has to not just render all the rendering crap, but also call the methods to populate the nuklear UI, as well as to render the Nuklear UI. Not sure if that’s more overhead than if I used my own system or not, since I would basically be going from data -> rendered right away. However Nuklear seems still like the best option for now, because of the sheer quantity of already implemented UI widgets and input boxes, along with it all being skinnable already. It just makes sense to use Nuklear for now and eat the overhead costs if any. Maybe if I get to profiling everything later and find out that it’s taking too long on just the nuklear calls and rendering in the render thread, maybe then I’ll change it.

But it also may be worth, instead of just doing the nuklear calls in the render thread, I could likely just save a nuklear context in the RenderState, and call all the Nuklear UI widget calls in the Update thread instead, and just pass the context to the render thread to do the actual rendering, so the UI population would happen on a different thread than the rendering. Hard to say, right now without many models, Rendering goes as fast as it wants, Update thread is tick limited, although I’ve noticed differences between Linux and Windows in what speed it claims to be going, on Windows it was going half the tick speed I told it to, so then I changed it to be 60 ticks a second on windows, and now it’s basically double that on Linux. Not sure that matters so much, I don’t think it should matter anyways.

Also I’ll likely want a way to configure the UI look from Lua. there are ways you can do different skinning, and apparently you can get a modified version of Nuklear to look like this:

If that’s not like, a regular ass game menu then I don’t know what is. So if I can make something that looks like that or works like that with Nuklear, then I think we’re golden. Might need to do some hacky stuff to make it work with controller but obviously it’s been done before.

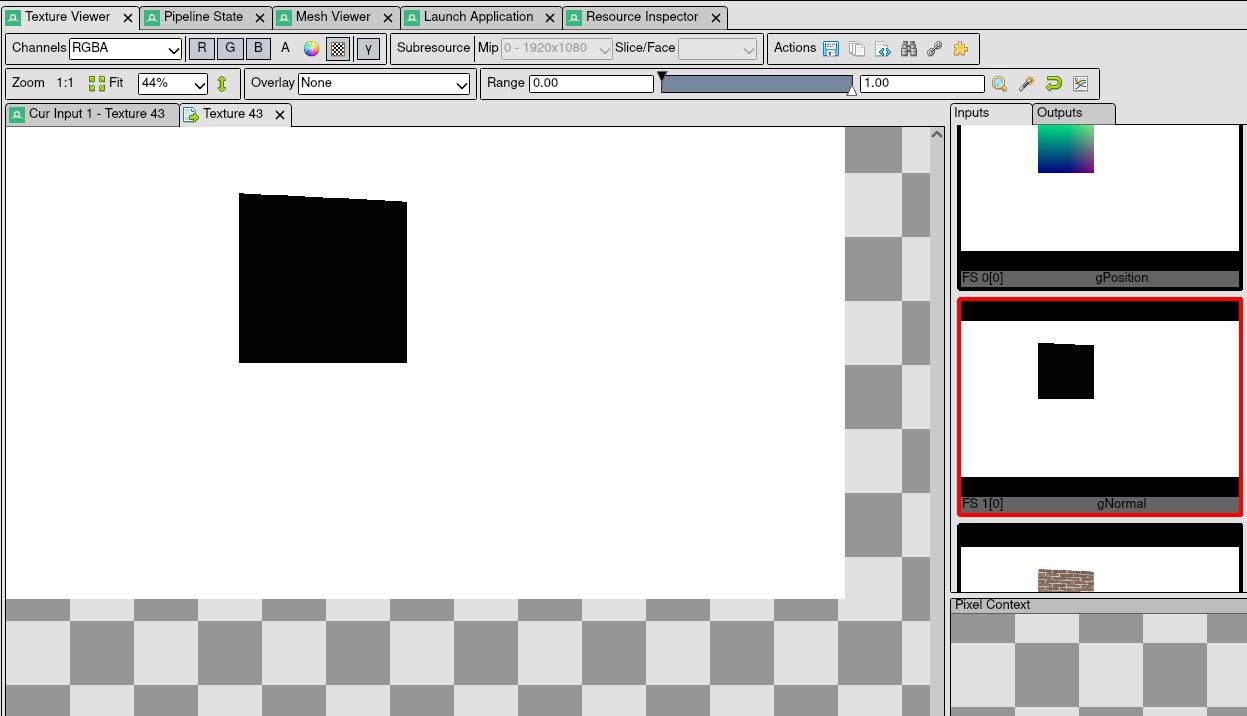

A Rendering Conundrum

So some time after I had the mesh save/load working, I noticed that I was no longer having the lighting work on the mesh I was testing with (the usual brick textured cube you see if you scroll wayyy back.) I’m pretty sure right when I tested the mesh save/load it was working, but now it isn’t.

So I set up renderdoc and took a capture of it to try to see what is wrong, and I found this when I looked at the normal texture used to compute lighting:

For those of you unfamiliar with what normals should look like when rendered to a texture, this is not it. There is somehow no normal data for the mesh.

And of course, a little analysis later, and I found this in my git log:

    - Mesh.setMaterial(mesh,material);
    - Mesh.GenerateNormals(mesh);
    + --Mesh.setMaterial(mesh,material);
    + --Mesh.GenerateNormals(mesh);
    + --Mesh.Save(mesh, "MESH_01.bin");

So I commented out the line that generates the normals of the mesh. Not sure if I saved it or not, so I’m uncommenting it, going to save out the data, and see if the lighting works then. Could be the saved mesh just got saved without normals.

EDIT: I did some more investigation. The save/load was working fine, but I hadn’t yet actually added any normals to a mesh with the add normals command before, I had only had the mesh generate them procedurally. The issue proved to be within my add normals method, and I found the same one in the add colors method on my mesh.

Basically I had used the `.data()` of a `std::vector<glm::vec3>`, but I used it on the argument passed to the method, when I should have used the `.data()` call on the vector that was saved as a member of the mesh class. Problem solved!

Next Steps

Next steps: wrap up testing the material save/load, then I think I’ll call it quits on model save/load for now until I get the editor commands/UI more figured out. maybe I’ll implement Lua bindings to be able to load meshes/materials from models, that will help me verify my whole Assimp loading stuff is working in this version. I tested that in a previous engine iteration years ago, so likely a good idea to test it with some actual models.

Then also, get going on the nuklear stuff, and also check up on my Quire issue tracking, and wrap up the 0.0.1 release, tag it, and bump the version. once material/mesh save/load is all tested, as far as I’m concerned that’s a wrap on 0.0.1!!!

-

A surprise break from engine progress

Wanted to discuss some other things that came up yesterday that caused what could possibly be considered a disruption from engine progress.

I use Archlinux btw.

And I did a few updates yesterday and all this past week, then rebooted my machine yesterday, and the install broke. Wouldn’t even boot, on either my 5.15 kernel or my 4.16 kernel I keep as a backup. Got stuck on a waiting for save/load /dev/rfkill watchdog type message and had no login prompt or anything. I was able to later boot with the 4.xx kernel (and the 5.15 kernel too) with the `nomodeset` kernel option specified in grub. That’s all fine and good, but `nomodeset` disables Kernel Modesetting, which is basically when the GPU driver would set up the screen display size, etc. Well, most drivers, the amdgpu driver I use definitely included, do not support the type of modesetting that would be done if you specify this option. So that means my system boots, but I cannot get the GPU driver to work properly.

What this meant for my 5.15 kernel is different from my 4.xx kernel. 5.15 I compiled myself because that’s the only way to get a kernel to boot on my setup (see below), and it was also configured to use only the amdgpu driver for graphics. On boot, it does not show the boot logs you typically see at start when you have those enabled on Linux. Instead you just see some pixels at the top of the screen flickering and changing, since the display is showing memory used for something else. With `nomodeset` it could “boot” and I would get put onto the login prompt. I know this only because I could see a pixel pattern that it settled to, with some blinking pixels, after about a regular boot time.

I could then even log in, and watch that pixel pattern change!

So instead I booted to the 4.xx kernel, which did show the boot logs, and would spit me out to a login prompt I could see. Logging in worked fine, but then if I wanted to run startx, it initially didn’t work with my config because the `amdgpu` driver was specified in the config. So I removed the config and ran `startxfce4` (I used the XFCE4 environment) and that did actually spit me out onto a GUI desktop once the config was removed. An 800x600 GUI. Because of the nomodeset, that was the best I could do.

So this is a bit of a pain, and I decided it’s unworkable, so I did some testing yesterday to try to figure out what the issue could be, from checking some systemd and other configurations in `/etc` as well as checking the logs of what was updated.

I then decided it’s not worth trying to fix it, because the reason I need to compile custom kernels anyways also limits my ability to do any kind of distro hopping or anything like that.

Why I couldn’t run a non-custom Linux kernel

I got my desktop workstation as a refurb workstation from pcserverandparts. It came with two 2TB HDDs set up in the BIOS in a RAID 0 configuration. Once I got it I did not change that configuration, I put Windows 10 onto a USB stick and installed it, and then promptly installed games.

Then when trying to install dualboot linux onto it, I discovered something awful. I couldn’t for the life of me get Linux to boot from it for me, for the longest time.

The issue turned out to be that you need to have a very specific kernel setup, that I only once was able to completely build from scratch, using Gentoo’s genkernel tools. This consisted of a special `initramfs` as well as a build of the kernel. This special kernel build needed to include `mdadm` not as a module, but compiled right on in. It also needed some configuration in the initramfs that I don’t remember anymore and could never successfully build again on Archlinux. (It started as Gentoo, but I got tired of compiling everything on HDDs so I live swapped it to Arch by pacstrapping myself an Arch install in a folder, and then copying files from that into the root of the OS while I was running that same OS. That feels both exhilarating and scary af at the same time, but super bad-ass when you pull it off and it works.) So I was stuck with using an old `initramfs` from the 4.xx kernel, with the newer 5.15 kernel.

This whole mess meant that basically I relied on the setup I had in that install and could not or did not want to change any of it. Until it conveniently broke and I said forget it all.

my solution

So I went and solved it. I backed up my stuff from both the windows partition and the linux partition, then I went into the BIOS, undid the RAID configuration. Then wiped it, and now I have installed debian onto it (I had it laying on a USB stick already from installing it onto a laptop) and I’m running the KDE plasma desktop environment.

I also set it up so that one of the 2TB drives is my /home, and the other is partitioned into a 30% sized partition for my root partition, and then an EFI partition and a swap space of course. This way I can create new partitions and then do some distro hopping, while still having access to my /home and files.

Conclusion

I’m a bit sad that my arch install of 3 years broke and I wasn’t able to or didn’t try to fix it properly, but I’m quite glad that it helped me get rid of that hindrance of the drives being in RAID, and it’s about time I got back to my distro hopping roots. I say that even though I’m likely to not even switch away. I love Debian as a rock solid distro, and this is my first time running KDE on a machine that can handle it.

Who knows, maybe I’ll get going on some Linux From Scratch stuff and do that instead of Gentoo or Arch or any of that.

Now I just need to re-clone my game engine project and get back to it!

And maybe see if the VR works better or if steam and maybe lutris work better and I can run Epic games?

-

Back onto the Game engine

Brief follow-up to last time on my desktop: It works so smoothly on Debian with KDE plasma. I’ve even been told that my time on Arch was what the stereotypical Linux user has to deal with, where things break or don’t work left, right, and center, but my time on Debian is what Linux is meant to be, stuff kind of just works out of the box (once you install things). With Debian I have steam working, and games that didn’t quite run as well on my Arch setup now run perfectly. I have Epic Games launcher installed via Lutris and working, and most games there (except Cities Skylines, which I think also required extra setup on windows to get some 3rd party launcher) all seem to work out of the box with no issues.

Engine progress

So I got my engine checked out again, installed all the dependencies I forgot about needing to compile it, and now it compiles again, back on track.

Had an issue where my `JsonSerDes` was outputting some garbled stuff at the beginning of the json output, fixed that with a `strdup` and then a `free` after it was used.

Next issue I’m having now I sort of foresaw. Basically Pico Json has limited facilities for how it will store numbers in json. I keep track of assets via what I call a `RUID`, which is basically a `uint32_t`, but picojson just stores things as doubles. I think this might be why I’m having issues, where basically I save out the RUIDs for assets to the save file, but then when loaded back in from the file the RUID is different. So I suspect I need to do a special case with handling ints and unsigned ints to be saved out instead of passing everything through to doubles. Not sure why the library just doesn’t already provide int save/load to json, but it might also be that one would expect a double to always work and have the same value (I kind of did, maybe some sort of precision thing?)

RUIDs and File/asset loading

Wanted to also mention more about the RUIDs. I don’t remember ever really going on about that in depth, but I’ve been gruedorfing for nearly a whole year now (currently writing this part on Feb 1st, will be a year on Feb 9th), so it’s hard to remember.

Basically when I was designing how I wanted asset loading to work on my engine, I read a few blog posts somewhere (That I likely won’t be able to find again) about it. Basically I want to be able to create a zip, archive or something like that and be able to load things from there, but I still need a way to reference the asset files, even if the path they are in in the final build is not the same as during development.

So I decided on the RUID mechanism I use. Basically I take the string path that is where the file is while doing development, and then hash it to a uint32_t, and store that as the RUID. In my `FSManager` I make a map of all known hashes and their paths just for use during development. This means that during development, I can just give it a path, and it’ll compute a hash from there, and if it’s not loading from an archive, it’ll just use the path.

Then later when I go to make a zip or other form of archive, I can likely make some sort of manifest file/entry table or something basically saying what offset in the archive the asset for each RUID is. So this way I can potentially even just have assets be saved on a server somewhere, and then have my `FSManager` handle caching them and loading them from the server, and the rest of the game engine just acts like normal: give it a RUID hash, it works fine and uses the lookup table. Give it the path that was used for the asset during development? Just compute the hash from there and do the lookup with the generated hash.

This method is also nice because then when I’m writing a Lua script, I can reference assets directly with the path instead of needing to use some silly RUID reference or needing to write/have a tool to let me select the asset and use the reference. It’s fine for a script or something to ship in the final game using the asset path instead of RUID.
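A rough sketch of the RUID idea, with some loud assumptions: the post doesn't say which hash is used, so 32-bit FNV-1a stands in here, and `hashPath`, `DevAssetTable`, `findById` and `findByPath` are hypothetical names rather than the real `FSManager` API. The last two helpers touch on the double question from the picojson section: a double has a 53-bit significand, so every `uint32_t` value fits in it exactly, while a float (24 bits) does not hold them all.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <unordered_map>

using RUID = std::uint32_t;

// 32-bit FNV-1a over the development path. Stand-in hash for illustration;
// the engine's real hash function is not stated.
inline RUID hashPath(const std::string& path) {
    std::uint32_t h = 2166136261u;            // FNV offset basis
    for (unsigned char c : path) {
        h ^= c;
        h *= 16777619u;                       // FNV prime
    }
    return h;
}

// Hypothetical development-time table mapping RUID -> original path.
class DevAssetTable {
public:
    // Registers a path and returns its RUID.
    RUID add(const std::string& path) {
        const RUID id = hashPath(path);
        auto result = paths_.emplace(id, path);
        // Two different paths landing on one RUID would be a hash collision.
        assert(result.second || result.first->second == path);
        return id;
    }

    // Lookup by RUID; returns nullptr when the RUID is unknown.
    const std::string* findById(RUID id) const {
        auto it = paths_.find(id);
        return it == paths_.end() ? nullptr : &it->second;
    }

    // Lookup by path: hash it, then use the same table.
    const std::string* findByPath(const std::string& path) const {
        return findById(hashPath(path));
    }

private:
    std::unordered_map<RUID, std::string> paths_;
};

// True when the RUID comes back unchanged after being stored as a double.
inline bool survivesDouble(RUID id) {
    return static_cast<RUID>(static_cast<double>(id)) == id;
}

// True when the RUID is exactly representable as a float.
inline bool survivesFloat(RUID id) {
    return static_cast<double>(static_cast<float>(id)) == static_cast<double>(id);
}
```

Since the double conversion itself is lossless for 32-bit values, a RUID that changes across save/load may be getting mangled somewhere else, for example by a cast through `float` or a signed `int` on the way in or out. That's a guess though, not a diagnosis.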

What’s Next?

I feel like I keep going on about all sorts of things I’d rather work on than debugging the save/load stuff, and it’s true, I’d much rather be shoving a UI system into this thing or implementing audio whatevers, but I also need the save/load to work, otherwise there’s not much point in having an editor UI, because that would just let me implement a bunch of stuff that can’t be saved. Plus I’m sure the UI and audio implementations will all have their own difficulties and their own problems to debug. That seems to be the neverending task of development.

One thing I think I will likely do later, is try to sort the issue tracking stuff I have better, to let me have the milestones be in smaller chunks, that could happen more quickly. Part of my difficulty with that though of course is that I like to sometimes take a break from the pain of the hard stuff, to go work on something fun, so some of the things I’m working on now weren’t originally in my first milestone. So I think part of it will be planning some fun things to do in each milestone, along with some of the less fun things, but of course there’s also the issue of things that were fun later becoming less fun when they have problems.

Also I think I’ll try to plan some time to go run cppcheck or other such tools on my engine, to try to make sure it has a solid base to build the future things onto. Otherwise I may find myself scratching my head on why things don’t work, and hopefully I won’t end up with stability issues for too long. I suspect some of the stability issues I’ve had in the past could be resolved with some serious valgrind work or some cppcheck stuff. It’s difficult to make things like this be completely bulletproof, but I’m sure if I keep running the tools and fixing parts here and there that it won’t be too large of a task to do a little bit each milestone.

-

1 Year anniversary of Gruedorfing!

Huzzah, that’s right. I started this stuff on Feb 9th 2021, and now here we are at Feb 9th 2022 (not at time of writing, this might be posted early, I’m writing this on Feb 3rd because I already have things to mention that seem to go well with the 1 year anniversary thing)

Have I gotten a lot done? Well not as much as I would have planned/hoped when I started, I sort of hoped to have completed a few of my planned milestones last year. But I do have good news, I completed 0.0.1! sort of.

0.0.1 milestone complete!

Again, sort of. I didn’t bother finishing testing the scene save/load. I just fixed the errors I was having with my test case for material save/load, which was to have a lua script manually save/load materials etc. I didn’t let the scene save it all and load it all, and I likely still have to add material saving to my scene save/load.

But now I can go on to my next milestone of doing UI things! But I should also probably try to better define what the milestone will look like, the first milestone of 0.0.1 took me so long to wrap up I think I should definitely downscale my expectations for 0.0.2, just because I’ll likely also try to scope creep the next milestone too.

But before we go on to rant about 0.0.2 planning, let’s cover what we have implemented, and what is tested, so far:

- Lua script running, ticking

- Lua APIs to load/save meshes, materials, load textures, add/remove entities and components to entities, bind/map lua functions to input actions

- working input manager that is mostly tested (but with mouse input being broken due to using the wrong type of mouse input)

- PBR Deferred renderer with a forward pass that handles rendering text and will eventually handle rendering transparent/non-opaque objects (transparency/blending not yet implemented or tested)

  - Only does direct lighting so far, no IBL, no GI

  - 3d point lights do work, no other light types

- In engine versioning that should work with Jenkins

  - (Tested, but I don’t trust Jenkins to not entirely break, need to also back up Jenkins configurations etc.)

- docker container which can build engine, working in Jenkins

- Scene loading/saving

- Generic Json serialization/deserialization implementation, can be drop in replaced with other types of serialization

- Material creation, save/load

- mesh save/load, creation from Lua

- Model loading (from files with Assimp) ported but not tested

- EnTT based Entity-Component system

  - Hierarchy support added

- Multithreaded Render and Update loops

- Generic FSManager abstracting away all filesystem operations from the rest of the engine

- UTF-8 support for rendering text in Unicode for various languages

  - and font loader for loading/rendering fonts

- Text input with my own font loading/input methods.

  - used for a lua only console with commands

Wow that is a lot, definitely makes me feel better about how long it took to do all that. I have hopes that now that most of the framework is put together, like the rendering, EnTT, lua scripting, etc, that future features will hopefully be at least a little bit faster to implement, or at least I’ll have less time spent debugging the basic framework and more on the actual feature implementations themselves.

What’s in store for 0.0.2

So initially I had planned for audio to be in 0.0.2, but I think that’s a lot to take on, since I also want UI, etc etc. Basically this is the classic case of the product managers wanting everything done yesterday for free, but I’m both the product manager and the dev in this case, so the only person who loses here is me.

So I think here’s my initial plan for 0.0.2:

- UI support with Nuklear

  - I think this is important because it means I can make the editor more usable than remembering console commands. Combine that with having the scene save/load working at least a bit better, and it should mean I can make, save, and load back scenes in engine, which would be helpful for testing rendering in the future, both efficiency/benchmarking, as well as other tests.

- Add Lua API to load models from files into meshes and materials.

  - This way the editor can import more complex meshes instead of having to manually define the vertices, faces, texcoords, etc.

- Fix Mouselook issue

- add keyboard movement to move around scenes in editor

Basically 0.0.2 will be the “Editor milestone” to get editing things to be a bit more useful, and easy. Get the UI stuff in, make editor UI, make editing more usable than having to accurately type a bunch of commands.

What else is next for the Engine milestones?

Well, based on how long 0.0.1 took and how much stuff was planned for it in my quire project for it, I think it makes sense to try to keep milestones to be smaller, with maybe 2-3 big tasks, and then 1-2 bug fixes/small tasks. This way milestones continue to be a small bite I can feasibly chew in a few months instead of a whole year.

With this logic in mind, I’m trying to basically have a theme for each of the next several milestones, since there are a lot of major chunks I still want to put in before I drop the 0.1.0 release, which I would call the “Barely works but has all the pieces together to make a game in” release.

So the next few releases/milestones are likely to be themed like this:

- 0.0.2 The Editor Milestone

  - add everything necessary to make editing easier

- 0.0.3 The Physics Milestone

  - add support for creating rigidbodies, kinematic bodies, and physics constraints between the bodies

- 0.0.4 The Audio Milestone

  - add in the miniaudio stuff, need to figure out if I want to do low level API or high level Engine API, I might just do low level so I can handle controlling the audio data/assets my own way instead of using whatever their way is, but that means I would need to implement some things myself that they likely pre-can into it.

- 0.0.5 The Asset Packaging/exporting Milestone

  - This is basically the zipping all the scripts, configs, assets needed for a game into a .pak/archive file to be loaded later.

Each milestone may have some extra nice to have features or bug fixes sprinkled in, but this means there are 1-2 big tasks for each milestone, and then some (hopefully) smaller tasks sprinkled in.

Those milestones also will get me most of the way through to 0.1.0 with the basics of what is needed in a game:

- UI

- Rendering/3d/scenes

- Audio

- Physics

So then making a game by that point would basically be to go into the editor and add entities for things/worlds, write and hook up lua scripts for crap, and then likely writing an init/setup lua script and then hooking it all together.

Only thing missing there is animation, but in all honesty, if I want to make my racing game idea, I don’t think I really need any animation to have physics cars flying around a track, you just need physics to knock shit around, and maybe you can cheat animations by having obtuse ticking/whatever in lua scripts to update stuff each tick. That would definitely get laggy af most likely but we’ll see. If I can chunk out a piece of sh-, I mean, A golden masterpiece game after my 0.0.5 milestone, I think that’ll help me verify that my engine works. Skeletal animation and basic animation can all come later/after the fact. Heck controller support can be after the fact as far as I’m concerned, I’m not expecting this thing to be a masterpiece.

So that’s probably about as granular as I want to get with planning for now. There are some other things like translation support and such that I may want to add/move around, but this is the basics of what I would need to make a basic game, and as long as I don’t need anything animated, I could make a shooter, a marble roller/monkeyball game, or a racing game, or any number of other things. Maybe even a golf game, not that a golf game is the kind of game I necessarily think would be the most exciting to make, but it could also have some interesting value add done to it to add loops, and other things. And Golf It is a somewhat relaxing game, so it may make sense to try making a golf game, and it would likely be easier to make into a multiplayer game because people would hardly care about rubber banding in a golf game compared to a high stakes racing or FPS game.

UI System

Okay, now that I’ve laid out what the plan is, time to get into the specifics of the UI. Plan is to basically use Nuklear to do my UI, but nuklear works sort of like imgui, where you basically have to call nuklear functions every single frame for every single UI piece you want to render, that’s great and all but doesn’t really go well with Lua scripting, because I don’t want to have to call into a Lua Script just to get the UI stuff going, it seems like it would make more sense to have a data structure or something that is assembled in Lua, and then Lua only gets called when some input happens.

This is great but I realized that it’s a bit more complicated than I thought, for a few reasons.

- There’s more to a UI than just “Here’s an ordered list of all the widgets I want” because there’s layout involved. each row in Nuklear can have a different height, different number of columns, etc. and they all go into a window. and in the window you can have panels? and then you can have like, trees where there are children UI stuffs that can be hidden/expanded to see, but the same trees can also be used to display trees apparently? I think?

- It’s not easy to convey this all in something simple, I was hoping for just a window class to represent the UI window and then like, json or something for the rest, but it seems I’ll need some more classes to figure it all out since there are rows, with row height and number of columns, and crap like that.

- You have to call the nuklear commands for each widget to fill the context with what is there, and then you have to parse out some other nuklear commands for what to draw, or you can somehow get what you need to draw in a different format? Still trying to figure out how I can render this crap out without tying it to being OpenGL only, since I know my renderer is wayy too involved/connected to everything else right now and I don’t want to make that problem worse for when I want to add GLES 3 or Vulkan. (Yes I want to add at least one of those if not both. GLES 3 would get me mobile and web I believe. Vulkan would also get me mobile support and supposedly if you’re good it can be more efficient, but I believe that relies on the skill of your implementation and doesn’t come from just throwing crap from GL to Vulkan, so I doubt I’d actually have Vulkan be faster.)

So I’m not entirely sure yet how I want to do it, I likely will want to get the rendering working with just the windows, and then later I’ll go add in support for the widgets and such.

Likely I’ll end up having some sort of row/bucket type class/struct, and then have that contain various widgets that would be in that row, along with data for the row height, columns, etc.

I’ll also need to figure out how to configure the style/skinning as well, but I think that’s a later feature. I think once I get started I’ll be able to break it into smaller chunks, create more stories in quire for pieces, and maybe rearrange some effort to later milestones, etc.

-

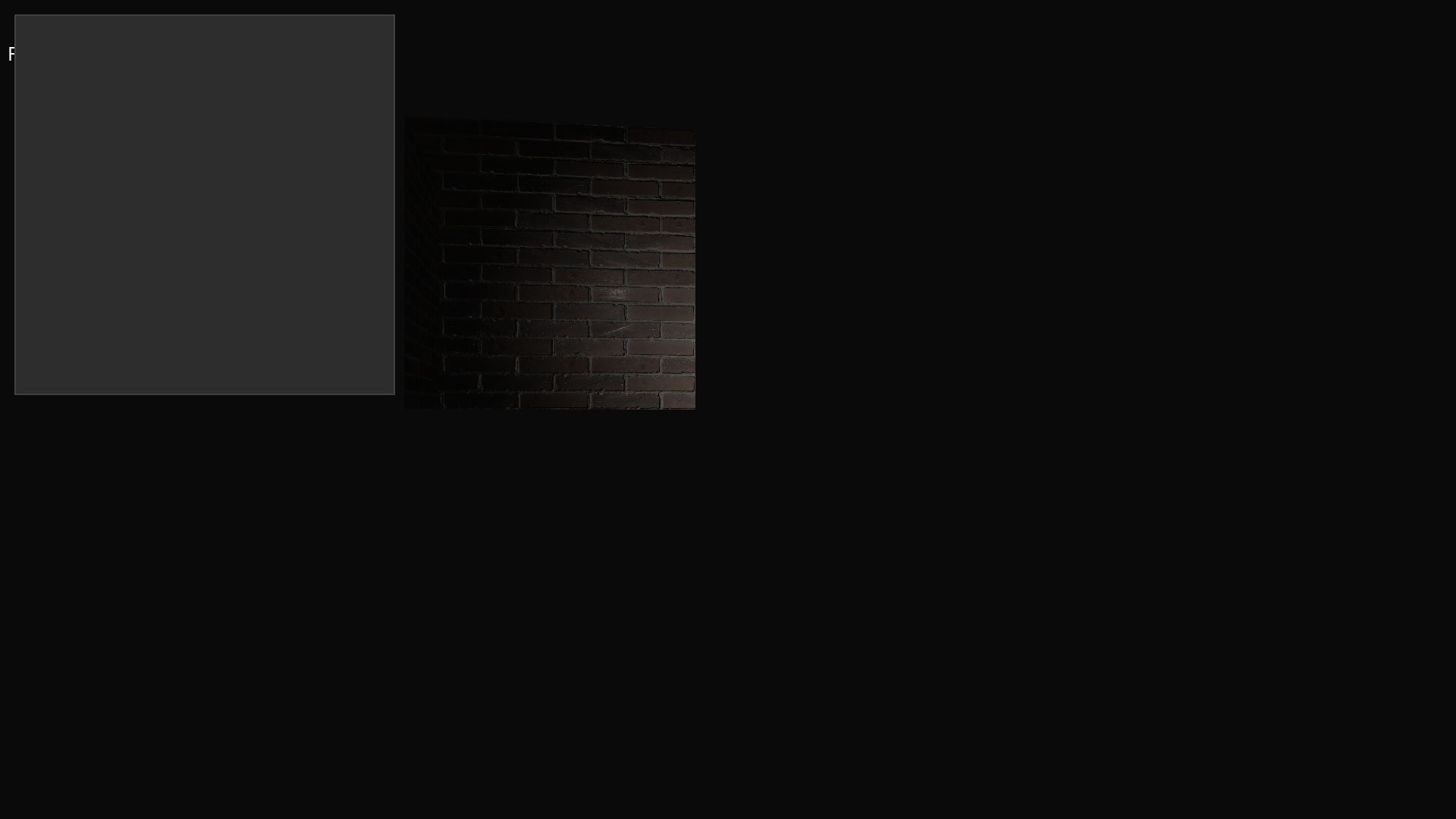

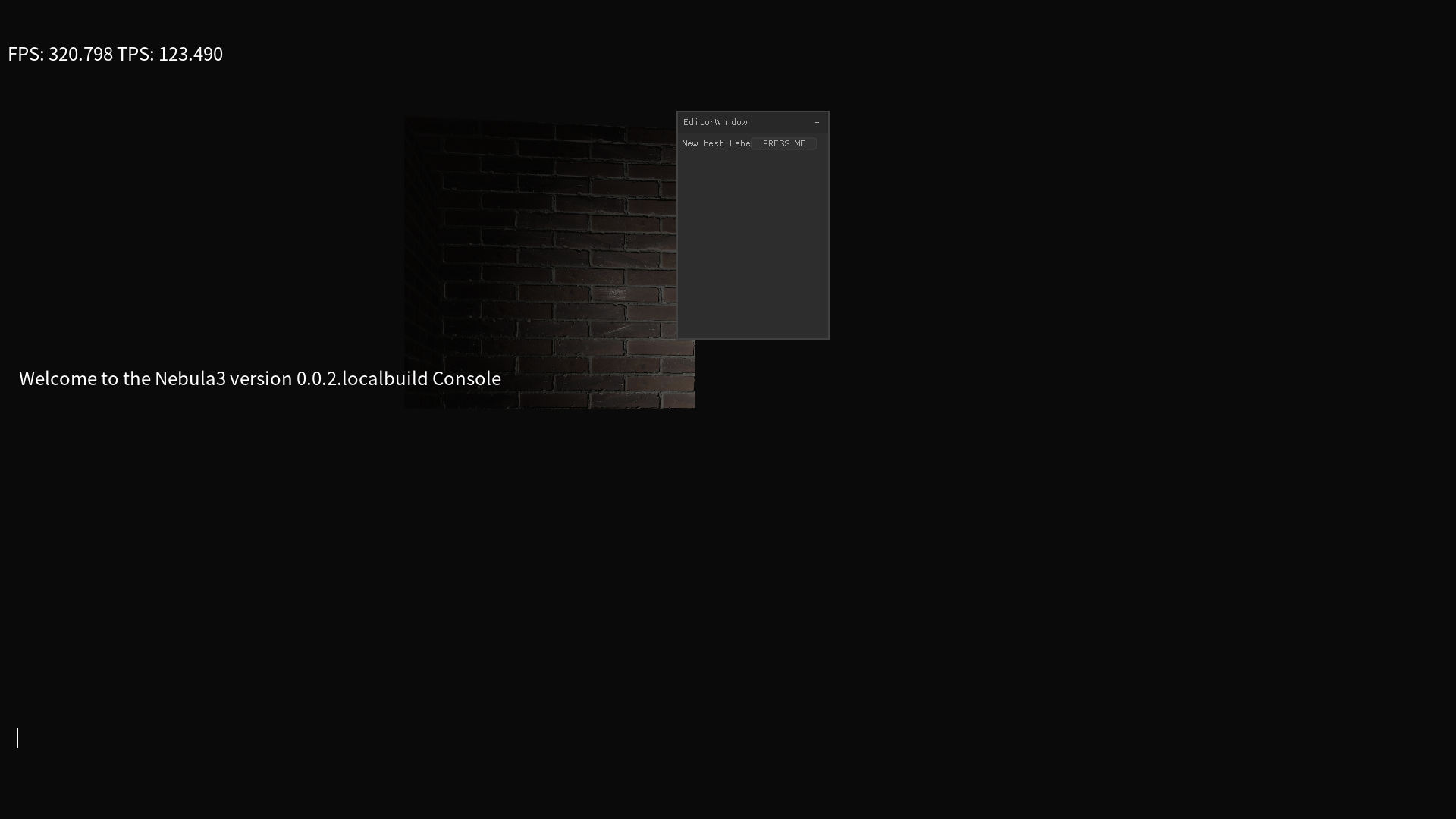

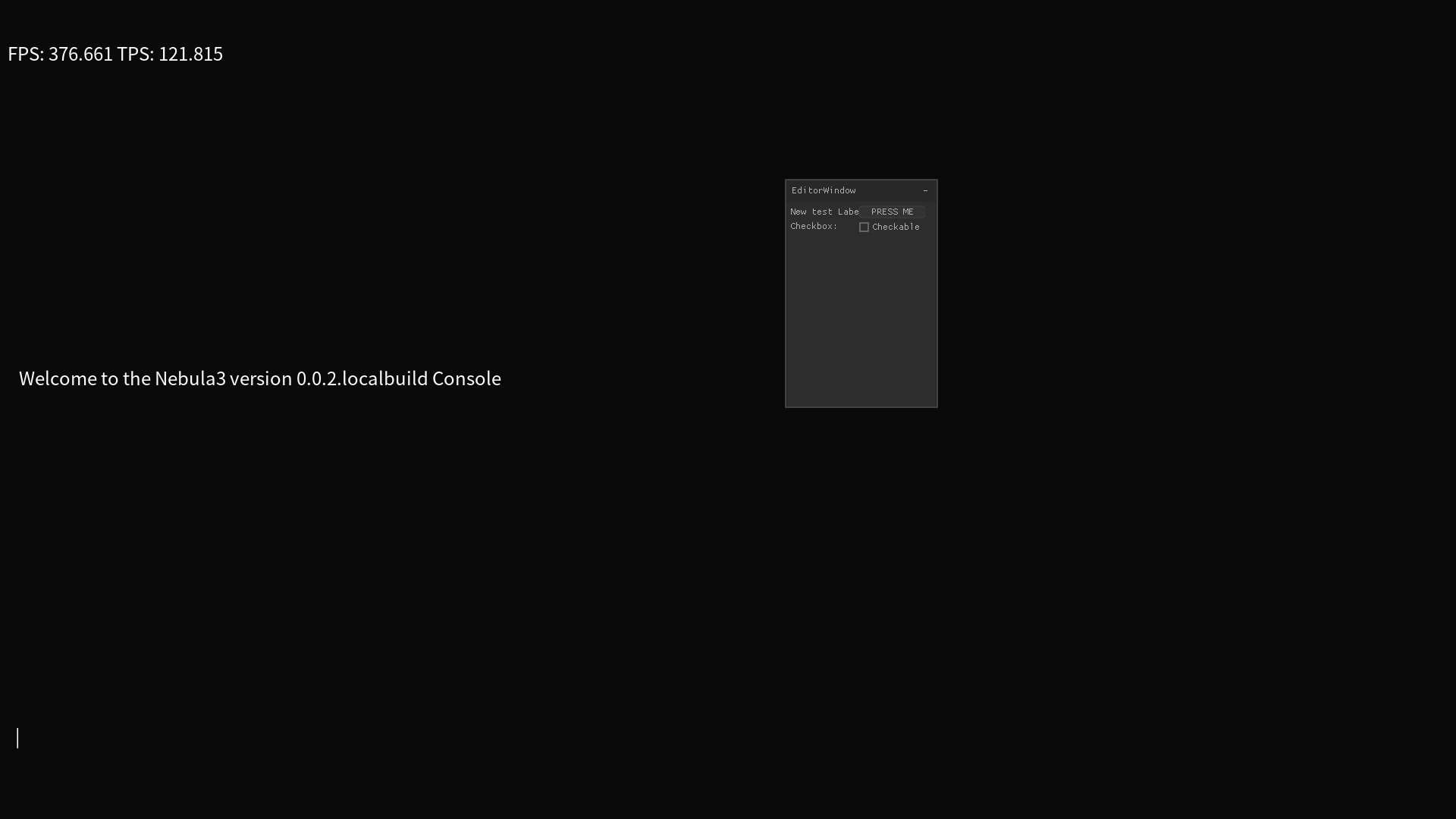

UI progress

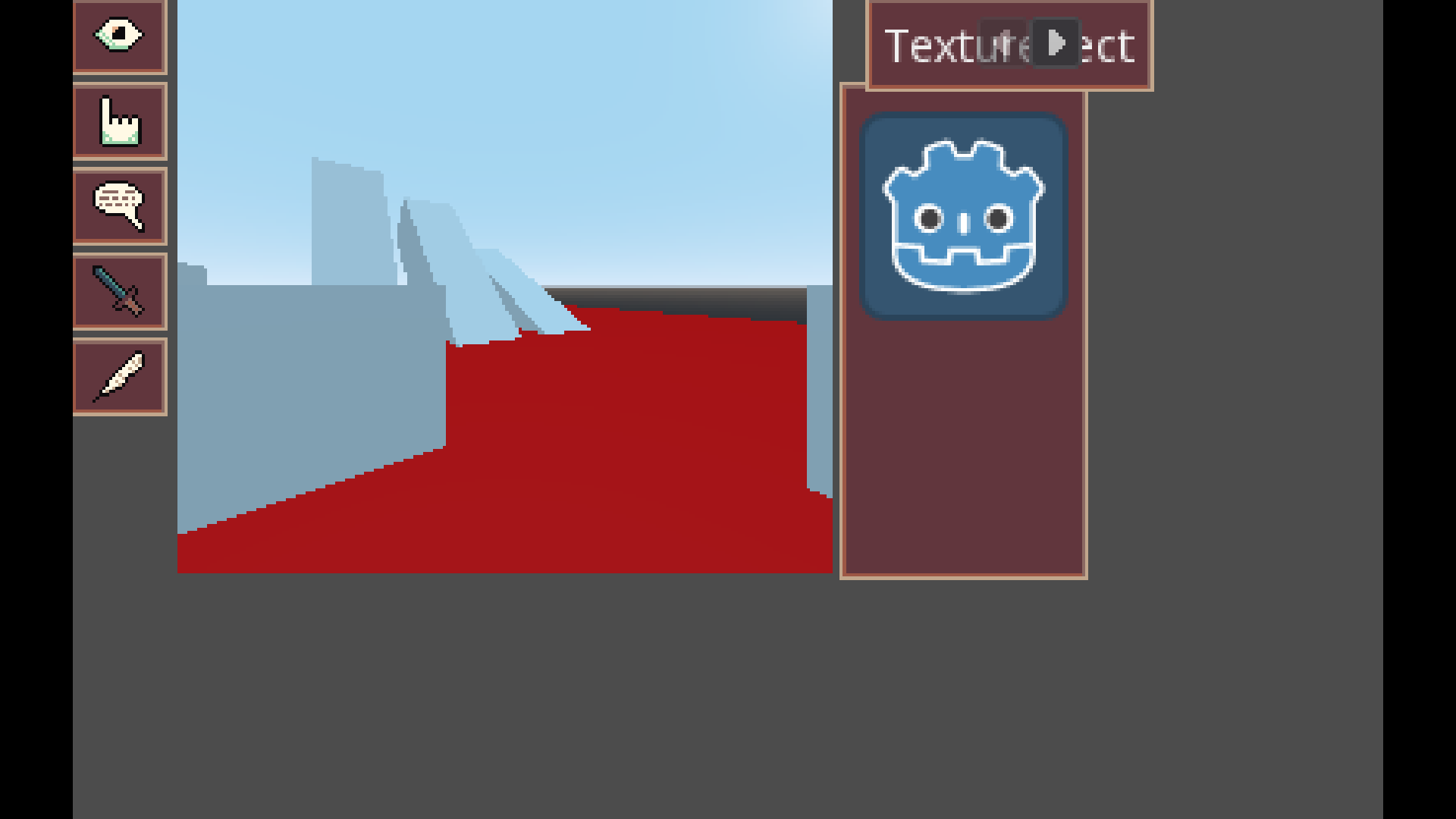

Had some troubles with porting the Nuklear examples, I haven’t gotten Lua bindings or anything together for this just yet, I basically just ripped the example nuklear rendering code for opengl out of an example, and shoved it into my own little wrapper class, and now I have a window like this:

I consider this some pretty good progress!

Okay the title bar is missing from that one, try this one:

This is great! Basically proves that my UI rendering works, along with the rest of the nuklear pipeline (it’s kind of a lot of setup stuff, very confusing, especially if you want to tweak the UI colors, use images, skin it, etc). I haven’t tested any inputs yet or that my code to shove input through to Nuklear works, but this is a great start.

Next I need to figure out how to store rows/UI widgets in my windows, and then also come up with some sort of Lua API to specify what the inputs should be, as well as get the values/inputs out. that’s likely going to be a bit rough I think, but I’ll see what I can do. After I have basic widgets working and lua bindings together I can then work on fleshing out more of the widgets and then also putting together a better editor UI, then I can really get going on stuff.

-

some art stuff/side project

I decided I wanted to test out Godot 4.0 Alpha 2 for a little bit to see what was new. So I also spent some time in blender making a few things.

I will say that I don’t think I’m ready to work in Godot 4.0 yet. There were some issues I had importing models, where I’d drag in/add the model to a scene, but the mesh would not show up all of the time. That unreliability made it difficult for me to continue. I think I found a workaround, but the workaround was too much work for it to be worth it for me to continue.

I did however do some stuff in blender and made this candle model with fully procedural textures (that I plan to bake out and export for my engine, my engine can’t do procedural textures and I think that’s not in scope. for now.)

Engine progress

Not much progress on the engine from last week sadly, partially from being distracted with the Godot 4.0 Alpha 2 build testing I was doing. Some small amount of progress on the lua bindings for the UI stuff though.

other stuff.

Lots of other things going on, trying to buy a house, and it has a bundle of things wrong with it that we need or want to fix/repair before or shortly after moving in.

Water heater isn’t to code and is unable to be replaced without cutting a hole in the drywall, failed radon test, dishwasher doesn’t work, garbage disposal doesn’t work, whole house fan vents don’t open properly, tree needs branches removed, yadda yadda yada.

But hey! it’s a house and it has lots of storage. like fit our 3 crock pots and the instapot under the counter and have room for like 6+ months worth of pantry items for us kinds of storage space.

I’ve also realized just how expensive furniture can be… we have a big enough space, and I’ve always wanted to have a bar in the house we would get. Issue is, bar counters and bar cabinets all run well into the $3k range each for the nice ones with reasonable size, and we’re going to want one that locks because I have a 13 (almost 14) yo brother-in-law who we like to have visit, and we don’t need him experimenting with that stuff without our supervision. I told my wife we’d have to get just the best farthest bottom shelf cheapest tequila for him to try if he ever asks about trying alcohol, can’t let him go come across the good stuff while we’re not there otherwise he might end up liking it.

So because of all that expense, cost, and that we want something that locks, I’m considering making a bar cabinet (and probably bar too) myself. I did a little bit of wood working growing up, mostly with my dad since he was the one super into it. I’m not sure I’d be into it enough to get like a tablesaw or anything fancy, but if I could do it with a few hand tools maybe, then I could probably slowly come up with a bar cabinet.

I’ll probably start with looking around for plans or coming up with plans for the bar cabinet in freecad. Maybe I can 3d print some of the hardware myself and then assemble the rest of it out of plywood or MDF. Be on the lookout for some CAD stuff in the future I guess instead of just game dev.

-

Engine progress

Not much to speak of this time, still slogging through the lua bindings for the UI stuff. I now have it so I can do all the same things you saw before for showing a window, but in lua scripts now.

Still no widgets or anything like that but at least now I have somewhat parity between the C++ and the Lua bindings.

Next I think is implementing lua bindings to do the Rows, which are basically to hold each row of ui elements in the window, and then I’ll need to add the C++ and lua to handle any actual input widgets or labels or progress bars and other such things.

Don’t remember if I’ve explained it before so I’ll explain it again. Basically the way Nuklear seems to work, based on my possibly limited understanding, is you essentially have a “window” which is essentially a bucket that is a separate window in the UI. You can configure it to be closable, movable, minimizable, resizable, etc.

Then in that Window you have the widgets, but they’re laid out or organized in rows. how exactly they are laid in rows can be changed based on how you want to layout the gui, but basically what I’m planning to do is to have a Window object that just holds Rows, then have the Rows actually hold the UI components themselves. that way I can programmatically generate the full window and specify layout in lua, and all that happens is my UI Manager calls draw on the window, the window calls the nuklear methods to set up the window, then calls draw on its rows, and then each row does the call to set up the layout, and then calls draw on its widgets, which then just does the nuklear calls to set up the actual widgets (and will then get the input results from Nuklear and then ping back into the configured lua script/method with the input from the widget)
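The Window -> Rows -> Widgets draw cascade described above could be sketched roughly like this. To keep it runnable without Nuklear, the `UiRecorder` stand-in only records what would be called; in the engine those spots would presumably be Nuklear calls such as `nk_begin`, `nk_layout_row_dynamic`, `nk_label` and `nk_end`. All the type names here (`UiRecorder`, `Widget`, `Label`, `Row`, `Window`) are made up for the sketch, not the engine's actual classes.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Stand-in for the Nuklear context: just logs the calls in order.
struct UiRecorder {
    std::vector<std::string> calls;
    void record(std::string call) { calls.push_back(std::move(call)); }
};

// Base class for anything that lives inside a row.
struct Widget {
    virtual ~Widget() = default;
    virtual void draw(UiRecorder& ui) = 0;
};

struct Label : Widget {
    std::string text;
    explicit Label(std::string t) : text(std::move(t)) {}
    // Would be something like nk_label(ctx, text.c_str(), NK_TEXT_LEFT).
    void draw(UiRecorder& ui) override { ui.record("label:" + text); }
};

// A row owns its layout settings and the widgets placed in it.
struct Row {
    float height = 30.0f;
    int columns = 1;
    std::vector<std::unique_ptr<Widget>> widgets;

    void draw(UiRecorder& ui) {
        // Would be something like nk_layout_row_dynamic(ctx, height, columns).
        ui.record("layout_row:" + std::to_string(columns));
        for (auto& widget : widgets) widget->draw(ui);
    }
};

// A window only holds rows; drawing it cascades down to the widgets.
struct Window {
    std::string title;
    std::vector<Row> rows;

    void draw(UiRecorder& ui) {
        ui.record("begin:" + title);   // nk_begin(...)
        for (auto& row : rows) row.draw(ui);
        ui.record("end");              // nk_end(...)
    }
};

// Builds a one-row window with two labels and returns the recorded calls.
inline std::vector<std::string> drawExampleWindow() {
    Row row;
    row.columns = 2;
    row.widgets.push_back(std::make_unique<Label>("Name"));
    row.widgets.push_back(std::make_unique<Label>("Value"));

    Window window;
    window.title = "Inspector";
    window.rows.push_back(std::move(row));

    UiRecorder ui;
    window.draw(ui);
    return ui.calls;
}
```

One thing to keep in mind when swapping in the real calls: with Nuklear the rows and widgets are normally only submitted when `nk_begin` returns true, while `nk_end` is called either way.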

Engine testing

I was reading a factorio blog post recently and they mentioned doing automated end to end testing, so I thought it would be good to think about how that could be done, perhaps there are some other frameworks or something that could be used.

I do think that basically having a way to provide fake input to the engine would be easy enough by just adding some stuff to the input manager, to allow triggering input actions outside of needing glfw to actually register the events. I haven’t fully fleshed out the details of what kind of scripts the tests would be written as, but I think it would be helpful to start at least figuring out what engine modifications might be helpful to doing automated tests.
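As a sketch of what "triggering input actions outside of glfw" might look like: if actions are looked up by name, a test can fire them through the same entry point the GLFW callbacks would use. This `InputManager` is a hypothetical, stripped-down stand-in with invented method names (`bind`, `trigger`), not the engine's real input manager.

```cpp
#include <cstddef>
#include <functional>
#include <string>
#include <unordered_map>
#include <utility>
#include <vector>

// Hypothetical slice of an input manager keyed by action name.
class InputManager {
public:
    using Callback = std::function<void()>;

    // Attaches a callback (e.g. a bound Lua function) to a named action.
    void bind(const std::string& action, Callback callback) {
        bindings_[action].push_back(std::move(callback));
    }

    // Fires an action. The real key/mouse callbacks would call this after
    // mapping an event to an action; a test can call it directly to inject
    // fake input. Returns how many callbacks ran.
    std::size_t trigger(const std::string& action) {
        auto it = bindings_.find(action);
        if (it == bindings_.end()) return 0;
        for (auto& callback : it->second) callback();
        return it->second.size();
    }

private:
    std::unordered_map<std::string, std::vector<Callback>> bindings_;
};

// Example "test script": press jump a number of times, count the reactions.
inline int simulateJumpPresses(int presses) {
    InputManager input;
    int jumps = 0;
    input.bind("jump", [&jumps] { ++jumps; });
    for (int i = 0; i < presses; ++i) input.trigger("jump");
    return jumps;
}
```

The upside of going through one shared `trigger` path is that the game code can't tell fake input from real input, so the tests exercise the same bindings a player would.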

Things like being able to run in a headless mode, that way you can avoid needing to use GPU and still be able to run multiple tests in parallel on the machine.

Maybe having some sort of “on demand” rendering to allow taking screenshots when needed but otherwise just don’t render anything? I’m trying to figure out how useful automated tests would be for testing actual gameplay vs testing just general engine features to ensure there are no regressions, and I suspect taking screenshots may not be the most useful, because I could imagine that the graphic quality would vary a lot at least while a game is being worked on, but maybe if there was a consistent test case for the engine to verify that the graphics rendering seemed to match at least somewhat closely between different renderers?

Additionally, it might be worth being able to capture/save the entity/component state to compare. Comparisons of state could then be done between the specific entities whose functionality is being tested, and it would work regardless of the graphics changing.
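As a rough idea of how that state comparison could work, here is a small Python sketch. It assumes (purely for illustration) that the world can be dumped as a dict of entity id to component name to plain field values; `snapshot` and `diff` are hypothetical helpers, not existing engine functions.

```python
import copy


def snapshot(world, entity_ids=None):
    """Deep-copy the component state of the chosen entities (or all of them)."""
    ids = list(world) if entity_ids is None else entity_ids
    return {eid: copy.deepcopy(world[eid]) for eid in ids}


def diff(before, after):
    """Return {(entity, component): (old, new)} for every component that changed."""
    changes = {}
    for eid in before.keys() | after.keys():
        old_components = before.get(eid, {})
        new_components = after.get(eid, {})
        for name in old_components.keys() | new_components.keys():
            old, new = old_components.get(name), new_components.get(name)
            if old != new:
                changes[(eid, name)] = (old, new)
    return changes


world = {
    "player": {"NameComponent": {"name": "Hero"}, "TransformComponent": {"x": 0, "y": 0}},
    "crate": {"TransformComponent": {"x": 5, "y": 5}},
}

before = snapshot(world)
world["player"]["TransformComponent"]["x"] = 1   # the behaviour under test
after = snapshot(world)

changes = diff(before, after)
```

A test would then assert on `changes` directly, for example that only the player's transform moved and the crate stayed put, without caring what the frame looked like.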

So those are some thoughts I had about potential features that might need to be added to facilitate writing automated tests, so hopefully then during development of a game, I could set up some automated tests for that game to test some functionality, maybe to verify that the main menu, pause menu, options menu all work and don’t crash, and then I could maybe add them to all run in Jenkins to be automated like once a week or month.

Next steps

Moving next week to a new place. Lots of stuff going on for that, and not so much game engine progress expected. But once we get the place set up I’ll probably be right back at it trying to get my UI stuff figured out so I can get the editor on track. Once the editor is on track I’m hopeful I could start making some real games perhaps, even without fully workable physics or audio.

-

New place

Took a bit longer to complete the move and get my desktop set up than expected, but had an unexpected funeral and road closure keeping me in a different state, so the one week I expected to not have any progress turned into 3 weeks.

Still trying to set up my office, need to set up the 3d printer and better route the ethernet cable from the kitchen on the first floor to my office in the basement. I got a 500ft spool of bulk ethernet cable to be able to route that around everything along the walls, and was going to 3d print brackets to hold it in place.

In order to better route the ethernet cable I’m designing some brackets to use to mount it to the wall so I can 3d print them off, figure since I’ve got a house now I may as well make use of the 3d printer to make things.

The bracket is basically just a half circle with a little hole for a nail to go through:

but I made it parametric in FreeCAD so I can change the size of the hole to fit different nails or screws, and the size of the arc to fit different size cables, or the thickness or overlap of the parts to make it more sturdy if needed. Learning FreeCAD has been good, and I think it might help if I wanted to make furniture or other things that may not be 3d printed, but require good design/planning ahead to make sure everything fits together. Blender is nice for 3d but not sure it’s really parametric in the ways I need to make physical objects.

Game Engine

The status on the game engine is pretty same-y same-y, still doing UI Lua bindings and stuff to try to get it to where I can at least add a single widget to a row and add that to a window.

Once I’m that far, implementing other widgets shouldn’t be too bad since Nuklear pretty much pre-cans all the hard parts, so I’m hoping it should be smoother at that point. Once I’ve got a few widgets in I should be able to get some sort of editor UI going, and then hopefully from there it’s simply verifying the model loading stuff was ported properly, maybe dressing it up a bit to make it easier to fit into the current framework of how meshes work, and then I should be set for the basics of things.

Then I’ll tackle animation, physics, audio, etc in some future milestone, but I could feasibly start making levels/logic/something once the UI/model loading stuff is in. UI styling might need to be done at some point to make it not look awful, but that’s a longer distance worry for now I think.

-

Engine Update

Been in a little bit of a rut with the engine where I just have a slog of things left to write, make bindings for, etc for the UI stuff.

So I haven’t been super excited to work on the engine between that and all the other crap going on, so I figured it may make sense to start a side project to do something to still do coding/crap but make it so I can come back to the engine with a fresh mind, like how I worked on that dungeon crawler ages ago for a few months.

Effectively nothing has been finished since last time other than making a Label class and lua bindings for it, and filling out the implementation using nuklear, but not testing it. Now I’m trying to do a button but not really excited about it.

New Side Project

So the new side project I decided to do literally 1 minute prior to writing this. Basically I was just on my computer clicking around the browser windows, as one does when supposed to be doing real work instead, and saw a GOG window that I had left open on the main store page, with a sliver of a screenshot of a game called Hero’s Hour on it. (Not sure why that link looks like xn–<name>-nw6e to me, but maybe that’s a preview only bug?)

Opened it up and I saw the graphics and I thought to myself “making a little top down world map view RPG might be neat, maybe jrpg, maybe not, not sure. Let’s open Godot” and so a game idea was born. That idea is currently only maybe 5 minutes old and will probably die about 5 hours from now.

Anyway figured I’d stop losing for that update, will try to write again next week on how long that new game idea survived.

-

Side project progress

Going to jump right to the side project, nothing to report for the engine

Art

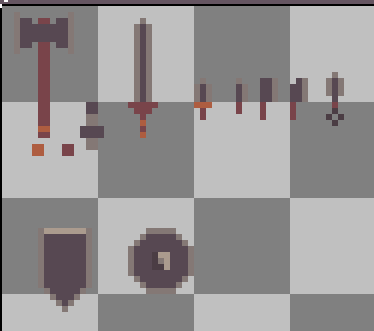

I’ve got a tileset I’ve been working on, forgot how fun it is to make a pixel art tileset:

The tileset has grass, dirt, sand, dirt/sand path, and paved path, trees, various buildings, and some mountains. It’s meant to have some tiles be on a layer over the top of the base ground layer, which may make the mapping a little bit complicated, but shouldn’t be too horrid.

I think I’m going for more of a jrpg type thing, or maybe sort of like a roguelike. I’m definitely leaning towards using some proc gen (discussed below) for creating maps/overworld, etc, and I’m thinking turn based will be best.

I’m also not aiming to have different heights/levels in the map, that’s just too complicated art wise and mapping wise, but I’m sure it can be faked with setting up tiles in a certain way.

Tech

So I’m planning on using proc gen to generate the map, instead of manually placing tiles, that’s nice because then I can use it to create:

- Replayability, you can play the same game multiple times with different layout, different world, and different experiences

- Larger worlds than I care to make manually, which is great because making large world maps, or lots of small interiors sounds like it could get very tedious, so this way I can make a larger world with more crap to do, without spending too much time on all the individual crap, I just make a tool that makes each type of crap and then another tool to tell the crap where to be crapped out at.

I plan to do at least some of this proc gen, for the world map at least, using Wave Function Collapse. You can see one sample implementation and what it does here but I’m planning to make my own implementation in GDScript (for now, maybe I’ll figure out GDNative later if that’s too slow) so that I can understand it, and potentially tweak it if needed.

The gist of wave function collapse (WFC), as it was described in one of the several videos I watched on it, is that it’s like solving a Sudoku puzzle. You start with a few pre-chosen tiles, either randomly chosen or given to you, and then you go through all the other slots and narrow down the remaining options for what value/tile can go there. Once a slot is down to only one possible tile, or as few as can be, you just randomly choose one from the options that can be there at that tile, and then you update the rest of the slots with the options remaining.
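Here is a very stripped down sketch of that loop, written in Python since it reads close to GDScript. It is not the implementation from the linked repo or my eventual Godot one. The three tile names and the `ALLOWED` table are made up for the example, the rules ignore direction, and a contradiction just returns `None` instead of backtracking.

```python
import random

# Hypothetical rule table: which tiles may sit next to which (same in all directions).
ALLOWED = {
    "water": {"water", "sand"},
    "sand": {"water", "sand", "grass"},
    "grass": {"sand", "grass"},
}


def neighbors(x, y, w, h):
    for dx, dy in ((1, 0), (-1, 0), (0, 1), (0, -1)):
        nx, ny = x + dx, y + dy
        if 0 <= nx < w and 0 <= ny < h:
            yield nx, ny


def propagate(options, start, w, h):
    """Narrow neighbouring slots until nothing changes; False on a dead end."""
    stack = [start]
    while stack:
        x, y = stack.pop()
        # Every tile that at least one remaining option here tolerates next to it.
        permitted = set().union(*(ALLOWED[t] for t in options[y][x]))
        for nx, ny in neighbors(x, y, w, h):
            narrowed = options[ny][nx] & permitted
            if not narrowed:
                return False
            if narrowed != options[ny][nx]:
                options[ny][nx] = narrowed
                stack.append((nx, ny))
    return True


def generate(w, h, rng, seeds=None):
    """Return a w*h grid of tile names, or None if generation got stuck."""
    options = [[set(ALLOWED) for _ in range(w)] for _ in range(h)]
    for (x, y), tile in (seeds or {}).items():   # the pre-chosen tiles
        if tile not in options[y][x]:
            return None
        options[y][x] = {tile}
        if not propagate(options, (x, y), w, h):
            return None
    while True:
        undecided = [(len(options[y][x]), x, y)
                     for y in range(h) for x in range(w) if len(options[y][x]) > 1]
        if not undecided:
            return [[next(iter(cell)) for cell in row] for row in options]
        # Pick among the slots with the fewest remaining options.
        fewest = min(count for count, _, _ in undecided)
        _, x, y = rng.choice([u for u in undecided if u[0] == fewest])
        options[y][x] = {rng.choice(sorted(options[y][x]))}
        if not propagate(options, (x, y), w, h):
            return None


grid = generate(6, 4, random.Random(1), seeds={(0, 0): "water", (5, 3): "grass"})
```

With this particular rule table sand can sit next to anything, so the generator can never paint itself into a corner. A real tileset with stricter rules would need a restart or backtracking step where this sketch returns `None`.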

The way you know what tiles can go next to each other is by defining the possible adjacent tiles for each tile, which, if you look at the above github repo, can be done by providing a sample or “training” tilemap/texture, which lets the algorithm know how/where to put different tiles in relation to each other.
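Pulling those adjacency rules out of a sample map is the easy part. A small sketch, again in Python and with a made-up sample where `W`, `S` and `G` stand for water, sand and grass (the function name `learn_adjacency` is also just for illustration): for every tile and direction it records which tiles were ever seen on that side.

```python
from collections import defaultdict

DIRECTIONS = {"right": (1, 0), "left": (-1, 0), "down": (0, 1), "up": (0, -1)}


def learn_adjacency(sample):
    """Map (tile, direction) -> set of tiles seen on that side in the sample."""
    rules = defaultdict(set)
    height = len(sample)
    for y, row in enumerate(sample):
        for x, tile in enumerate(row):
            for name, (dx, dy) in DIRECTIONS.items():
                nx, ny = x + dx, y + dy
                if 0 <= ny < height and 0 <= nx < len(sample[ny]):
                    rules[(tile, name)].add(sample[ny][nx])
    return dict(rules)


# Hypothetical hand-made "training" map.
SAMPLE = [
    "WWSG",
    "WSSG",
    "SSGG",
]

rules = learn_adjacency(SAMPLE)
```

In this sample water never touches grass directly, so `G` never ends up in any of the `W` entries, and the generator would always put sand between them. Anything that does not appear in the sample is simply not allowed, which is why the sample map has to show every combination you want to see.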

One thing to note about WFC is that it is all based on local connectivity, how each individual tile connects to its neighbors, there is not really any stored/saved data about bigger picture things, which means you can very easily end up trying to make a generator to give you square rooms, but wind up getting non-square rooms, or other similar issues, simply because the WFC algorithm doesn’t really have any data on the inside vs outside of the room. This kind of difficulty can be solved though, by including a sample tilemap that uses a different tile for the interiors of each room, compared to the exteriors, that will keep the room interiors internal to the room and prevent the room from sprawling on.

How is WFC different from Wang Tiles?

Wang tiles are something I’ve done in the past and I think mentioned somewhere upwards on this chain, and I wanted to kind of mention how they seem similar, at least in theory, to WFC. With WFC you define the possible adjacent tiles and try to pick a tile that can go in a spot that is adjacent to the others, based on edge connectivity.

In theory, you could probably combine WFC with Wang tiles and be totally fine, so what’s the difference between what I’m trying to do now and what I did before?

Previously, I did Wang tiles, and the ones I used were basically large blocks made up of many smaller tiles/pieces. In this case I’m trying to do WFC on those smaller pieces. Instead of having to make the tiles and then manually create rooms/chunks with them, I can just focus on making the little tiles, piece them all together in an example map, and let WFC do its thing from there.

Another difference between what I did earlier and what I’m doing now is that previously I did not propagate any possible options through the map when generating it. Instead I defined the edges of my confined/finite map as being closed off, then randomly chose tiles to fit with that, making sure that the connectivity of any already placed tiles fit in with the connectivity of the tile chosen.

Is there a reason you need to keep track of and propagate the possible tiles instead of just picking from pre-computed connectivity lists where you group all tiles with specific connectivities together? Not as far as I can tell. In fact I think having to propagate your options through would be slower computation wise. However, this lets you go through and find the tile with the lowest number of possible choices, fill that in, and propagate from there. So hopefully (and this is only a hope, I suspect it’s still possible) there will be fewer instances of the algorithm running itself into a corner where no tile exists that can fill a spot. That happened multiple times when doing Wang tiles, and it meant that to get more generation to work I had to either spend a ton of time manually creating new Wang tiles to fill the connectivity combinations that I hadn’t created yet, or deal with having a 4 way intersection wedged in there to not block off any paths.

I will say that doing the Wang tiles the way I did before meant I could just do a random walk to make sure you could get from point A to point B, by pre-defining the necessary connectivity without defining tiles for those slots yet, which let me create a traversable level from start to finish. Here, for an overworld, I think it should be possible to not run into too many cases where something would be untraversable, or if anything I can pre-define some parts of the overworld to make sure that things are traversable, and then just proc gen the rest.

Also in theory, with WFC I could make an infinitely generated overworld, and then generate each individual place on the fly. Which, that would be great and all but if I want like a classic story, and maybe some sort of sane leveling system, it may make sense to limit things a little bit to prevent things from getting too insane, although I’m not going to rule out quest and story proc gen just yet, but I think for a better experience for a jrpg type game, some intentional design is important instead of just letting proc gen go completely rampant.

Side quests though, that’s probably where I’ll let proc gen go because if the system generates towns/buildings/whatever, then the system can fill those with stuff, I’m not going to hand make everything that is like that. Proc gen for weapons, tools, items etc will probably also be useful.

Next Steps

Next steps on this project, I think getting the WFC actually working. I’ve rambled on about this, but so far I have a file in Godot that doesn’t do much, it stores out the adjacent tiles from a pre-canned 2d array so it knows how to connect things, but it hasn’t figured out how to use that to create anything just yet, and the propagation of options/narrowing down the options is going to be a little bit intense.

So far I’ve been enjoying making the tileset so that’s good, may make some sort of at least placeholder character for now, or maybe go through and make some items or ui features for later.

After the WFC generates the array of possibilities I think it’ll be a matter of getting it to create the TileMap and then putting that for someone to see, then making it so you can walk around in there, have collision, etc.

-

Side Project progress

I decided to spend more time making art instead of getting the proc gen all figured out, so I also made some item art:

Basically went a little bit overboard making random items, trying to have a variety of variants for each item. I do get that I could just do palette swapping or other shader tactics to get different colors, but I decided not to do that for now. This way all color variants are strictly using the DawnBringer 32 palette.

I also did some additional outdoor tileset art, but not much new since last post.

Proc gen