Let’s talk about hacking ROMs. Well, more precisely, let’s talk about NOT hacking ROMs.

I can do it, but I’m not great at it… and I find most emulator debuggers quite disagreeable. I think it would take me a long time to do some of the hacks I need to do. I have some workarounds that have proved quite versatile… they are MORE versatile than hacks, in some cases. And less, in some others. But they can be way faster than hacking, in some cases.

First, I should mention, classic Game Genie and Action Replay cheats are easier than hacks. Yeah, sure, always having 99 lives isn’t quite as elegant as it would be to simply never lose any of your 3 starting lives. And blinking invincible as if you just got hit for the entire game isn’t quite as elegant as simply clipping through bad guys without taking damage. But it does get the job done.

Moving on to more interesting things: I call them “input macros”. As a simple example, I have a game with a hidden mode that requires pressing select five times on a menu. Now, I don’t want to even have to expose the select button to the user as a binding. So what I do instead is configure the triangle button to set up a sequence that seizes control of the input and sends a series of five select button presses across several frames. It can get more complex than that. In Turrican, we have dozens of screens full of code doing this kind of stuff. For instance, to drop a bomb you have to crouch and shoot. Ideally we’d have a single button that does this instead, so the macro crouches for the right (minimum) number of frames, presses the button at the right time, and then stays crouched long enough for it to register.
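Here’s a minimal sketch of what an input macro like that could look like. The names (`InputMacro`, `select_presses`) and the hold/gap frame counts are made up for illustration; the real timings depend on how often the game polls the pad, and this isn’t the actual code from any of our ports.

```python
from collections import deque


class InputMacro:
    """Plays back a scripted list of per-frame button sets, overriding the player."""

    def __init__(self):
        self.queue = deque()

    def start(self, frames):
        # Seize control: from now on the game sees these frames, not the pad.
        self.queue = deque(frames)

    @property
    def active(self):
        return bool(self.queue)

    def poll(self, player_buttons):
        """Call once per emulated frame; returns the buttons the game should see."""
        if self.queue:
            return self.queue.popleft()
        return player_buttons


def select_presses(count, hold=2, gap=2):
    """Build `count` presses of select: held `hold` frames, released `gap` frames.

    The hold/gap values are guesses; tune them per game.
    """
    frames = []
    for _ in range(count):
        frames += [frozenset({"select"})] * hold
        frames += [frozenset()] * gap
    return frames
```

Binding triangle then just means calling `macro.start(select_presses(5))` when it’s pressed, and running every frame’s input through `macro.poll(...)` before the emulated pad sees it.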

Another input-related thing we need to do is to fix menus so they behave in more sensible ways, using the console’s normal accept/cancel buttons (which vary by console and region of course, most noticeably differing between Nintendo and everyone else). This requires unhooking the normal input bindings completely and simulating a different kind of setup depending on whether we’re in a menu. Detecting if we’re in a menu… can be hard. In theory somewhere there’s probably a variable that says what game mode we’re in… maybe, maybe not. Maybe you can only tell by noticing that certain code only runs when you’re in a menu, and you can detect when that PC address is getting hit. That’s too close to hacking for my tastes though. Here’s what I do instead: I scan the VRAM for particular patterns of tiles. That works for any screens that have some unique static elements. If that’s not good enough, and it’s a transitory condition, you can set up a state machine to catch transitions between menus.
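To make the VRAM scanning idea concrete, here’s a rough sketch. It assumes the emulation framework can hand you the background tilemap as a flat list of tile indices; the signature format and the `ScreenTracker` class are hypothetical names of mine, not part of any real emulator API.

```python
def matches_at(tilemap, map_width, pattern, x, y):
    """True if the rows of tile indices in `pattern` appear at tile position (x, y)."""
    for row, tiles in enumerate(pattern):
        start = (y + row) * map_width + x
        if list(tilemap[start:start + len(tiles)]) != list(tiles):
            return False
    return True


class ScreenTracker:
    """Tiny state machine: remembers which known screen was last detected."""

    def __init__(self, signatures):
        # signatures: name -> {"x": ..., "y": ..., "tiles": [[row], [row], ...]}
        self.signatures = signatures
        self.current = None

    def update(self, tilemap, map_width):
        """Call once per frame. Returns (previous, current) on a change, else None."""
        detected = None
        for name, sig in self.signatures.items():
            if matches_at(tilemap, map_width, sig["tiles"], sig["x"], sig["y"]):
                detected = name
                break
        if detected != self.current:
            previous, self.current = self.current, detected
            return (previous, detected)
        return None
```

The transition tuple is the useful part: you can swap input bindings when you go from no screen to `"options_menu"`, or treat “was in menu A, now nothing matches” as a transitory state of its own.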

Scanning VRAM is kind of more of a dumb thing. You do this, I do that. If you try to analyze the game’s programming, you can get into mind games really quickly. The game will be reusing code for something, or it will do every screen in a completely different way (that’s totally normal; each screen may have been programmed by a different person or be pushing the hardware enough to be basically a different program) so your reverse engineering workload ends up proportional to the number of scenes. But scanning VRAM is the same trick every time, and you can build tools to help you do it.

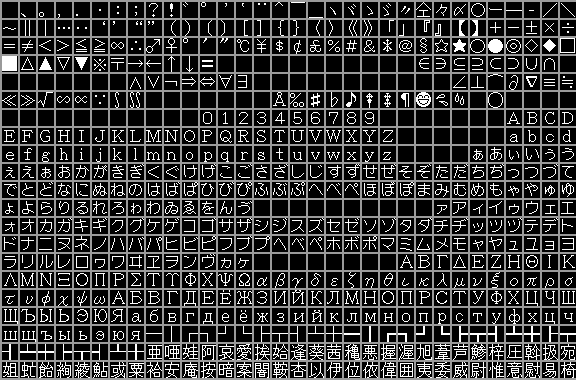

The final workaround I want to mention is the inverse of scanning VRAM – it’s modifying it! Suppose a game prints something static in Japanese and you want it to be in English. The tiles are compressed, the tilemap data’s compressed… you’re gonna crack all that, PLUS the code that draws it, and redirect it all somewhere? NOPE! Detect it, and replace it. This is easy if you have spare tiles in VRAM. But you don’t always…
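Detect-and-replace can be sketched like this, assuming you do have spare tile slots. Everything here is illustrative: `tiles` stands in for tile graphics in VRAM, `tilemap` is a flat list of tile indices, and `spare_base` is a slot you’ve already confirmed the game never uses.

```python
def patch_static_text(tiles, tilemap, map_width, x, y, expected, new_tiles, spare_base):
    """Swap a detected run of tilemap entries for replacement art.

    tiles      -- list of tile graphics (one bytes object per tile)
    tilemap    -- flat list of tile indices
    expected   -- tile indices the original text shows at (x, y)
    new_tiles  -- replacement tile graphics, one per entry in `expected`
    spare_base -- first unused tile slot (assumed to really be free)
    Returns True if the patch was applied this frame.
    """
    start = y * map_width + x
    if list(tilemap[start:start + len(expected)]) != list(expected):
        return False  # the original text isn't on screen; leave VRAM alone
    for i, gfx in enumerate(new_tiles):
        tiles[spare_base + i] = gfx        # park the new art in a spare slot
        tilemap[start + i] = spare_base + i  # point the map entry at it
    return True
```

Run it every frame. Once patched, the tilemap no longer matches `expected`, so it does nothing until the game redraws the original text, at which point it patches again.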

I came up with a neat way to fix that which has totally worked for me on the PC Engine. It’s not directly scalable to the other consoles, but I’ll tell you how it might be (not proven yet.) The PC Engine is odd for having twice as much VRAM addressable for tiles as it has VRAM. So you can just park extra tiles in the 2nd half of VRAM and rewrite the tilemap to reference it. A big concern when patching art like this is whether it will fade out with the rest of the screen. You could patch it with PNGs that don’t use the proper console palette, but what’s going to make it fade out? It can be really tricky (in an emergency you can fade it yourself by checking a known-white color in the palette).
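The emergency fade trick might look something like this. It assumes the known-white palette entry has already been decoded into per-channel levels from 0 to `max_level` (7 would suit a console with 3 bits per channel), and that the patched art is ordinary 8-bit RGB. The function names are mine.

```python
def fade_factors(known_white, max_level=7):
    """How far the game has faded each channel, as fractions from 0.0 to 1.0.

    known_white -- current (r, g, b) levels of a palette entry that is pure
                   white when the screen is fully faded in (an assumption you
                   have to verify per game).
    """
    return tuple(level / max_level for level in known_white)


def fade_pixel(rgb, factors):
    """Scale one 8-bit RGB pixel of the patched art by the game's fade."""
    return tuple(int(round(c * f)) for c, f in zip(rgb, factors))
```

When the game’s white is at full brightness the patch is drawn untouched; as the game steps its palette down toward black, the patch follows along.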

Here’s the plan I have for other consoles (and I’m definitely going to have to attempt it on the SNES soon). Who says the hardware can’t have a separate bitplane tacked onto it in a way that the CPU can’t even access? I can access it from the emulation framework. So I will just “add” tiles by putting them in an auxiliary bank of VRAM and then set a bit in the separate fake bitplane which will signal the PPU renderer for the system to fetch the tiles from the auxiliary bank.
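Since this one isn’t proven yet, take the following as a sketch of the idea and nothing more. `AuxTileLayer` and its methods are hypothetical, and the rule that a CPU write to a tilemap entry clears its flag is my own assumption about how you’d keep the fake bitplane from going stale.

```python
class AuxTileLayer:
    """Emulator-side extras the emulated CPU can't see: aux tiles plus a flag plane."""

    def __init__(self, map_size):
        self.aux_flags = [False] * map_size  # the fake bitplane, one bit per map entry
        self.aux_tiles = []                  # the auxiliary tile bank

    def add_tile(self, gfx):
        """Store replacement art in the aux bank; returns its aux tile index."""
        self.aux_tiles.append(gfx)
        return len(self.aux_tiles) - 1

    def place(self, tilemap, map_index, aux_index):
        """Point a tilemap entry at an aux tile and set its bit in the fake plane."""
        tilemap[map_index] = aux_index
        self.aux_flags[map_index] = True

    def on_cpu_tilemap_write(self, map_index):
        # Assumption: the game just redrew this entry, so it means real VRAM again.
        self.aux_flags[map_index] = False

    def fetch_tile(self, tilemap, map_index, vram_tiles):
        """What the PPU renderer would call instead of reading VRAM directly."""
        index = tilemap[map_index]
        if self.aux_flags[map_index]:
            return self.aux_tiles[index]
        return vram_tiles[index]
```

The game’s own VRAM never has to give up a tile slot; the only change to the emulated hardware is the one extra lookup in the renderer’s tile fetch.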

This is kind of an approximation of how consoles have extended their PPU capabilities before (the GBA and the NDS did quite a bit of this). There’s no reason you can’t apply it more generally, though. I feel sure you could do it on a PlayStation, for instance. In fact every HD textures approach for 3D consoles is working in a philosophically similar way. There’s additional resolution packed away in a buffer not accessible to the CPU (because it wouldn’t know how). I look forward to running into the problem on a PSX game, but I have to find a PSX game to port first…