Coolcoder360's Devlog/updates thread

-

I managed to get a Jenkins build to actually complete, with the artifacts getting zipped and archived. And then I realized that because I built it with a Dockerfile based on the Alpine image, it wouldn’t run on any of my computers: Alpine is musl based, and my Linux distros are all glibc based. So the next fun thing is to switch my Dockerfile over to be based on Debian instead of Alpine, and hopefully that’ll resolve the issue. Because of course getting it all to compile as expected would be too easy.

And of course this means having to figure out all the package names for all the dependencies again, but for Debian this time. So that’s fun.

Once that’s completed, it’ll be on to implementing version numbers so the engine can print out its own version, and then maybe I’ll get back to the rendering, or work on getting the Dockerfile to build it all for Windows and set up the Jenkins job for that.

-

I bring great tidings of screenshots, and a description of how fiddling with RenderDoc helped me figure out why my rendering wasn’t working. The tl;dr is that my shader was borked, and the correct line was right above the incorrect line in my shader, but commented out.

So we’ll start from the beginning: nothing was rendering at all, but of course it’s a PBR renderer and there were no lights, so I thought the problem might just be that there were no lights to light up my test cube. There was also the issue of not knowing offhand which way my camera started facing, so I had to hack in some camera movement to turn the camera, which worked on paper, but still got me a blank screen.

First I’ll back up a bit and explain how the renderer works, since it’s part deferred, part forward, and all pain in the butt.

So the rendering happens in a few stages. First the deferred geometry gets rendered to framebuffers, with the diffuse, normal, metalness, roughness, and AO textures just being written as-is to textures on the framebuffer. After the geometry is rendered like that, the framebuffer gets swapped out to render to the screen, and the framebuffer with the data is read in a separate draw pass to calculate the lighting.

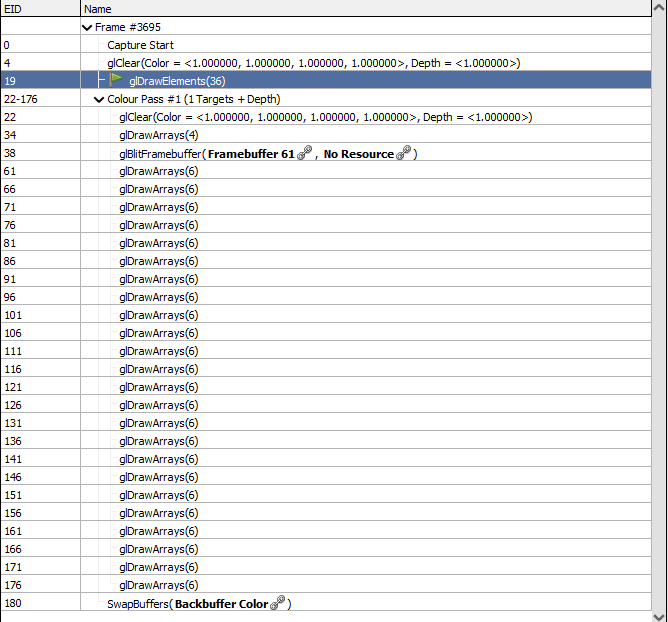

After that, anything transparent gets rendered using forward rendering, and then the UI stuff gets rendered on top of that.

So looking in RenderDoc, here is the list of calls:

The `glDrawElements(36)` is the call to draw my 3D cube, all those `glDrawArrays(6)` calls are drawing text on the screen for the FPS counter (yes, that is a lot of overhead for an FPS counter), and then that `SwapBuffers` is the end of the frame where it actually shows stuff on screen.

So that tells me that it’s actually trying to draw something on screen, so my RenderState stuff works! Yay!

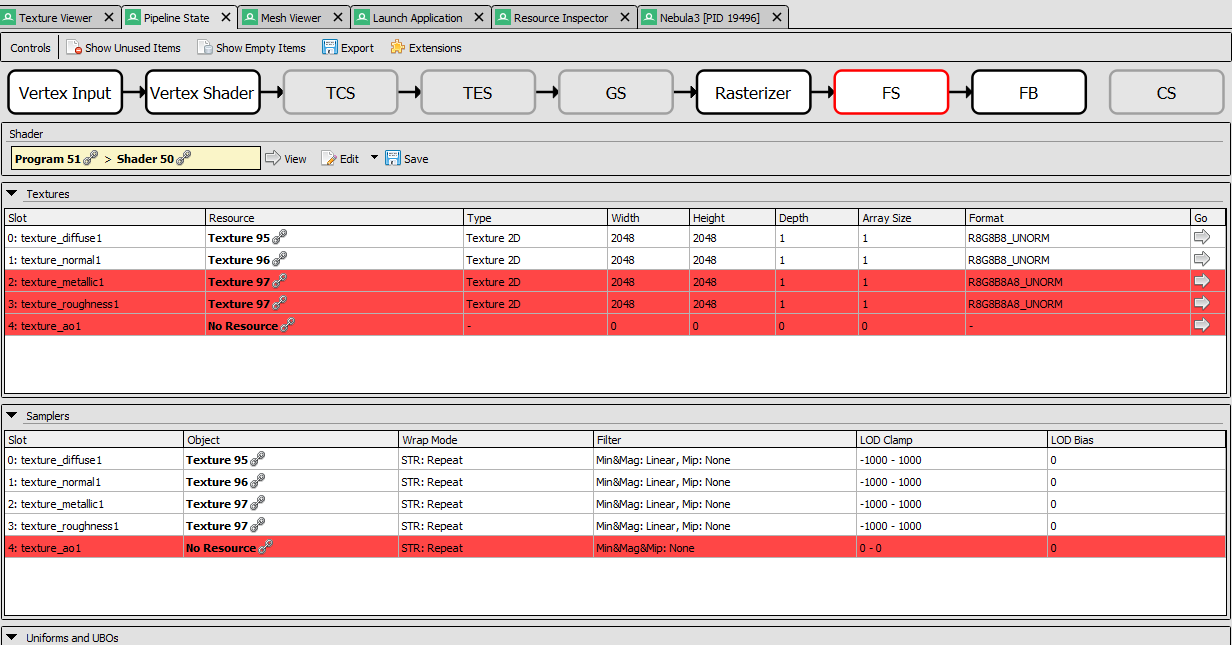

So then I looked further into RenderDoc, and me being me, I didn’t read a tutorial about it or anything, just started poking around, but the tabs I found most useful were Pipeline State and Mesh Viewer. First I looked at the pipeline state and saw this:

I knew that the AO texture didn’t have anything there, and I didn’t expect that to cause issues, and I expected the metallic and roughness to be the same texture, but what I didn’t know/expect was for them to show up as invalid textures. Lucky for me I had some logging in my texture loading class that printed out the number of channels in a texture, and sure enough, the broken textures were single channel. The problem lay in how I was setting up the `glTexImage2D` format; I just needed to tweak the format and voila, that was resolved, but still no output.

Next I went to the Mesh view and saw this:
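The gist of that kind of fix is picking the `format` argument of `glTexImage2D` from the channel count the image loader reports, instead of assuming RGB/RGBA. A minimal C++ sketch, where `formatForChannels` is a hypothetical helper and the enum values are the ones the OpenGL spec defines (redefined locally here only so the snippet stands alone; in a real build they come from the GL headers or loader):

```cpp
#include <cstdint>
#include <stdexcept>

// Values as defined by the OpenGL specification.
constexpr std::uint32_t kGL_RED  = 0x1903;
constexpr std::uint32_t kGL_RG   = 0x8227;
constexpr std::uint32_t kGL_RGB  = 0x1907;
constexpr std::uint32_t kGL_RGBA = 0x1908;

// Hypothetical helper: choose the pixel-data format passed to glTexImage2D
// from the number of channels reported by the image loader.
std::uint32_t formatForChannels(int channels) {
    switch (channels) {
        case 1: return kGL_RED;   // e.g. a grayscale metallic or roughness map
        case 2: return kGL_RG;
        case 3: return kGL_RGB;
        case 4: return kGL_RGBA;
        default: throw std::invalid_argument("unsupported channel count");
    }
}
```

One related gotcha with single-channel 8-bit textures: OpenGL's default unpack alignment is 4 bytes per row, so textures whose width isn't a multiple of 4 also need `glPixelStorei(GL_UNPACK_ALIGNMENT, 1)` before uploading.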

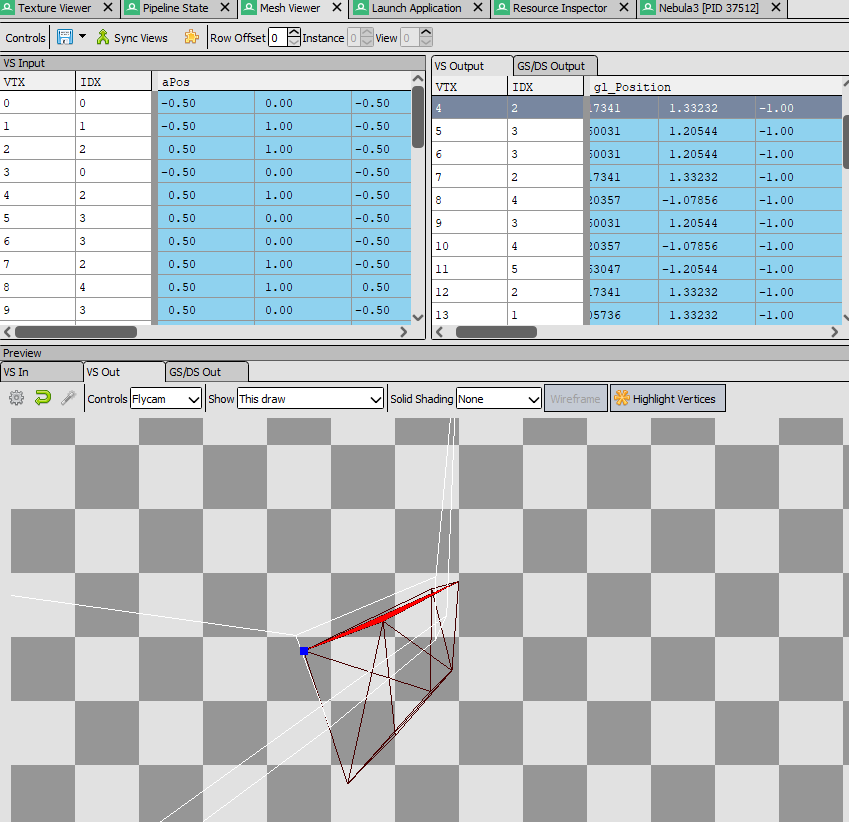

There are a few problems with this. First, let’s look at the `gl_Position` values.

`gl_Position` is a vec4, which means it has x, y, z, and w parts to it, but here the z values for all the points are -1. That doesn’t seem right.

Also notice in the window below that the cube is there, but it’s behind the camera frustum. That’s strange.

I did a whole bunch of debugging and random testing, and moving the camera around got me z values other than -1, but the mesh view still showed the cube behind the view frustum, with a really weird distortion to it.

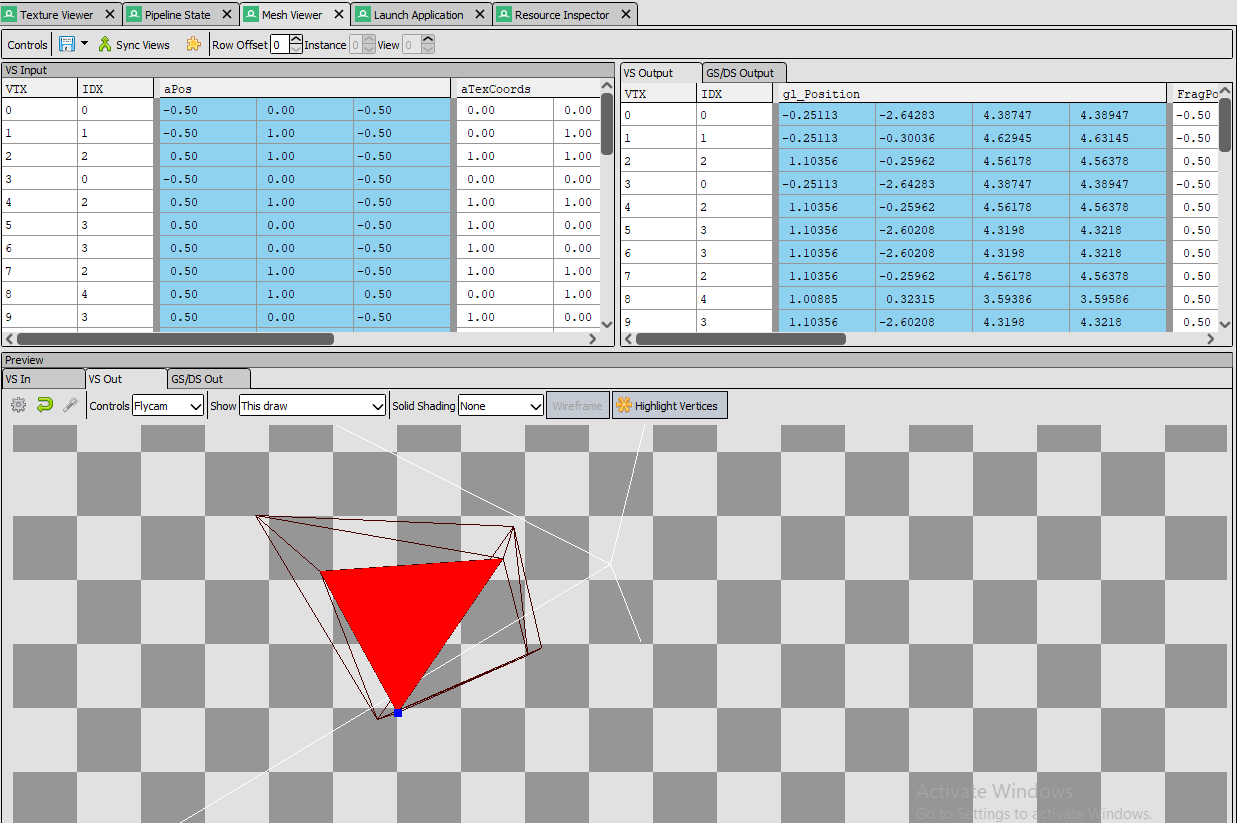

Then I checked my shader code and found this:

```glsl
//#gl_Position = projection * view * worldPos;
gl_Position = worldPos * view * projection;
```

Now if you’re not familiar with matrix math, the key thing I’ve found to remember is that you have to do the operations “backwards”: instead of taking the world position, multiplying it by the view matrix, then by the projection matrix, you take the projection matrix, multiply it by the view matrix, then by the world position. The order matters, and here I had the correct thing clearly written, then commented out with the backwards order in its place.
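To make the “order matters” part a bit more concrete, here’s a small C++ sketch using plain `std::array` matrices (no math library assumed; all names are made up for illustration). `mulMatVec` is what GLSL does for `M * v` (column vector), and `mulVecMat` is what it does for `v * M`, which GLSL also accepts and treats as a row vector, i.e. `transpose(M) * v`. That’s why the backwards line still compiled: it quietly used transposed matrices, and for a translation matrix that shoves the translation into `w` instead of `x`/`y`/`z`.

```cpp
#include <array>

using Vec4 = std::array<float, 4>;
// m[row][col]; the math matches GLSL's column-vector convention.
using Mat4 = std::array<std::array<float, 4>, 4>;

Mat4 identity() {
    Mat4 m{};
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0f;
    return m;
}

// GLSL `M * v`: v is a column vector.
Vec4 mulMatVec(const Mat4& m, const Vec4& v) {
    Vec4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j) r[i] += m[i][j] * v[j];
    return r;
}

// GLSL `v * M`: v is a row vector, equivalent to transpose(M) * v.
Vec4 mulVecMat(const Vec4& v, const Mat4& m) {
    Vec4 r{};
    for (int j = 0; j < 4; ++j)
        for (int i = 0; i < 4; ++i) r[j] += v[i] * m[i][j];
    return r;
}

// Matrix product a * b: applied to a vector, b acts first, then a.
Mat4 mulMatMat(const Mat4& a, const Mat4& b) {
    Mat4 r{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) r[i][j] += a[i][k] * b[k][j];
    return r;
}

Mat4 translation(float x, float y, float z) {
    Mat4 m = identity();
    m[0][3] = x; m[1][3] = y; m[2][3] = z;  // translation lives in the last column
    return m;
}

Mat4 scale(float s) {
    Mat4 m = identity();
    m[0][0] = m[1][1] = m[2][2] = s;
    return m;
}
```

With a translation of 5 along x applied to the point (1, 2, 3, 1), `mulMatVec` gives (6, 2, 3, 1) while `mulVecMat` gives (1, 2, 3, 6). And `scale(2) * translation(5)` lands x at 12 while `translation(5) * scale(2)` lands it at 7, which is the same reason `projection * view * worldPos` can’t be reshuffled.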

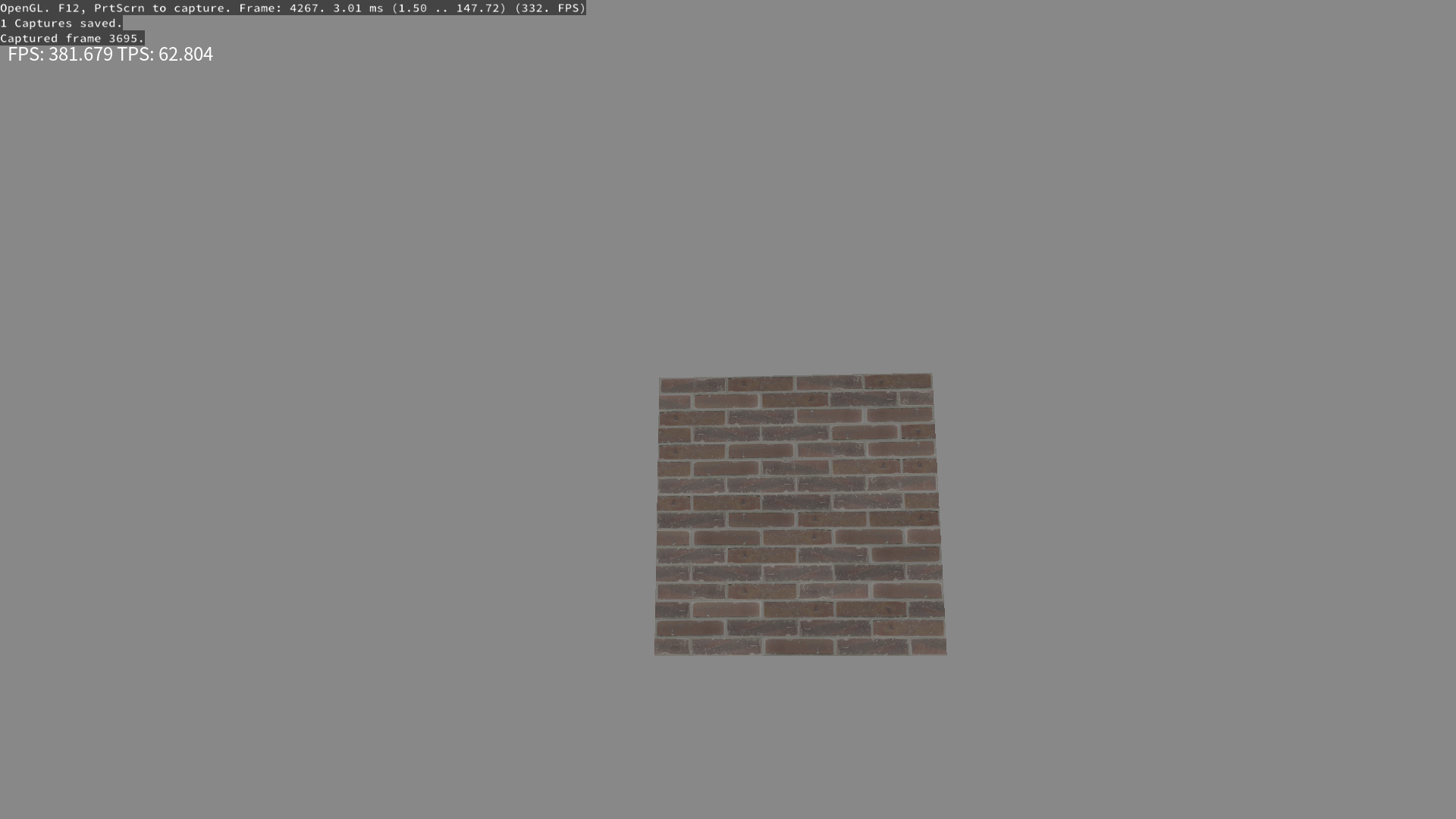

Commenting out the incorrect line and uncommenting the correct line then gets me this result in renderdoc:

And this result in engine:

I don’t have the camera movement working perfectly: the mouse looks around wayyyy too fast, and I don’t have position movement set up yet, but soon I’ll hopefully have more to show off. And maybe I can get the camera turned around to look at the cube by default instead of away from it.

-

Admittedly I don’t really have much new progress in terms of anything being completed, but I have started on a couple different items, one being build version numbers, including a version specified from Jenkins, the other being getting started on lighting.

For build versions I’m not entirely sure how I want to do it yet. I’ll probably have a prefix hardcoded in the repo (like `0.1.0.`), so that each branch can have a different version number, and then append the Jenkins job number and maybe a suffix depending on what kind of build it is (i.e. release/beta/debug/test/whatever, potentially with a different suffix for a Steam build or some special build like that).

Having a prefix hardcoded in the repo does mean that I’ll need to remember to update it every time I bump the version number, either when I branch or when I tag a release (and update trunk either way), but it is how we do it at my work currently. I also don’t want to have to pass it in from Jenkins, because I want local builds to retain that number so you can tell what branch a local build was from.

The idea is that Jenkins builds would get a version number of prefix + job number + suffix, like `1.1.0.799_beta` or something like that, and a local build would be something like prefix + build user + date + suffix, like `1.1.0.coder2021621_debug`, so you can tell what branch/version tag you’re at, as well as the build date and that it’s a debug build.
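Here’s a rough C++ sketch of that naming scheme, which is really just string concatenation. `BuildType`, the suffix strings, `jenkinsVersion`, and `localVersion` are all hypothetical names; in practice the prefix would presumably come from CMake (for example as a compile definition) and the job number from Jenkins’ `BUILD_NUMBER` environment variable, but that wiring isn’t shown here.

```cpp
#include <string>

// Hypothetical build types; the real list of suffixes hasn't been decided yet.
enum class BuildType { Release, Beta, Debug, Test };

std::string suffixFor(BuildType type) {
    switch (type) {
        case BuildType::Release: return "release";
        case BuildType::Beta:    return "beta";
        case BuildType::Debug:   return "debug";
        case BuildType::Test:    return "test";
    }
    return "unknown";
}

// Jenkins build: <prefix><job number>_<suffix>
std::string jenkinsVersion(const std::string& prefix, int jobNumber, BuildType type) {
    return prefix + std::to_string(jobNumber) + "_" + suffixFor(type);
}

// Local build: <prefix><build user><date>_<suffix>
std::string localVersion(const std::string& prefix, const std::string& user,
                         const std::string& date, BuildType type) {
    return prefix + user + date + "_" + suffixFor(type);
}
```

For example, `jenkinsVersion("1.1.0.", 799, BuildType::Beta)` produces `1.1.0.799_beta`, and `localVersion("1.1.0.", "coder", "2021621", BuildType::Debug)` produces `1.1.0.coder2021621_debug`.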

I haven’t decided on the suffixes yet, but I’ll probably do that after I get Jenkins/CMake all set up to put in the build numbers.

For the lighting work I’m planning to do a full PBR renderer, which means I’m now at the point of figuring out IBL (Image Based Lighting). I already have/had point lights implemented for my PBR renderer but no IBL yet, and apparently your lights need to have area in order to look realistic, so I’m currently doing a ton of research on how to do all of this, and I’m not even sure what info is needed for an area light, or how to store it.

LearnOpenGL has a decent tutorial and sample code for getting started with IBL, however it only covers a single global probe/HDRI environment map, which basically means you only get IBL from the skybox. To make your environment look realistic with IBL, you need to set up local probes, which of course isn’t taught in that tutorial.

The basic process for local probes is to capture from all directions at a point designated by an artist/level designer, and then blend that with other probes and the environment map. Issues with this include how often to update local probes, since dynamic objects will need them to be updated regularly, and how to actually pass the probes to the shader. I think I get the theory of how to blend the probes together a little bit: basically the local probes have an alpha that cuts off at a certain distance, so where the alpha is 1 you use the local probe, and where it’s 0 you use other probes. And then there’s some fancy business you can do with blending the probes, or you can just take the closest probe’s input.
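A minimal C++ sketch of that alpha-cutoff idea, assuming each probe has a radius plus a fade band inside it, and with a single color standing in for sampling the probe’s cubemap. This is one simple scheme (normalize the probe weights where probes fully cover a point, and hand whatever weight is left over to the global environment map), not how any particular engine does it; `LocalProbe`, `probeAlpha`, and `blendProbes` are made-up names.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

struct Vec3 { float x, y, z; };

Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(Vec3 a, float s) { return {a.x * s, a.y * s, a.z * s}; }

float distanceBetween(Vec3 a, Vec3 b) {
    float dx = a.x - b.x, dy = a.y - b.y, dz = a.z - b.z;
    return std::sqrt(dx * dx + dy * dy + dz * dz);
}

// Hypothetical local probe description.
struct LocalProbe {
    Vec3 position;
    float radius;        // alpha is 0 at and beyond this distance
    float fadeDistance;  // alpha ramps 1 -> 0 over this band inside the radius (must be > 0)
    Vec3 sample;         // stand-in for sampling the probe's captured cubemap
};

// 1 well inside the probe, 0 outside it, linear ramp in the fade band.
float probeAlpha(const LocalProbe& p, Vec3 point) {
    float t = (p.radius - distanceBetween(p.position, point)) / p.fadeDistance;
    return std::min(1.0f, std::max(0.0f, t));
}

// Blend overlapping probes; leftover weight goes to the global environment map.
Vec3 blendProbes(const std::vector<LocalProbe>& probes, Vec3 point, Vec3 environment) {
    Vec3 sum{0.0f, 0.0f, 0.0f};
    float total = 0.0f;
    for (const LocalProbe& p : probes) {
        float a = probeAlpha(p, point);
        sum = sum + p.sample * a;
        total += a;
    }
    if (total >= 1.0f) return sum * (1.0f / total);  // fully covered: normalize probe weights
    return sum + environment * (1.0f - total);       // partly covered: fill in with environment
}
```

In a real renderer this would run per pixel in the shader, with the probe data passed in as uniforms or a buffer, which is exactly the “how do I pass the probes to the shader” question.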

Once I get IBL working with probes, it’ll basically put me at early UE4 levels of lighting. I have realtime global illumination planned for later on, and I’ve been debating if it’d be better to just do that and skip out on this probe nonsense, but I am worried about how to handle lower end hardware. I believe I could pre-bake the local probes with non-dynamic objects, and it may be acceptable for lower end hardware to simply not reflect dynamic objects, or to supplement with SSR (Screen Space Reflections) somehow, but of course that would probably involve more blending madness.

Also I’m nearing what I wanted for my 0.0.1 milestone: all that remains is engine build versioning, 3D lighting, some more editor console command stuff, and scene saving/loading, which I have yet to start. Once I get all the features implemented I’ll probably try to make some sort of simple game to test it all out, since I haven’t tested half of it yet. I’m thinking a Breakout clone.

I did have a schedule planned for when I wanted the 0.0.1 milestone complete, which was like 3 months ago, but I pulled things into it from the 0.0.2 milestone (a lot of the 3D stuff got moved because it was more fun than other things), so I’m basically planning to just skip/ignore the schedule for now and go with the flow.

-

Well, the version numbering is still in progress. I decided that I’m just not going to put a date in the number, because getting a date in CMake seems painful.

I’m still trying to get Jenkins to pull the full build number out of CMake and use it to name the zip file, that way I can keep track of the artifacts better.

What I actually wanted to talk about is based on a discussion in the Discord about writing print-on-demand books/ebooks. I’ve dabbled in writing since I was a kid, but I’ve never considered myself good and I’ve always been rather engineering-brained, so I’m not going to talk about writing itself; instead I want to talk about writing toolchains, specifically the open source ones available. I’m very open to discussions about what writing/word processing tools you use, so definitely start up a convo on the Discord, it should be a great time.

Yes, I’m going to software-engineering-ify writing books by saying that you use a toolchain to write/publish them. This mostly came about after I saw that the book publishing/formatting/whatever-you-want-to-call-it software I thought would be good to use, Scribus, doesn’t support exporting ebooks. Apparently it’s great for print media: it has color/printing features, lets you define trim/whatever, and allows layout, but it doesn’t export to EPUB. It mostly seems to export to PDF, and while you can turn that into an ebook format, it isn’t pretty and probably won’t be reflowable, and if it isn’t reflowable, it won’t fit easily onto a lot of different screen sizes.

So what do you use? Just LibreOffice? I thought about that and it does seem to be an acceptable option, but I’ve already started writing my current project on my phone in Markdown. Doing some more research on the Scribus forums, I found a tool I’d heard of before but never used, called Pandoc, which is apparently like the holy grail of document format conversion. It can’t go directly to Scribus’ format, but it goes to ODT, which Scribus can import, so it’s good enough for me.

So what does this mean for me? Well, I can just keep chunking along on my project in Markdown, and when I’m done writing, I can easily chunk it into an ODT, set it up with covers/cover art, all the copyright stuff, and heavier formatting like a table of contents, and then convert that puppy to EPUB with Pandoc again, or import it into Scribus to make a print PDF. Or I could even try to set up the table of contents within the Markdown, or convert my Markdown to LaTeX and set up a TOC in there. So many options.

So how does this become a toolchain? Well, you can define the flow you want your project to take to get published: say you do most of it in Markdown, ignoring most formatting/page breaks/what have you, then you can convert it to ODT to go to Scribus for making a print book, go right to EPUB for an ebook, or stop in ODT to do more visual formatting and then go to ebook.

Then the next logical step is to have a CI/CD system in place to automatically generate your book once a week from your current draft. And don’t forget the version control.

Okay okay, maybe I’m going a bit overboard, but I did want to share Pandoc as a useful typesetting/word processing tool; this sort of thing can be useful for more than just writing fiction. School papers would probably have been way easier to focus on if I had just written them in Markdown or LaTeX first instead of having to use Word, and converted them later. And I did my resume in LaTeX, so with this I could update my resume by converting it to something else and formatting it differently, or what have you.

That’s all for this couple of weeks, hopefully next time I can have some game engine progress, maybe the version numbers will be finished and maybe I can have some IBL progress.

-

It’s been a while, lots has happened, but not a lot on my project.

I’m in the process of gearing up to do the IBL changes, but there are just a lot of pieces, so I’ve been a bit demotivated on my game engine.

However, today I added a second texture class, `TextureF`, to handle floating point textures, which will allow loading HDR textures.

The idea is that you load an HDR equirectangular environment map, and I’m working on some code to convert that to a cubemap. Once that is done, I do some processing on it and I’ll have a general environment map to do the IBL stuff with.

I’m just following the tutorial part by part for now, but eventually I’ll need to go off-tutorial and figure out how to blend that with some screen space stuff, as well as local probes. That won’t be fun.

I’m going to focus for now on putting in the equirectangular-to-cubemap code, then maybe making Lua bindings, then chunk in the IBL stuff. Once the IBL stuff for the general environment map is in, I’ll probably take a break from IBL and implement scene exporting/loading, so I can brush past local IBL probes for now and get to my 0.0.1 release without worrying about too much IBL stuff.
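The core of an equirectangular-to-cubemap conversion is mapping a 3D direction to a (u, v) coordinate in the equirectangular image: for every texel on each cube face, you take the direction through that texel and sample the HDR map there. Here’s that mapping as a C++ sketch. As far as I know it’s the same mapping as the `SampleSphericalMap` function in LearnOpenGL’s IBL chapter, but written with exact 1/(2π) and 1/π instead of the rounded 0.1591/0.3183 constants. The names are made up, and depending on how the image is loaded, v may need flipping.

```cpp
#include <algorithm>
#include <cmath>

struct Direction { double x, y, z; };
struct EquirectUV { double u, v; };

// Map a (not necessarily normalized) direction to equirectangular texture coordinates.
EquirectUV directionToEquirectUV(Direction d) {
    const double pi = 3.14159265358979323846;
    const double len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    const double x = d.x / len, y = d.y / len, z = d.z / len;
    // Longitude (angle around the vertical axis) -> u in [0, 1].
    const double u = std::atan2(z, x) / (2.0 * pi) + 0.5;
    // Latitude -> v in [0, 1]; clamp guards asin against rounding just past +/-1.
    const double v = std::asin(std::max(-1.0, std::min(1.0, y))) / pi + 0.5;
    return {u, v};
}
```

For example, (1, 0, 0) maps to the center of the image (0.5, 0.5), (0, 0, 1) maps to u = 0.75, and straight up (0, 1, 0) maps to v = 1, i.e. the top or bottom row depending on the image’s orientation.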

Gotta remind myself to take baby steps, and that I have a ton of other scope-creep-type items that will possibly be more exciting to do.

Speaking of the 0.0.1 release of my game engine, that’s supposed to be the point where I could prototype a Breakout clone using my engine.

It is supposed to have these things working:

- Keyboard, mouse, and maybe controller input

- 3D rendering

- 3D model loading

- Basic Lua Scripting

- UI buttons working

- Text rendering

Basically enough stuff to have a “game” where you have a play button, hit the play button, and then you press keys on the keyboard to move something around on screen.

Right now what is left that I have planned on my Quire board for 0.0.1 are:

- 3D lighting

- Scene saving/loading

- Version numbering

So I’m basically there, and technically, if I really wanted, I could just push off all this stuff to later and make the breakout clone now, but I really want to get the basic IBL stuff finished at least for now so that I can have it behind me.

I also need to implement scene lighting in addition to the IBL, so I need to figure out how to represent lights in the scene and then render them.

I’ve done that kind of lighting in the past, but idk how to represent the lights in the scene, and I’m hoping to support spot lights, point lights, and potentially area lights.

-

Alright, I actually have some progress to mention now! I decided that instead of trying to keep a weekly cadence, I’d do better by posting when I have things to post about. That way I can use this to help keep my momentum going, instead of treating it as a weekly todo-list task that needs to be completed; it can instead be a “Hey I did xyz and I’m excited about it” kind of thing.

So first: I split the 3D lighting bullet for my 0.0.1 release into multiple parts, since I didn’t have any 3D point lighting or anything implemented yet, and was looking into IBL.

I split the IBL out to 0.0.2, since that was the painful part, and I’m working on representing 3D lighting data in the scene via ECS, as well as filtering that data out to the render thread when needed, plus Lua bindings for adding that stuff to the scene. That all works; the only issue is, I think, in the shaders or something.
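For anyone curious what “lights in the ECS, filtered out to the render thread” can look like, here’s a stripped-down C++ sketch. The component types, `Registry`, and `gatherPointLights` are hypothetical, not the engine’s actual code: component storage is just one map per component type, and only entities with both a transform and a point light get flattened into plain structs that the render thread can use without touching the ECS.

```cpp
#include <cstdint>
#include <unordered_map>
#include <vector>

struct Vec3 { float x, y, z; };

// Hypothetical ECS components.
struct TransformComponent { Vec3 position; };
struct PointLightComponent { Vec3 color; float intensity; float radius; };

using Entity = std::uint32_t;

// Minimal stand-in for component storage: one map per component type.
struct Registry {
    std::unordered_map<Entity, TransformComponent> transforms;
    std::unordered_map<Entity, PointLightComponent> pointLights;
};

// Flattened light data for the render thread (no entity handles).
struct RenderPointLight { Vec3 position; Vec3 color; float intensity; float radius; };

// Only entities that have BOTH a transform and a point light are sent to the renderer.
std::vector<RenderPointLight> gatherPointLights(const Registry& reg) {
    std::vector<RenderPointLight> out;
    for (const auto& [entity, light] : reg.pointLights) {
        auto t = reg.transforms.find(entity);
        if (t == reg.transforms.end()) continue;  // light without a position: skip it
        out.push_back({t->second.position, light.color, light.intensity, light.radius});
    }
    return out;
}
```

Copying into plain structs like this means the render thread never reads ECS storage that the update thread might be modifying at the same time.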

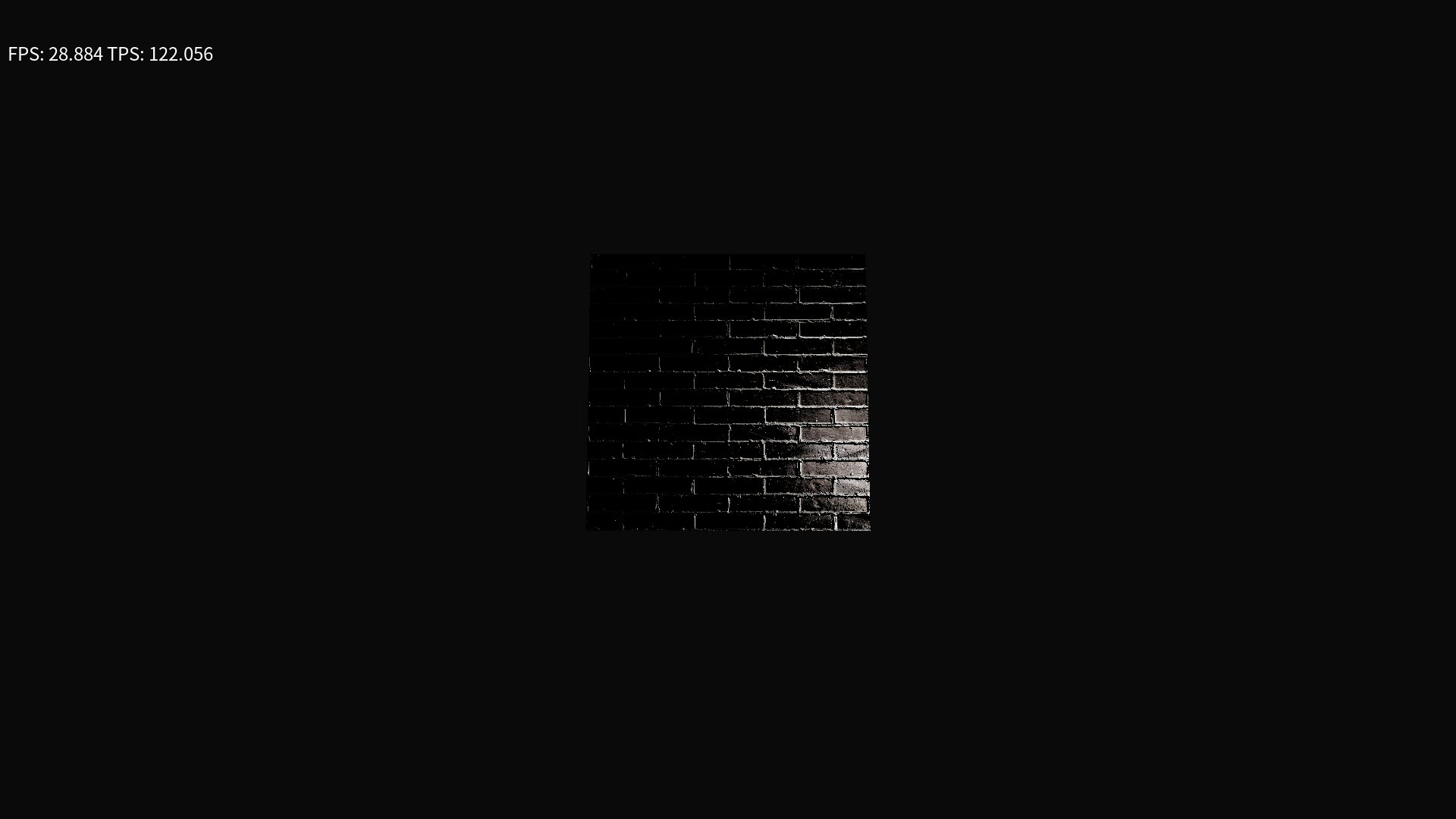

Behold what a point light looks like with the same cube I had before:

Notice anything strange going on here?

Let’s ignore the FPS: 28.884 part for now

It’s in black and white or something for some reason!

Now, I tested having my shader output just the diffuse directly, so the diffuse texture is set up properly; my guess is some issue with the metallic, roughness, or normal parts of the shader, maybe? Not entirely sure.

I will say this would be a dope shader for a horror game, so I’m not entirely out of business here, but I’m also not convinced that the normal map is the right way round either.

I need to get RenderDoc installed on my Linux machine from the AUR so I can look at the different textures, etc. and try to figure out what’s going on.

-

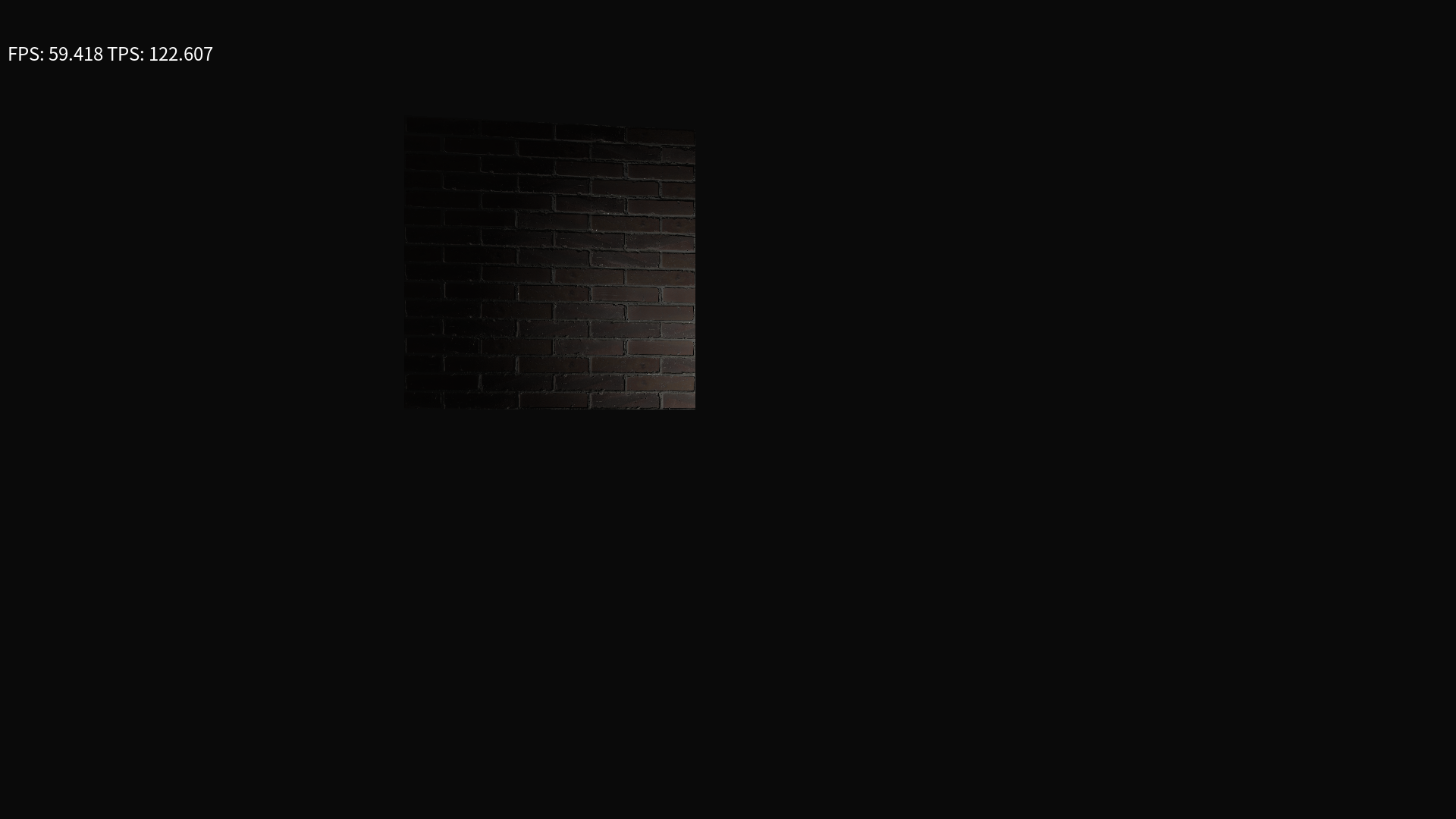

Gonna update here again since I fixed it:

So the answer to the problem was in how I was transferring data between my update and render threads. I had used these shaders before without issue, so I wasn’t sure why they weren’t working.

See, I send data between threads by loading up a `RenderState` with all the data needed to render meshes, etc. Well, I added lights to that rather sloppily and forgot to add a line to clear out the lights each frame, so the number of lights in the `RenderState` increased every frame, and apparently when you get hundreds to thousands of lights in the same spot, you get that weird thing I was seeing before.

Part of what tipped me off is that after maybe a minute or less the FPS would start dropping to nearly zero, and I figured it had to be something increasing every frame, and sure enough it was this leak causing the FPS drops as well as the rendering issue.

Problem Solved!
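The bug boils down to a per-frame container that never gets reset. Here’s a C++ sketch of the pattern with the fix in place; the `RenderState` members, `clear`, and `fillRenderState` are guesses at the shape for illustration, not the engine’s real code. Calling `clear()` on a `std::vector` leaves its capacity unchanged, so reusing the same `RenderState` every frame doesn’t cost a reallocation.

```cpp
#include <vector>

struct PointLight { float x, y, z; };
struct MeshDraw { int meshId; };

// Hypothetical per-frame snapshot handed from the update thread to the render thread.
struct RenderState {
    std::vector<MeshDraw> meshes;
    std::vector<PointLight> lights;

    // Reset everything at the start of a frame (capacity is kept).
    void clear() {
        meshes.clear();
        lights.clear();
    }
};

// Update-thread side: rebuild the snapshot from scratch each frame.
void fillRenderState(RenderState& state, const std::vector<PointLight>& sceneLights) {
    state.clear();  // the missing step: without it, lights pile up every frame
    for (const PointLight& light : sceneLights) state.lights.push_back(light);
}
```

Without the `state.clear()` line, a scene with one light would hold 100 copies of it after 100 frames, which matches both the weird shading and the steadily dropping FPS.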

Now I’m thinking I’ll put IBL off to a different release, so I split that off into a different story, along with spotlights. I’m going to leave “3D lighting” at point lights and wrap this up, so only scene saving/loading and version numbering remain. Version numbering is partly finished and just needs some Jenkins love, and then it should be done. So close to the first milestone I wanted!

-

Some progress on versioning, but I don’t think it’s totally finished yet; I still want to put the version number into the filename of the zip file that Jenkins spits out.

But on the saving/loading scenes front I’ve made a bit of progress: I chose a JSON library to save things out to JSON and started writing code.

Not sure yet if I like how the code is shaping up. Right now it makes the most sense to me to have separate code paths for each scene saving/loading format, but I’m not sure I like having the separate formats in the SceneLoader-specific code itself; maybe I should have split them off into a separate utility that handles the specifics, instead of just breaking the pieces up into different parts. So I’m sort of split on that, since I suspect I’ll need to do the same shit for serializing other things should I need to, so we’ll see how that goes.

I chose the picojson library for the JSON; it’s a single-header JSON library, so it’s easy to integrate, and the API doesn’t seem too painful to use.

Basically I just use a `picojson::value()` to refer to a value, and if I want that value to be a JSON object I just make it a `std::map<std::string, picojson::value>`, and if I want an array, it’s a `std::vector<picojson::value>`. This is nice because then I can just make `map`s and `vector`s as normal and it works. The only issue is constantly wrapping everything in `picojson::value`, and I don’t tend to do the `using` C++ thing for namespaces, so I type out every little `std::` or `picojson::`. That seems like it’ll get to be a lot of duplicate typing, since I’m basically looking at having each entity be one JSON object, with keys for each component in my ECS, and then each component is also a JSON object with keys for its values, which are sometimes also JSON objects. And there’ll be arrays thrown in there in some places too.

But yeah, so that’s my progress: started on scene saving and chose a JSON library. Now for the boredom of typing out code to save/load every single component, and then I get to do that shit twice when I add the binary save/load.

Maybe I should get an intern. Or come up with a better way to serialize this stuff.
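One common way around writing every component’s save/load code once per format is the archive pattern that serialization libraries like cereal and Boost.Serialization use: each component lists its fields once in a templated function, and each format provides an archive type that either writes or reads those fields. A minimal C++ sketch, where `WriteArchive`, `ReadArchive`, `visit`, `field`, and the `std::map` backing store are all hypothetical stand-ins; a picojson-backed or binary archive would just be another class with the same `field` method.

```cpp
#include <map>
#include <string>

// Writing side: copies each field into a key/value store.
struct WriteArchive {
    std::map<std::string, double> data;
    void field(const std::string& key, float& value) { data[key] = value; }
};

// Reading side: the same call fills the field back in (missing keys are left alone).
struct ReadArchive {
    const std::map<std::string, double>& data;
    void field(const std::string& key, float& value) {
        auto it = data.find(key);
        if (it != data.end()) value = static_cast<float>(it->second);
    }
};

// Hypothetical component: its field list is written exactly once.
struct TransformComponent {
    float x = 0.0f, y = 0.0f, z = 0.0f;

    template <class Archive>
    void visit(Archive& ar) {
        ar.field("x", x);
        ar.field("y", y);
        ar.field("z", z);
    }
};
```

Adding the binary format would then mean writing one new archive class, rather than a second copy of every component’s save/load code.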

-

Well, I guess it’s been about a week-ish since my last post, and for consistency I should probably post again.

Not much to talk about on the game engine front; I’ve been writing boring JSON saving/loading code as per usual.

But I started a new project! Hopefully it won’t turn out like the last time I mentioned starting a new project in Godot, which I then stopped working on pretty much that same week.

I’ve been playing a ton of Tales of the Neon Sea lately; I got it free on Epic and boy, is that a fun game. It’s got some very beautiful pixel art, and it’s a story-based detective/mystery-solving game with all sorts of puzzles and such. It kind of reminds me of a point and click game, but it’s also a side-scroller, and it has some really slick pixel animations.

I don’t consider myself good at pixel art, I consider myself passable at it. But combine that with being reminded that pixel art in 3d with modern effects looks super cool, and being also passable at 3d modeling, I’m now in the process of throwing pixel art into 3d scenes in Godot and hoping it sticks.

This is one of those projects where I start diving into art first instead of doing any planning or story, which means it’s bound to die within a few eons, especially since I’m currently in the process of hand-making normal maps for some of my tiles. Very tedious stuff, and I have no way of knowing if it’ll look cool or not.

On the front of getting the pixel art into 3D, I’m not entirely sure which method I want to use. Godot does support straight `Sprite3D` objects, which are basically 2D sprites rendered in 3D space; they can be animated like 2D sprites and have the option to be billboarded. However, there’s also this pretty slick free addon for Blender I found called Sprytile. Sprytile isn’t always the easiest to use or the fastest/most intuitive, but it does let you make 3D models with pixel-perfect textures, either by applying the textures onto a pre-made model or by making models with the textures, and it supports tilesets, so you can basically make a regular old tileset and then map that into 3D.

I’m hoping to combine that with hand-drawn normal and roughness maps in Godot, to make some PBR-ish pixel art so I can do some cool 3D lighting and GI effects to hide the fact that my pixel art is bad.

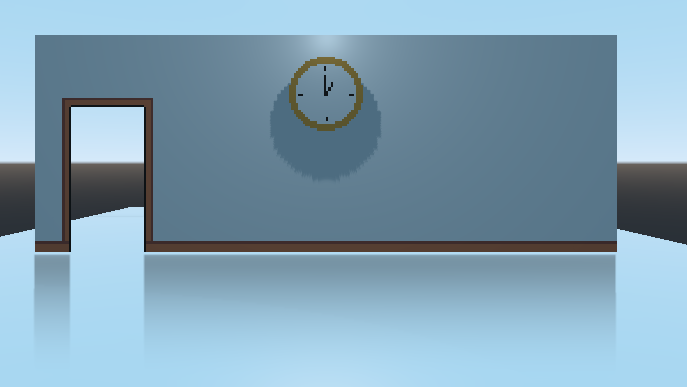

So far I haven’t done much, but here’s a quick peek at what I’ve got with a mostly default Godot scene, and a couple Pixel art assets:

Both the pixel art assets are `Sprite3D`s for now, and the floor is just a `CSGBox`, with a very reflective surface on it to show off the SSR. I’d put some reflection probes in, but I’ve found they are kind of shitty for a direct mirror finish like this and work better with more uneven surfaces.

I think the plan, if I ever make a character for this, is to make a 3D model and render it out as pixel art in Blender, but for now I’ll probably just use a box as the character and try to get some type of gameplay down. I’m hoping that more of a point-and-click type of interaction will be simpler to do, maybe with some side-scrolling segments too, but hopefully I can get some kind of gameplay that consists of interacting with items, and maybe popping up panels to interact with for, like, minigame-type puzzles.

I think once I get a basic prototype with working gameplay, it’ll be easier to continue from there and come up with art and story. That way, instead of having scope creep from writing a massive story and then burning myself out on too many potential coding features, I’ll hopefully be able to go the other way: do the coding and add the features/puzzles (or the ability to have puzzles and interactions), and then do the story writing and actual game design once I have features that sort of work. I think/hope that workflow will keep me from getting super burnt out, or at least let me call it quits sooner because I’m bored of the mechanics themselves, instead of being bored of making art.

-

Right, so I haven’t done much of anything this past week, other than a few small things on the pixel art mystery game prototype.

I added a character who collides and has the camera follow him, and I added a single interactable object that runs a custom script when interacted with.

But I also wanted to mention that I basically joined a game challenge for it: the Crunchless Challenge. Since of course, what’s better to do with your half-baked idea than to join a challenge and impose a deadline on yourself?

The idea of the challenge is that it’s not a competition with voting, ranking, or anything like that; it’s more of a personal challenge to plan out all the other things besides just chunking out the game, so by the end of it, I should have a whole game made, polished, marketed, and up for sale on a store page, without any crunching.

Posting Devlogs counts as marketing as far as the game challenge is concerned, so maybe I’ll be a regular at gruedorfing for the next few months!

Also, since it’s not a competition, there’s no hard start date; you can resurrect old projects, use code/assets/pieces you’ve made before, etc. It’s more like a prompt to get yourself going.

I think the main difficulty here is of course going to be the same as in all game jams: limiting your scope. I basically have a whole list of features I want in this game idea, and I’m going to have to cut it down to a reasonable number, and then, like, write the story. The deadline is the end of November to have it all completed, polished, marketed, and up for sale.

I think my current plan is to just have a small subset of the features/mechanics I want in for that, and then just release a single part or chapter of the longer story in November, that way I can fulfill the challenge, get a demo/story hook out, and then maybe get some testing time on the mechanics I do have before I add more.

In terms of pricing I’m thinking a pay-what-you-want model for the first chapter, or completely free, the idea being that it hooks you into wanting to buy the rest of it.

Of course I’d also need to write a hook or cliffhanger or overall story plot thing, and I need to pick a setting; I’m split between plain sci-fi or trying to do some sort of period/historical setting, since that also sounds fun. My guess is I’ll need to decide that before the challenge technically starts, which is at the beginning of November.

-

Yeah, I decided not to do the mystery/detective/story-based game for the Crunchless Challenge, because I’m not fast enough at writing to write a story between now and November and then implement it.

So instead I decided to go with a dungeon crawler roguelike idea, basically inspired by Nethack and Ultima Underworld.

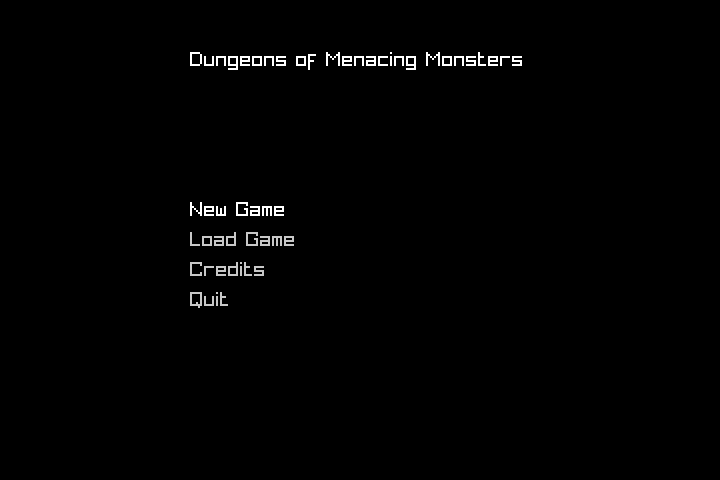

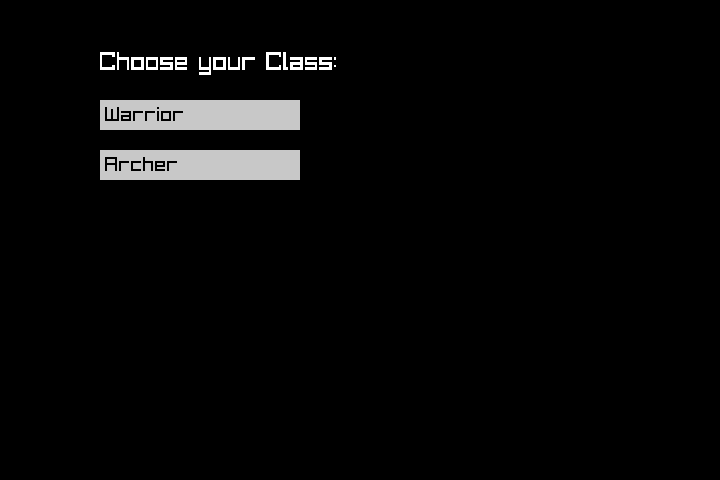

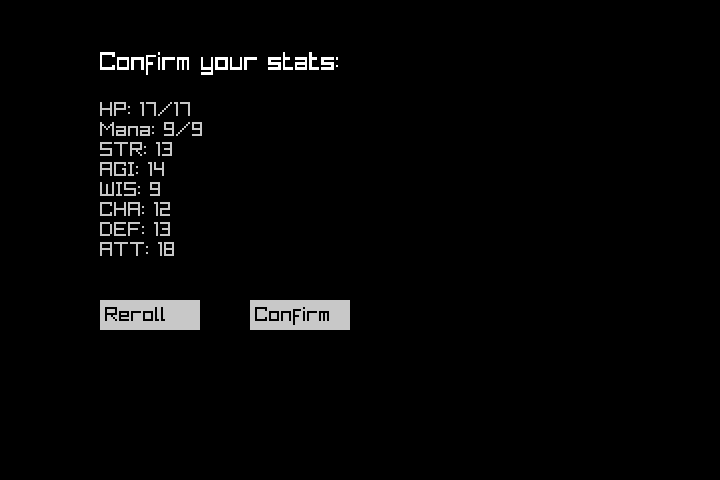

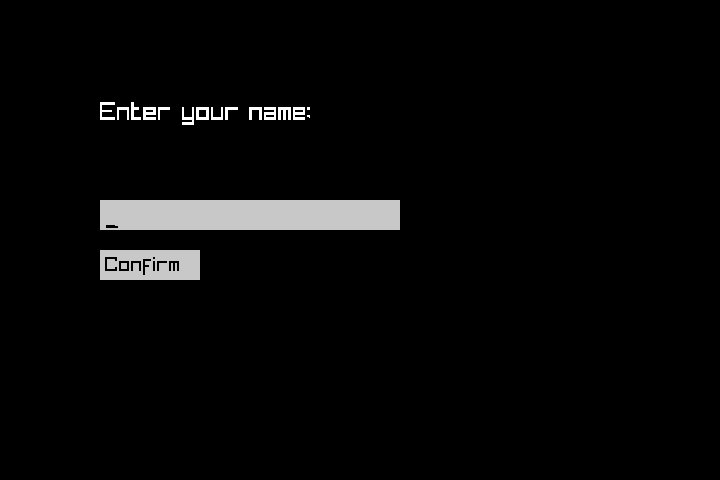

I figure if I can get world gen working, then I can just make a crap ton of tiles and basic combat and boom, there I go.

I also decided to use Raylib for this project, which I’d never used before, and I accidentally slipped yesterday and basically got a whole main menu and character creation screens done, plus some basic character stat generation working, although I haven’t figured out how the RPG combat will work yet, so I might need to rehash that at some point.

I’m working on the map gen now. Basically I plan for it to be done using Wang tiles. I would do herringbone Wang tiles, but having never done Wang tiles before, I think it’s better to start off with basic square tiles and go from there.

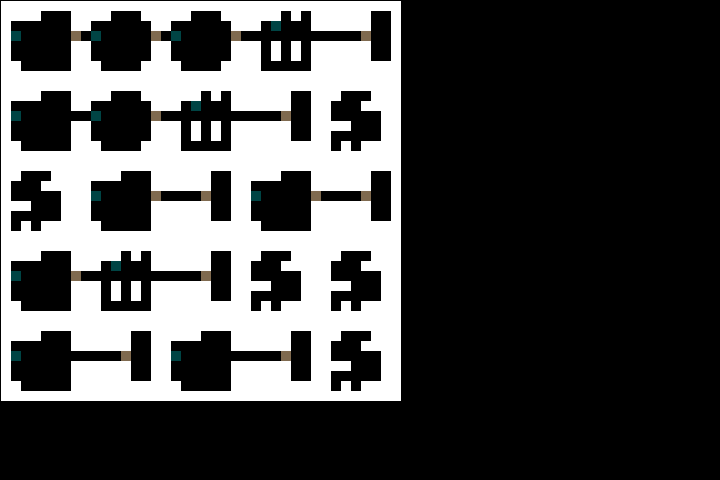

Here, have some screenshots of what I got together after work yesterday:

Maybe the only thing I need to change is that the main menu uses keyboard input only to select the options, and everything else is mouse only, so I need to figure out how to allow both keyboard and mouse for everything, but tbh maybe going mouse for most things makes more sense.

-

I managed to get a type of procedural generation implemented using Wang tiles. I’m using square Wang tiles and representing everything in a grid-based system, which lets me easily input tile data manually by filling out integer arrays. So I pre-fill a bunch of tiles, and then I have a separate array which holds the connectivity data for each tile, saying which sides are meant to connect to other tiles and where.

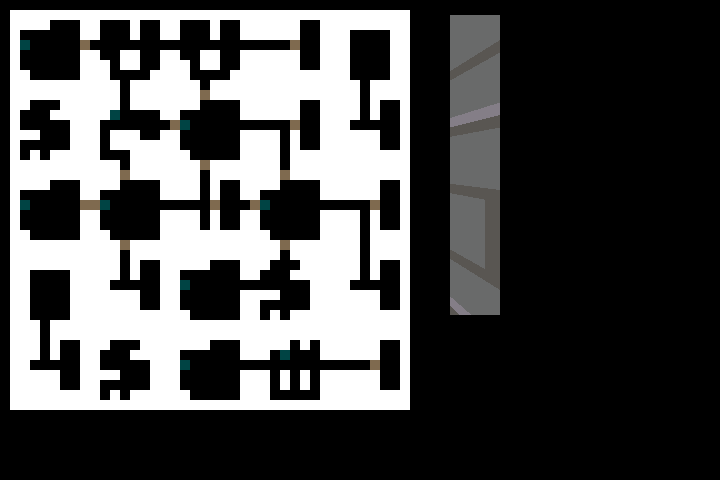

Using that data, I can generate reasonably okay looking maps. Here’s a 5x5 Wang map:

Basically I have it treat the edges of the map as having blocked connectivity, then randomly select tiles from the set of pre-made tiles that will fit in each location. This is great for randomly generating a disjointed/disconnected map, and then I can also add some tile data for enemy, NPC, item, and door placement. In the picture above you can see brown squares where the doors would be, and a little blue square where a potential stairway could be placed.
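Here’s roughly what that placement loop can look like as a C++ sketch. It simplifies the connectivity data to one open/closed flag per side, and `WangTile` and `generateWangMap` are hypothetical names: cells are filled row by row, each new tile has to match the edges of the tiles already placed to its west and north, and any side touching the map border has to be closed.

```cpp
#include <cstddef>
#include <cstdint>
#include <optional>
#include <random>
#include <vector>

// Hypothetical tile description: which sides open into the neighbouring tile.
struct WangTile {
    bool north, east, south, west;
};

// Returns one tile index per cell (row-major), or std::nullopt on a dead end.
std::optional<std::vector<int>> generateWangMap(const std::vector<WangTile>& tiles,
                                                int width, int height, std::uint32_t seed) {
    std::mt19937 rng(seed);
    std::vector<int> grid(static_cast<std::size_t>(width * height), -1);
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            std::vector<int> candidates;
            for (int i = 0; i < static_cast<int>(tiles.size()); ++i) {
                const WangTile& t = tiles[i];
                // Must match the already-placed neighbour, or be closed at the map edge.
                bool westOk = (x == 0) ? !t.west
                                       : (t.west == tiles[grid[y * width + (x - 1)]].east);
                bool northOk = (y == 0) ? !t.north
                                        : (t.north == tiles[grid[(y - 1) * width + x]].south);
                // East/south only matter at the border; inside, the next tile adapts to them.
                bool eastOk = (x == width - 1) ? !t.east : true;
                bool southOk = (y == height - 1) ? !t.south : true;
                if (westOk && northOk && eastOk && southOk) candidates.push_back(i);
            }
            if (candidates.empty()) return std::nullopt;  // no tile fits this cell
            std::uniform_int_distribution<std::size_t> pick(0, candidates.size() - 1);
            grid[y * width + x] = candidates[pick(rng)];
        }
    }
    return grid;
}
```

With a complete set of 16 tiles (every combination of open/closed sides) there’s always a fitting tile, so generation never fails; with a smaller hand-made set it can hit a dead end, which is why the function returns `std::nullopt` instead of guessing.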

I haven’t figured out how to actually place the player spawn or the stairway down yet. I want it to be like NetHack, where you basically “spawn” at a stairway that goes up to the previous floor, and then you try to find the stairway going down to get to the next level, so ideally I’ll need a way to make sure the spawn/upstair is connected to the downstair, so that it’s actually possible to make your way to the end of a level.

While I could implement some kind of wondrous pathfinding algorithm to do this, I don’t think that’s the best solution; it’s definitely not the fastest or most bug-free. Instead, since I already have it choose tiles based on the connectivity of the tiles already placed around it, maybe I could pre-define different connectivity maps for levels, so it could randomly choose a connectivity map, and then it would know that any tiles defined in that map as being connected are free real estate for a possible stairway up or down.

The minor issue with this of course is having to make sure that all the tiles are also internally connected, which does limit the ability to have interesting tiles, but I’m also thinking of letting players just kind of bash down the walls of the dungeon anyways, maybe with a pickaxe or a shovel or something. That seems like a solid way to provide a way out in case the player gets stuck and/or the map gen is half broken. The only issue is figuring out how to tell the player where to go, as well as how to keep them from escaping the map.

Okay and there’s also the issue of having to manually make multiple connectivity maps of multiple sizes, since I plan to be able to hopefully scale the floors to be bigger and bigger the farther down you get. Costs me no extra effort on my side to generate massive floors as long as it’s purely random generation with no manual connectivity maps.

Maybe instead of manually creating connectivity maps, I could just do a random walk for a bit? That would ensure some things are connected, would let me place tiles, would mean less effort than manually creating the connectivity, would allow for massive levels at no extra manual effort, and would allow for more variety in map layouts than just the same 3-5 connectivities I would make manually. Plus it could totally wrap around on itself and have intersections if needed, but I’ve never done a random walk before and idk how well that would work to create a workable map, or how many steps it would need for different sized maps.
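A rough sketch of the random walk idea, assuming the walk only needs to record which edges between grid cells get opened. `randomWalk`, `WalkResult`, and the bit flags are hypothetical; the resulting masks would become the "must be open" edges when picking tiles, and the step count is a tuning knob, not a known-good value:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

// Open-edge bit flags for one grid cell (hypothetical layout).
constexpr std::uint8_t kNorth = 1, kEast = 2, kSouth = 4, kWest = 8;

struct WalkResult {
    int width = 0, height = 0;
    std::vector<std::uint8_t> open;  // open-edge mask per cell, row-major
    std::vector<bool> visited;       // cells the walker stepped on
};

// Takes `steps` random moves from (sx, sy). Each successful move opens the edge it
// crosses on both cells; moves that would leave the map are skipped but still
// count as a step.
WalkResult randomWalk(int w, int h, int sx, int sy, int steps, std::mt19937& rng) {
    assert(w > 0 && h > 0 && sx >= 0 && sx < w && sy >= 0 && sy < h);
    WalkResult r;
    r.width = w;
    r.height = h;
    r.open.assign(static_cast<std::size_t>(w) * h, 0);
    r.visited.assign(static_cast<std::size_t>(w) * h, false);
    const int dx[4] = {0, 1, 0, -1};
    const int dy[4] = {-1, 0, 1, 0};
    const std::uint8_t leave[4] = {kNorth, kEast, kSouth, kWest};
    const std::uint8_t enter[4] = {kSouth, kWest, kNorth, kEast};
    std::uniform_int_distribution<int> dir(0, 3);
    int x = sx, y = sy;
    r.visited[y * w + x] = true;
    for (int i = 0; i < steps; ++i) {
        const int d = dir(rng);
        const int nx = x + dx[d], ny = y + dy[d];
        if (nx < 0 || nx >= w || ny < 0 || ny >= h) continue;
        r.open[y * w + x] |= leave[d];    // edge we leave through
        r.open[ny * w + nx] |= enter[d];  // matching edge we enter through
        x = nx;
        y = ny;
        r.visited[y * w + x] = true;
    }
    return r;
}
```

Since every move opens the edge on both sides, every cell with `visited` set is connected to the start, so putting both the upstairs and the downstairs on visited cells should guarantee a path, as long as the chosen tiles honor those openings.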

So that’s all sort of figured out, here’s what’s next:

- Figuring out classes

- Finalizing player stat generation for classes and generating starter inventories

- item using/management

- UI

- representing the 3d world

- combat

  - I’ve never designed a turn based RPG and been satisfied with how the numbers are all used for combat. I’ve thought of looking into how GURPS and DnD do it and then ripping them off, but I think that’s likely to run into licensing issues, and I worry it’ll be too similar to other GURPS or DnD based games. If you have any experience designing turn based RPGs and figuring out how to make numbers mean things, hit me up with ideas!

- coming up with some kind of macguffin goal to the whole thing

  - seems like players won’t want to endlessly trudge through a dungeon, so maybe having some purpose to go down there for, and then having to get back out or come back a different way, would help add depth or something. But a return trip would probably be a stretch goal unless I can find a way to make the return trip fun again.

- finishing up map gen to generate enemies and items, entry and exit points

And some stretch goals:

- Shops

  - I’m thinking possibly similar to nethack, where items are laid out on the ground, you can pick them up and examine them, and then a shopkeeper stops you if you try to leave without paying, but items can be snuck out via teleportation or other ways, or the shopkeeper can just be disposed of.

- maybe some more story or something.

- pets maybe?

  - in Nethack you start off with a pet, which is very useful because they will sometimes pick up items and drop them on their own. Combine that with what I said above about shops in nethack, and yeah, it’s very useful to try to keep your pet alive if you can. Definitely try not to accidentally kill your pet, I’ve done that before.

- Deities?

  - at this point it’s probably ripping off nethack too much and I may as well just crib nethack’s code and add 3d rendering on top of it, but hey, it’s a classic for a reason.

Tune in next time for hopefully some kind of UI and/or seeing the world in 3D!

-

And the random walk method of improving connectivity, making sure more of the map is connected to the rest, is working!

Much better connectivity and much better variety in terms of which direction the halls cross each other.

One caveat is that if no matching tile is found, the default tile with no connections is used, which might be a problem since it can block off paths that may be wanted. I’m not sure if I want to make the default Wang tile completely connected or not, but that would prevent any blockages for sure, so at least if the spawn and the stairway down are both on the random walk, they will be connected.

I also have 3d rendering working for floors/walls, but not really any of the other tile types.

The issue is that I haven’t done the player spawning yet, so you just spawn in the wall at 0,0 and can’t move out of there.

-

Bout time for another update I think.

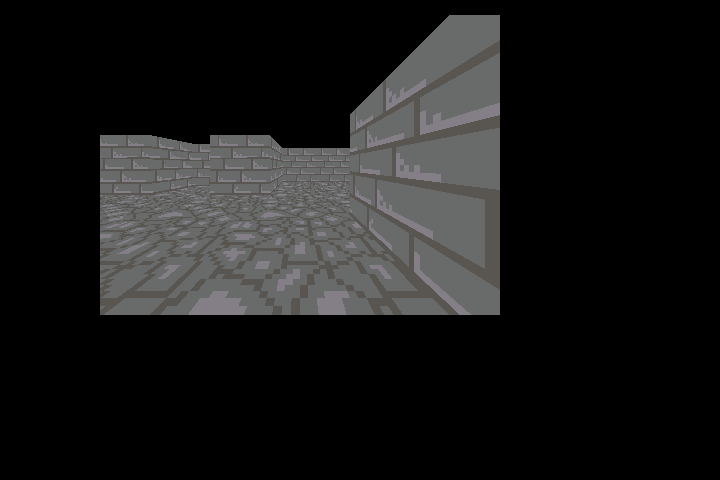

So I got the 3d movement and spawn/exit location picking working-ish just after the last post, so now you can see what it’ll look like in game:

Yes movement works.

Then I also made the map look much better too with map zoom and scrolling buttons:

The reason I say the entry/exit location picking works-ish is because I’m not really satisfied with the location selection, basically right now it just chooses the tile, then loops through the tile to select the nearest “open” square, it does this because I didn’t bother to have it track which tiles have the spawn/exit values in them, so right now it’ll end up putting the player and the exit into the middle of a hallway.
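For reference, the current "nearest open square" scan can be sketched roughly like this, assuming a tile is stored as rows of characters with `'.'` for floor and that "nearest" means nearest to some reference point such as the tile's center. `nearestOpen` and `TileRows` are hypothetical. The `open` parameter hints at the fix: if tiles marked their intended spawn/exit squares with their own character, the same scan could look for that marker instead of any floor square:

```cpp
#include <cassert>
#include <optional>
#include <string>
#include <utility>
#include <vector>

// Hypothetical tile layout: one string per row; `open` marks a usable square.
using TileRows = std::vector<std::string>;

// Scans every square of the tile and returns the one matching `open` that is
// closest (squared distance) to (px, py); ties go to the first match in row order.
std::optional<std::pair<int, int>> nearestOpen(const TileRows& tile, int px, int py,
                                               char open = '.') {
    std::optional<std::pair<int, int>> best;
    long bestDist = 0;
    for (int y = 0; y < static_cast<int>(tile.size()); ++y) {
        for (int x = 0; x < static_cast<int>(tile[y].size()); ++x) {
            if (tile[y][x] != open) continue;
            const long dx = x - px, dy = y - py;
            const long d = dx * dx + dy * dy;
            if (!best || d < bestDist) {
                best = std::make_pair(x, y);
                bestDist = d;
            }
        }
    }
    return best;
}
```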

You can see the player as the yellow circle on the above map, and the exit as the blue square.

I’ve thought about potentially having the map only track/update areas you’ve seen, but that seems like potential scope creep I don’t know that I care for, so I’ll probably look into that after I get enemies and combat working, since those are the next things required to make this actually be a game.

I think next time I’ll try to have more UI figured out, not sure I’ll do inventory, but at least the scrolling text showing what happened, or what is going on would be nice to have so I can potentially have debug printing in there instead of to console.
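A scrolling text log like that can be as simple as a bounded queue of lines. This is a minimal sketch, assuming the UI just asks for the last few lines (optionally scrolled back) each frame; `MessageLog` is a hypothetical class, not something from the project:

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <string>
#include <utility>
#include <vector>

// Bounded message log: keeps the newest `capacity` lines and drops the oldest.
class MessageLog {
public:
    explicit MessageLog(std::size_t capacity) : capacity_(capacity) { assert(capacity > 0); }

    void push(std::string line) {
        lines_.push_back(std::move(line));
        if (lines_.size() > capacity_) lines_.pop_front();
    }

    // Up to `count` lines ending `scroll` lines above the newest, oldest first,
    // i.e. top-to-bottom order for drawing in the text box.
    std::vector<std::string> visible(std::size_t count, std::size_t scroll = 0) const {
        std::vector<std::string> out;
        if (scroll >= lines_.size()) return out;
        const std::size_t end = lines_.size() - scroll;  // one past the last shown line
        const std::size_t begin = end > count ? end - count : 0;
        for (std::size_t i = begin; i < end; ++i) out.push_back(lines_[i]);
        return out;
    }

    std::size_t size() const { return lines_.size(); }

private:
    std::size_t capacity_;
    std::deque<std::string> lines_;
};
```

Debug prints could then go through `push` instead of to the console.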

I may also see if I can set up a Jenkins job to build this as well, since I already have Jenkins semi-working on my laptop. It has bad uptime, but if it can build the Linux version without problems, then I might take a break from dev on this project to work on making a Docker image to build Windows builds from Linux, which would be helpful so I can just press buttons to get both builds of this project. That matters because I plan on chunking this project out commercially before finishing up my game engine project.

-

Right, so the start of the crunchless challenge that I’m working on this roguelike gridder game for is looming ever nearer, and now the pressure of actually trying to plan/figure out if I can finish development, market, and release the game for sale by the end of November is starting to increase.

Based on the scope of what I want to include, including all of it in the initial release at the end of November doesn’t seem feasible. Also the price I found to be ideal for this type of game based on the bit of research I did for other similar games seems too high for what I think will be possible at the end of November, so I think my plan is to release in Early Access at a lower price, then release updates for a bit, bump it out of Early Access and increase the price to the price I found through market research.

So I spent a bunch of time putting some items into Quire, trying to organize based on need/want, tagging with type of task, and started organizing the initial release.

I feel like I’ve gotten a lot of stuff done with the level generation, etc., but wow is there a lot of stuff left. There are 22 tasks slated for the end of November so far, and potentially more as more items are thought of. Luckily I think some of those can be cut from the release if needed, although all of the items are things that I think would be nice to have in a paid early access.

I’m also adding in a bunch of other items to try to plan out one or two other releases after the initial release. I think if I ever want to kick this entirely out the door and get back to my game engine project, I’ll need to wrap this darn thing up within a few months so I can re-focus on my engine. If anything, cutting most of it and selling for less than the market research price I found seems like a more reasonable action than leading people along with a ton of planned features.

Of course releasing something for sale as early access implies that there will possibly be significant features added in the future and ongoing development, so I’ll probably want to define what is going to be added prior to release, for transparency to any possible purchasers of the game. If anything I’ll probably go for the under-promise and over-deliver policy, just so that people feel like they’re not being cheated out of something cool, and instead get new things added in as they are available.

Due to that policy of under promise and over deliver, I suspect not making a statement on how much the final price will be is a good idea, that way it can be adjusted based on how much content is actually chunked out. In addition, cutting the early access price to be low seems good to ensure that even if nothing else comes out after the initial release, that the cost will be worth it.

I suspect adding new content, as long as it isn’t new mechanics, should be fairly straightforward, so I think there are definitely going to be at least 1-2 more releases after the initial release just to put out more content to be distributed into the procedurally generated levels.

So on to describing the tasks and how I organize them.

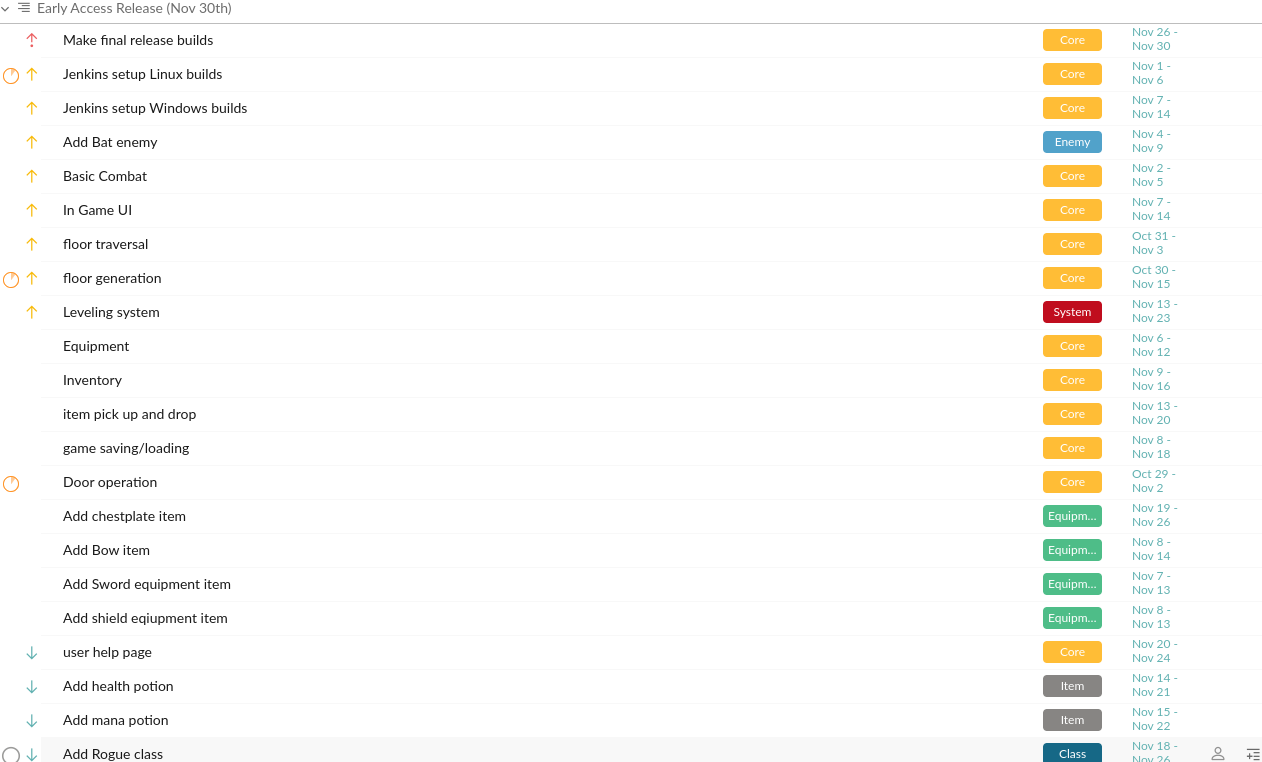

First I’ll give the disclaimer that of course none of this is committed to be in the final product yet or to ever be released; this is just a working wishlist of items that would be nice to add.

This is all of what I have planned to do between now and November 30th so far, with possibly more stuff to come (note that testing or polishing isn’t really included here; this is basically getting an MVP out the door and for sale as Early Access):

Basically I’m organizing everything with tags to say what kind of item it is; if it’s got the “Core” tag, it’s considered fairly crucial to the initial release and cannot or should not be cut.

From there I lumped it into a sublist of just things I want to do for the initial release, and then gave everything start/end dates to try to ballpark how long each item will take, etc. One issue though is I’m not properly pointing/estimating time, because there’s always going to be some variance in terms of how long things take or how often I feel like working on this project.

I also have a sublist for a proposed follow up feature release after the initial release but of course, that’s all mostly up in the air based on how long this takes and how long those features take.

Probably the item that will cause me to fail this challenge is of course, not feeling like working on it and playing on the Switch instead. I consider all of the programming work completely doable in this time frame with the caveat that it might be considered crunch to fix all the bugs, and the art is going to be rushed pixel art, so it’s not going to be anything fancy or take too much time.

-

The Challenge has started!!

I’m also devlogging this stuff over here on itch now too, mostly so that I can hide the rest of my ramblings and meanderings and failed/forgotten projects away here.

I’ll still probably update here on occasion but not necessarily once a week if I’m busy updating over there and then worrying about how I’ll get all this stuff done.

The Good news: I cranked out the door stuff and the floor changing stuff and a little scrolling text box yesterday! That knocks out like 2-3 items from that list from last update here. Also did a bit of prep for enemy generation on the levels, so that’s good but not there yet.

The Bad news: I forgot that the challenge also includes, like, marketing and branding and crap, and I haven’t added those to my Quire task list yet. So I need to figure out things like trailers, flashy screenshots, writing some sort of blurb and stuff on the itch page, etc. Or at least throw those tasks into my todo list and plan time for them.

Might also need to start tweeting again to like, promote this darn thing if I want to actually sell any of it near the end, but of course it doesn’t look pretty at all yet, and I don’t think it ever will, so maybe I need to not do that yet… Hard to say how to market something that looks like programmer art and will always look like programmer art.

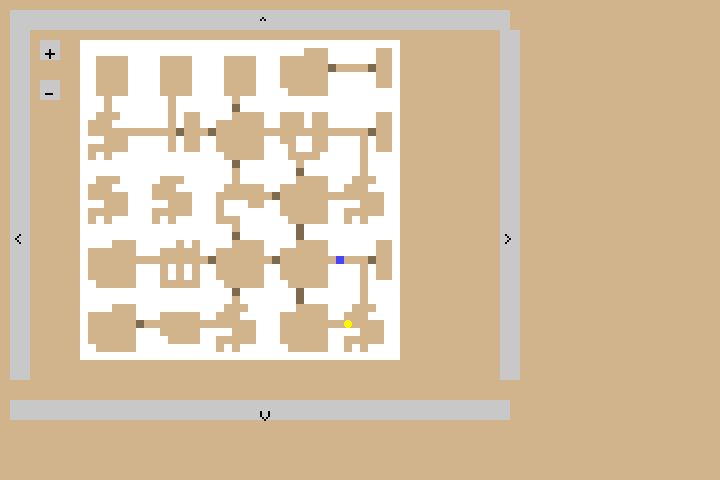

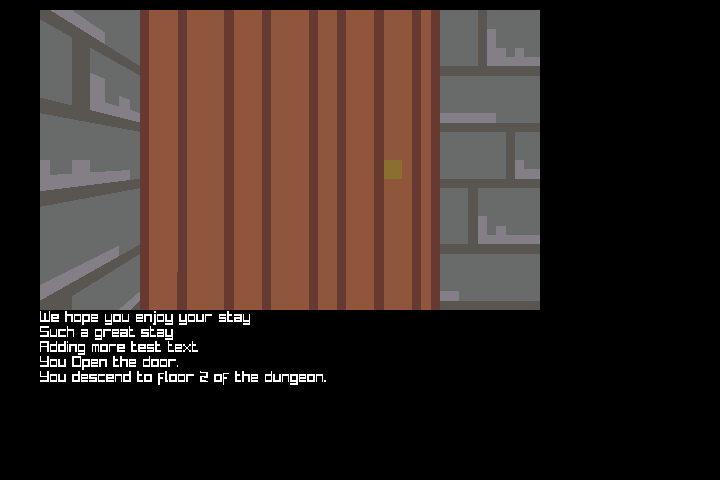

Here’s what it looks like now with a door, and some test text and some real text in the text log box thingy:

Next up is enemies + combat, as well as getting the Jenkins Linux build going.

And a Jenkins Windows build is planned as well, since I suspect I won’t get many sales without a Windows build.

Also plan to have a compass for telling you which direction you’re facing, which will work better for using the map than having to move around while on the map screen to figure out which way you’re facing. (Which is totally possible and I’m not planning on removing as a feature; it’s easier to let you move with the map up than to bother not letting you move with the map up.)

-

Challenge started 2 days ago, and I already got distracted with a different project which looks more exciting…

So my Invicta 8932 watch, which I like and just got back in March, decided to stop working, and now only works sporadically. It’s still under warranty, but apparently it’s discontinued, so we’re not sure if we’re getting a replacement or a refund, so I started looking into smartwatches.

I don’t want to drop like $300 on a smartwatch that I can’t tinker with, so I was looking into hackable/programmable smartwatches. I found Watchy, which looks promising: $50 for basically an assembled PCB, an epaper display, and a strap, but it’s out of stock. There’s also the Bangle.js, but it’s $100. It looks nicer and is waterproof (and in stock), but that’s a lot for something that runs someone else’s firmware, and I have to program in Javascript of all things.

So what’s the alternative? Why I’ll just make one, I have a degree in Computer Engineering that I use to do Software engineering, it’s about time I dove back into hardware and PCB design. Watchy is made with the ESP32, that’s a chip that supports Wifi, Bluetooth, and Bluetooth Low Energy (BLE), and I know it comes in a module that has all the RF work done for me, and is already FCC certified.

But of course all the ESP32 modules I can find are entirely out of stock… but the 48-QFN is in stock!

Except that I’m not going to be an idiot and try soldering a 48-QFN this time, last time I tried doing a 28-QFN and I couldn’t tell if I got it or not.

To give an idea of what I’m talking about, here’s the 28 pin QFN package I have that I tried soldering once upon a time. Notice that it is placed on a dime for scale, not a quarter:

I put it upside down so you can see the contacts. Those go down onto the PCB and are not visible from the outside, so you have to reflow the solder with hot air while blind and hope you get it right. Usually these types of joints will be done in a reflow oven (which I don’t have) and verified using X-ray to check connections (which I also don’t have).

Yeah, so no way I’m doing a 48 pin QFN any time soon. At least QFPs have the contacts as little legs that stick out, so you can inspect them with a microscope (which I also don’t have, yet).

Plus if I go with the 48-QFN then I have to do the RF board design, design an antenna, hope it works, and spend money on PCB fab right away without even knowing if it’ll work, so that’s not what I’m going to do for this project (this time… I have a bad habit of jumping into PCB design without having prototyped anything; PCB layout is very fun and was one of my favorite things in college, so I like to go do it if I can.)

Instead I’m looking at dev boards, because sure the ESP32 modules are all out of stock if you want just the module, but I found these ESP32 dev boards on Adafruit which look like just the right thing for testing this stuff out.

They include the ESP32 module I would hope to find back in stock eventually, plus all the necessary supporting electronics, with a handy micro USB port to program it through (and likely it already has firmware that allows in-circuit programming). There’s also a built-in LiPo/Li-Ion charger circuit, and USB to serial. Serial usually means UART; that’s how you get a console out of a microcontroller, and how you get bootloader logs out of a SoC (i.e. how you debug a phone that doesn’t boot).

So we’re going with an ESP32 module with this datasheet. Reading datasheets is a bit of a skill in itself, and it’s less stressful if I’m using a dev board, because I don’t have to try to decode what circuitry is needed to make the thing go, that’s already done, I just need to look at things like features, pinout, and then also figure out the pinout of the dev board, and from there I can choose what sorts of sensors, gadgets, doohickeys, and devices I can hook up to the thing.

First section you want to check is the overview section, usually there’s a short list of all the fancy features your microcontroller or other component has.

The big hitter features on the ESP32 are the Wifi and Bluetooth features of course, but it’s also got a 32-bit dual-core processor on it, clocking at 240MHz, which isn’t a super high speed processor, but I think it should do for a smartwatch.

And like standard microcontrollers, you can do UART, SPI, I2C, PWM, and some other more exotic features. Capacitive touch is a cool one on the ESP32, as well as IR. So that’s some cool stuff.

The datasheet is usually all about the hardware itself: it will have things like the pinout, electrical characteristics, temperature behavior, the shape and size of the part, and safe operating temperatures, voltages, and currents. That sort of thing. But it doesn’t tell you how to actually do anything with it. For that you have to find a different document, which is luckily linked in the ESP32 datasheet: the Technical Reference Manual. Sometimes what you’re looking for will also be called a programming guide, user guide, or something else to that effect. There is also a separate User Guide linked from the datasheet, but we’ll check the Technical Reference Manual first.

Typically when you’re going after programming a microcontroller, you want to find what’s called the Register Map or the Address Map. Basically you access all the peripherals through memory addresses, so you figure out which part of memory to access for what by looking for the map that tells you which part is for which things, or sometimes you just go to the section for what you’re trying to do and it’ll describe it there.
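To make the register map idea concrete, here is a minimal C++ sketch of memory-mapped register access. The offsets and bit below are made up for illustration, not real ESP32 addresses (those come from the Technical Reference Manual), and `reg`, `setBits`, `clearBits`, and `testBits` are hypothetical helpers. On a PC you can point `base` at an ordinary array to try it out; on the chip it would be the peripheral's base address:

```cpp
#include <cassert>
#include <cstdint>

// Made-up register layout for illustration only; real offsets and bits come from
// the peripheral's "Register Summary" in the Technical Reference Manual.
constexpr std::uintptr_t kCtrlOffset = 0x00;    // hypothetical control register
constexpr std::uintptr_t kStatusOffset = 0x04;  // hypothetical status register
constexpr std::uint32_t kEnableBit = 1u << 0;   // hypothetical "enable" bit

// A register is just a 32-bit word at base + offset. `volatile` tells the compiler
// every access really has to happen, so reads/writes aren't cached or optimized out.
inline volatile std::uint32_t* reg(std::uintptr_t base, std::uintptr_t offset) {
    assert(offset % 4 == 0 && "32-bit registers are word aligned");
    return reinterpret_cast<volatile std::uint32_t*>(base + offset);
}

// Read-modify-write: set the masked bits without disturbing the others.
// (Written as `*r = *r | mask` because `|=` on volatile is deprecated in C++20.)
inline void setBits(std::uintptr_t base, std::uintptr_t offset, std::uint32_t mask) {
    volatile std::uint32_t* r = reg(base, offset);
    *r = *r | mask;
}

inline void clearBits(std::uintptr_t base, std::uintptr_t offset, std::uint32_t mask) {
    volatile std::uint32_t* r = reg(base, offset);
    *r = *r & ~mask;
}

// True if all bits in `mask` are currently set.
inline bool testBits(std::uintptr_t base, std::uintptr_t offset, std::uint32_t mask) {
    return (*reg(base, offset) & mask) == mask;
}
```

Vendor libraries usually wrap exactly this kind of access, which is why the manual-reading step is often optional.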

For example, if I want to figure out how to use the SPI interface, a 4-wire method of talking to other things, I just go to the SPI section and get a whole bunch of details on timing, interrupts, etc. There’s also a handy Register Summary there, which details all the addresses of the registers needed to do SPI stuff, and what the registers do.

This is great, but also note that you may not need to do this in depth reading of the manuals, most of these boards also come with libraries and such for common development environments, so it may not be necessary depending on what board you choose and what you’re doing.

So I’ve got the microcontroller selected, and I can find the features that are available on it very easily, next up is figuring out what to put together with that to make a watch!

So there are a few things needed, like a display and some form of input, but also some other items that would be totally cool, specifically in the sensors category:

- A screen or display: need to choose touchscreen vs. non-touchscreen, and OLED vs. epaper vs. TFT vs. LCD

- Input/controls

  - Buttons

  - knobs?

- Sensors/other peripherals

  - Motion sensor, typically used for tracking steps, not sure I care about that.

  - air quality sensor, CO/CH4 sensor - Feels like a cool feature to have on your watch, being able to detect dangerous gases and such

  - temperature, humidity, air pressure sensor - not necessarily the most useful since you can already feel at least two of these things.

  - geiger counter/radiation sensor - extra cool factor

  - IR blaster - need to replace the one on my dying phone with something else I can always have on me to control the TV volume; I lose remotes too easily, or I’m too lazy to cross the room for the remote.

  - microSD card reader? - Not sure on this one yet. It seems like it would add cost, but I’m not sure there’s much point; it depends on how much storage is needed and what all the thing will be doing, and even then I’m not sure removable storage makes sense vs. just eMMC or something like that.

  - LEDs - Not really necessary, although having a bright LED for use as a flashlight could be handy, probably a battery killer though

- Battery

  - there are a ton of different kinds of battery chemistry, all with pros and cons, so I’ll likely need to research NiCd, NiMH, LiPo, and Li-Ion varieties. Adafruit has a LiPo made to go with the dev board I’m getting, the 500mAh kind, so for now I’m just going with that; the dev board already has the LiPo/Li-Ion charging and monitoring circuitry and connector. All I do know for now is that I’m going with rechargeable in the final design, and I probably need to make sure it’s not an explode-y kind. Granted, having a self-destruct feature on your wrist does sound cool, but it’s not really the kind of thing my wife would prefer, I think.

So that’s hardware, but what about software?

So I haven’t done anything with an ESP32 before, and I’m not fully certain about the dual-core nature of it and how best to program for that, so I’m likely going to be using a pre-built software package, either from some example ESP32 code, or potentially an RTOS (Real Time Operating System).

RTOSes are usually used on microcontrollers for safety- and mission-critical systems. They are preferred over a general-purpose operating system for systems like that because RTOSes are deterministic: they always schedule threads by priority, so while there is the mess of setting the thread priorities, once they’re set you’ll always know that your mission-critical threads will take priority over the non-mission-critical threads. At my old company the people doing the RTOS dev were separate from the Android and OS/BSP guys. I was one of the Android and OS/BSP guys, so I’m not familiar with RTOS development, and this will likely be a fun experiment.
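The "highest priority always wins" rule is the core of that determinism. Here is a toy sketch of how a fixed-priority scheduler picks the next task, assuming larger numbers mean higher priority (as in FreeRTOS, which ESP-IDF builds on). Real RTOSes also preempt running tasks and can time-slice between equal priorities, which this ignores. `Task` and `pickNext` are hypothetical:

```cpp
#include <cassert>
#include <string>
#include <vector>

struct Task {
    std::string name;
    int priority;  // higher number = more important (convention assumed here)
    bool ready;    // runnable right now (not blocked or sleeping)
};

// Returns the index of the task a fixed-priority scheduler would run next:
// the highest-priority ready task, with ties going to the earliest in the list.
// Returns -1 when nothing is ready (an RTOS would run its idle task here).
int pickNext(const std::vector<Task>& tasks) {
    int best = -1;
    for (int i = 0; i < static_cast<int>(tasks.size()); ++i) {
        if (!tasks[i].ready) continue;
        if (best < 0 || tasks[i].priority > tasks[best].priority) best = i;
    }
    return best;
}
```

Given the same set of ready tasks, the answer is always the same, which is the property that makes the timing of the important threads predictable.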

There is likely the question of “Why not run Linux?” as well. I am more familiar with Embedded Linux, especially in the Android ecosystem, than I am with RTOSes, but there is a slight issue here: the dual-core only clocks at 240MHz, and I don’t want to use up any more of it with a larger OS than is needed. In addition, the amount of RAM available is measured in KB, while I think even the lightest Linux builds want at least a few MB of RAM. On top of that we only get 4MB of flash memory, which is not the RAM kind of storage but the “program lives here” kind, so not only would Linux need enough RAM, it would need enough storage too. That would mean writing just a bootloader and then having it load the Linux kernel from elsewhere, and good luck getting your kernel to fit into KB of memory.

So that’s it for this long-ass adventure into hardware and making a replacement watch. Hopefully I won’t stay distracted from my roguelike for long, and I can get an order in for the dev board so I can start playing around.

If anyone has any ideas on how to find a good 1.5" to 2.5" display, let me know. I’m likely to get a display module I guess but they run like $20 for a 1.5 inch display, and come with an sdcard and other crap. I’m starting to look into AliExpress but that’ll take like a month to ship to me if not longer, granted it looks like you can get what I need for like $6

Next time I hope to be back on the roguelike, and/or have some sort of hardware to show off!

-

Well here we are, the end of November. I 1000% got distracted by other things instead of working on the roguelike for the crunchless challenge thing I was trying to do. But hey, I didn’t crunch on anything, so I succeeded on the crunchless part of it! I just didn’t do much of anything programming or game dev related.

My 3d printer came in, I got distracted, and now I have another new project: I’m making a Redox split keyboard with Kailh BOX Jade switches. The parts are all on order and arrive in January, and I’m printing the case and the keycaps myself. So far the two test keycaps I made feel okay to me, but I don’t have any other BOX switches to test fit them with, so I need to wait until January when the switches arrive to make sure the keycaps will work.

Back to the Game engine project

Yeah I went back to working on my game engine project again. Still trying to get scene saving/loading working, still using json for that, and currently bored of writing save/load logic so will likely keep saving json until either I run into it taking up too much memory/space, or it takes too long to load when needed. I think lazy route is best for getting anything actually finished/shipped.

So basically I’m stuck on writing out how to convert a bunch of different components that an entity might have to JSON, and then I eventually need to write the code to load all that back in too, which I don’t think is even started yet.

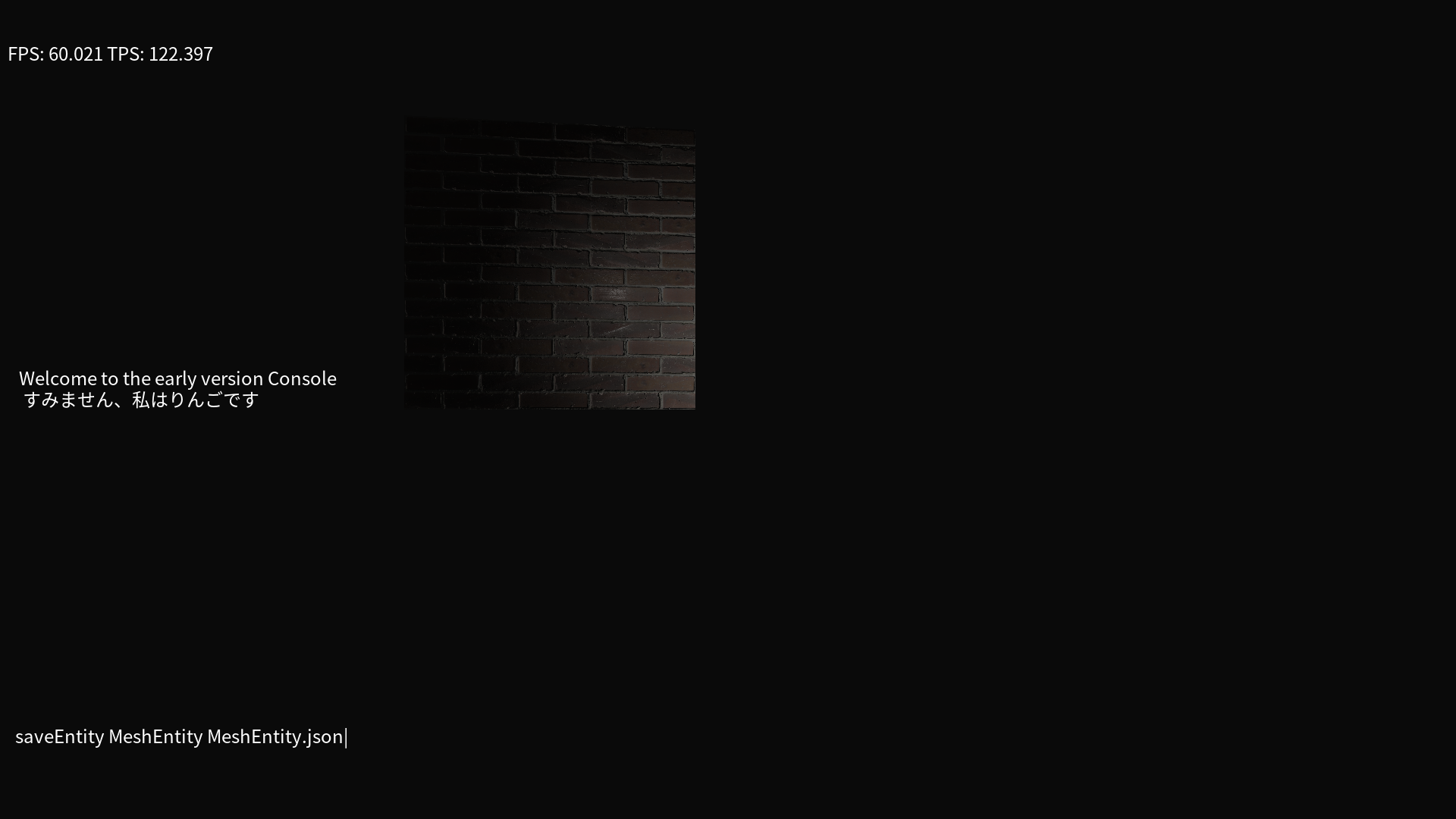

But it has been a while since I provided screenshots, and I was super close to getting json output actually, so here have some screenshots of me showing me typing a console command to save an entity, and then let me show some json that was generated from the Entity that was saved.

Screenshot first:

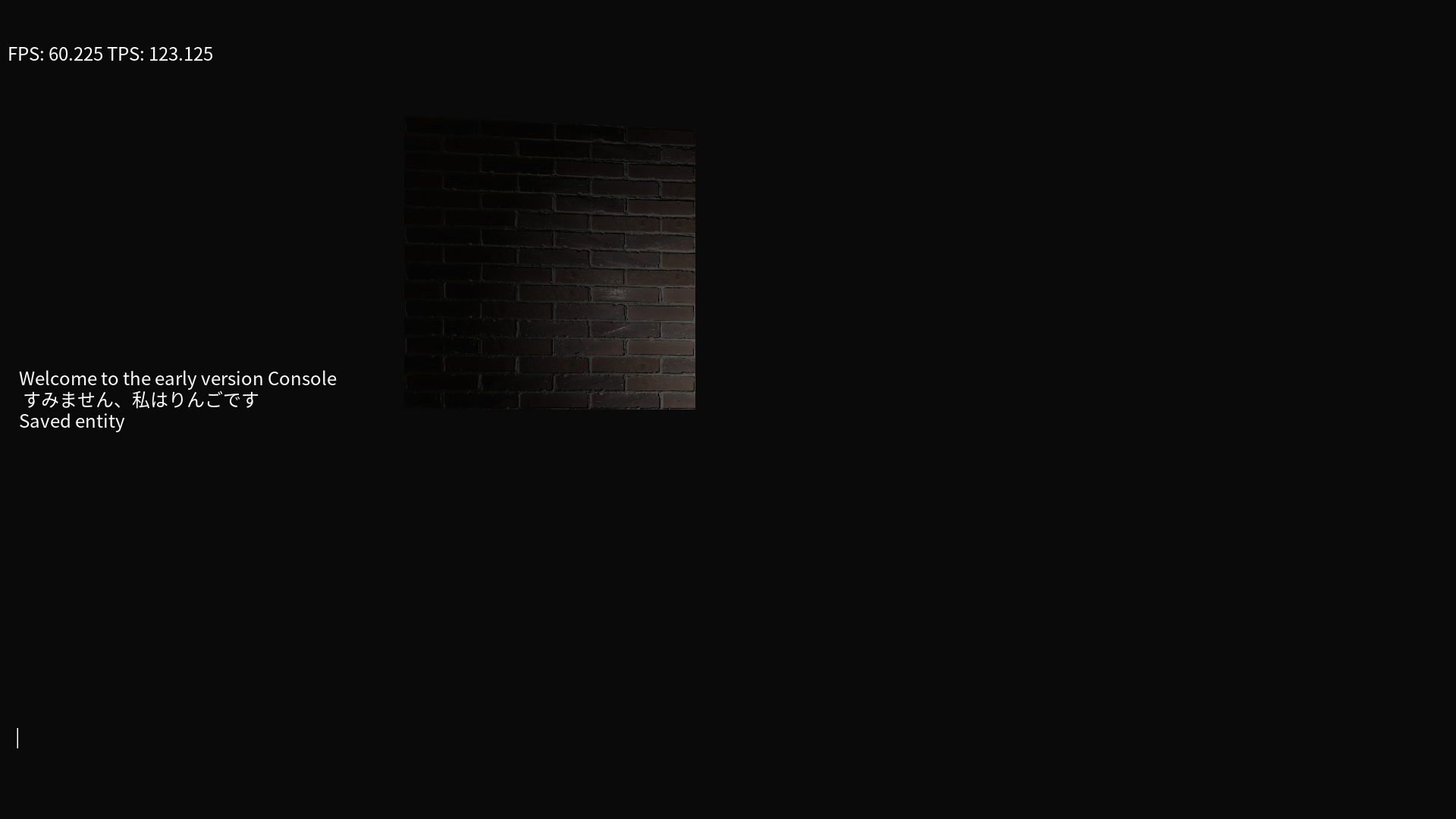

And a screenshot showing the feedback you get from running the command. I know it’s not much feedback, but it’s something:

And now check out the json generated for the cube you see in the screenshot, in a file called “MeshEntity.json”

```json
{"EcsName":{"value":"MeshEntity"},"TransformComponent":{"position":{"x":0,"y":0,"z":0},"rotation":{"w":0,"x":0,"y":0,"z":0},"scale":1},"VisibleComponent":{"opaque":true,"visible":true}}
```

I haven’t fully figured out how I want to save Mesh components yet. Part of the issue is that in this specific case I don’t have a RUID/path to a model to just store, because that mesh is generated in Lua. That means that if I wanted to store it, I would likely have to parse/store the entire freaking mesh into the JSON, including vert data, face data, texcoord data, maybe vert color data, and texture data somehow, since the texture is handled by being a material set on the mesh.

So I’m so close yet so far. I’m hoping that if I set up model loading from files, I can make it easier to save that out to JSON, and then either just won’t save programmatically generated meshes, or something. idk yet.

I also have a few other components not implemented yet, and loading hasn’t been touched at all, neither is saving the children of the entity selected to save. But still this is good progress!

-

Guess I’m not good at doing these posts weekly anymore so I’ll jump in here again even though I’m not where I wish I was.

I have entity saving working for saving out the children of entities now! fixed some bugs with getting the list of children from the Lua code!

Working on getting the loading done now, and it’s as boring/painful a slog as saving was, but worse somehow. (I didn’t write any helpers, so I’m writing crap out longform, with `picojson::value` and `std::map<std::string, picojson::value>` and then `value.get<std::map<std::string, picojson::value>>()` kinds of things each time I need to get an object out, and then more crap for getting values from the object.)

Needless to say I’m not super confident/happy with it, but hey, it’ll work once I slog it all out… Adding saving/loading for new components is going to be a major pain, I think.

I was just going to go get some screenshots of some commands and such, and found a new bug. So that’s exciting.

I added an entity `Entity01`, then added `Entity02` as a child of `Entity01`, then added `Entity03` as a child of `Entity02`, and then tried to get the children of `Entity02` and got this:

```
12-20-2021 11:43:03 | I LUASCRIPT: ayyy we got a command: 'getChildrenOf Entity02'
(null):19: error: unresolved component identifier 'Entity02'
CHILDOF | Entity02
^
Aborted (core dumped)
```

So that’s so wonderful. It’s an error from the ECS library I use, called flecs, I think, but I’m not sure if it’s a bug in how I wrote the query, or if it’s a bug in the library, or what.

Starting to think I might need to either upgrade the flecs version, switch to a different ECS library, or write my own since I keep having issues with it that are somewhat outside of my control.

Starting to think that ECSes aren’t that great if you’re only really using the E and C parts of them and not the Systems part. Having all my logic outside of the systems has been a bit of a struggle, with the library asserting on me for various reasons.

It might also not help that I’m on something like version 2.2.0, but there’s since been a 2.3.0, and now the latest is like 2.4.8 or something, so there have been a lot of patch releases since I last updated. I’d have updated already, but it’s hard to find all the changelogs/patch notes, and there are basically breaking changes with nearly every update.

So I’m actually going to go completely off on a tangent here from how I started writing this post (because I’m too lazy to delete all that I already wrote and start over). I originally meant to show off some new loading/saving crap I did. Instead I’m going to bring up alternative ECS solutions, and then I think I’ll just pick one, say I’m switching to it, and go with that.

I’m currently using Flecs, an ECS library for C and C++ that is supposedly fast and all that crap. I don’t remember how I chose it; I know my requirements for choosing one were basically easy to integrate, fast enough, and works.

Flecs is basically a C file and a header file, so it checked the easy-to-integrate box, and the documentation was fairly solid in terms of having examples on how to use it. The problem is that every time it updates it has breaking changes, and I keep running into threading issues, or issues where I add entities outside of it, and it doesn’t seem super thread safe.

I went back and looked at a different ECS library I saw before but didn’t go with, called EnTT, which is supposedly the “fastest” and is used by Minecraft. I don’t care about the fastest, but looking at it again, what caught my eye was in the integration section: it actually lets you include only the Entity and Component part, which seems to be literally the only part I need/use right now.

EnTT is also header-only (there’s even a single-header version), and all that, so now I’m of a mind to basically branch my engine, swap the ECS library to EnTT, and see how that works instead.

For obvious reasons of the fact that I’m lazy, I didn’t bother wrapping the ECS library in any way shape or form, because it’s so vital/tied in to everything in the engine I didn’t plan on ever needing to change the library.

I may yet decide I need to wrap it this time, I’m not sure. If I wrap it, that will help me switch back and forth between ECS libraries and possibly help me upgrade from one version to another, so that does seem like a good idea.

In order to pull off the switch to EnTT I will also need to modify basically the entirety of my engine. I need to change the Lua binding code to use the new methods of adding/removing/modifying entities and components, and I need to change the update thread where it pulls out the entities that get rendered to pass them to the render thread. I need to update the saving/loading stuff already, and it’s not even done yet. And maybe more. It will definitely be quite a project, so wrapping the ECS seems like a logical thing to do in case I need to do this again in the future, and I suspect it can be done without tanking performance, either via macros or via inlining things.
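One way to wrap it without tanking performance is static dispatch: engine code talks to a thin template facade, and only a backend class knows which ECS library is underneath, so every call can inline with no virtual overhead. A minimal sketch, where `World` and `ToyBackend` are hypothetical and the toy backend (built on `std::any`) only stands in for a real one that would forward to EnTT or flecs:

```cpp
#include <any>
#include <cassert>
#include <cstdint>
#include <typeindex>
#include <typeinfo>
#include <unordered_map>
#include <unordered_set>
#include <utility>

// Engine-facing facade. Engine code only ever talks to World<...>; swapping the
// ECS library means writing a new Backend, not touching every call site.
// All calls are plain templates, so they can inline away (no virtual dispatch).
template <typename Backend>
class World {
public:
    using Entity = typename Backend::Entity;

    Entity create() { return backend_.create(); }
    void destroy(Entity e) { backend_.destroy(e); }
    bool alive(Entity e) const { return backend_.alive(e); }

    template <typename C>
    void set(Entity e, C component) { backend_.template set<C>(e, std::move(component)); }

    // Returns nullptr if the entity doesn't have a C.
    template <typename C>
    C* get(Entity e) { return backend_.template get<C>(e); }

private:
    Backend backend_;
};

// Toy stand-in backend built on std::any; a real one would forward to EnTT or flecs.
class ToyBackend {
public:
    using Entity = std::uint32_t;

    Entity create() {
        alive_.insert(next_);
        return next_++;
    }

    void destroy(Entity e) {
        assert(alive(e) && "destroying a dead entity");
        alive_.erase(e);
        for (auto& pool : pools_) pool.second.erase(e);  // drop all its components
    }

    bool alive(Entity e) const { return alive_.count(e) != 0; }

    template <typename C>
    void set(Entity e, C component) {
        pools_[std::type_index(typeid(C))][e] = std::move(component);
    }

    template <typename C>
    C* get(Entity e) {
        auto pool = pools_.find(std::type_index(typeid(C)));
        if (pool == pools_.end()) return nullptr;
        auto it = pool->second.find(e);
        return it == pool->second.end() ? nullptr : std::any_cast<C>(&it->second);
    }

private:
    Entity next_ = 0;
    std::unordered_set<Entity> alive_;
    std::unordered_map<std::type_index, std::unordered_map<Entity, std::any>> pools_;
};
```

The trade-off versus an abstract base class is that the backend is chosen at compile time, which is fine here since the engine only needs one ECS at a time.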

Well that’s it for now, I guess I’ll post again in a few months when I’ve got the new ECS library in.

-

So switching over to EnTT actually wasn’t as painful as I was expecting. I’m not quite fully at the same features as it was on flecs, and the saving/loading is entirely nuked, but so far the rendering and all that crap is mostly working on EnTT, really only took a couple sessions of sitting down and replacing all the flecs includes with entt and then seeing what broke.

I did have an issue with EnTT asserting when removing an entity, but I suspect I just need to check that my threads aren’t screwing each other up and make sure all deletion happens in a “single” thread.
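Here’s a minimal sketch of the “delete in a single thread” idea, assuming (as I only suspect above) that the assert comes from destroying entities from more than one thread. Other threads only queue a request; the thread that owns the registry drains the queue once per frame. `DeferredDestroyQueue`, `request`, and `flush` are hypothetical names. With EnTT, the flush callback could be something like `[&](entt::entity e) { if (registry.valid(e)) registry.destroy(e); }`, where the `valid` check skips entities that were already destroyed or were queued twice.

```cpp
#include <cstddef>
#include <mutex>
#include <vector>

// Hypothetical helper: any thread may ask for an entity to be destroyed,
// but only the thread that owns the registry actually destroys it.
template <typename Entity>
class DeferredDestroyQueue {
public:
    // Safe to call from any thread.
    void request(Entity e) {
        std::lock_guard<std::mutex> lock(mutex_);
        pending_.push_back(e);
    }

    // Call only from the owning (update) thread, e.g. once per frame.
    // Returns how many requests were processed.
    template <typename DestroyFn>
    std::size_t flush(DestroyFn&& destroy) {
        std::vector<Entity> batch;
        {
            std::lock_guard<std::mutex> lock(mutex_);
            batch.swap(pending_);  // take the whole list, release the lock quickly
        }
        for (const Entity& e : batch) {
            destroy(e);
        }
        return batch.size();
    }

private:
    std::mutex mutex_;
    std::vector<Entity> pending_;
};
```

The lock is only held long enough to swap the vector out, so the destroy calls themselves run without blocking the threads that are queueing.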

Also a few differences I’ve noticed between EnTT and Flecs:

- There are no built-in Entity names in EnTT, meaning there’s no built-in name lookup.

- Easily fixed by keeping a map of strings to entities myself. The only issue is going the other way, from entity to name, so I might need a component too. That’s not too difficult, since that’s how it was done on Flecs: there was an EcsName component that held the name.

- There is no built-in hierarchy mechanism in EnTT.

- This means I’ll need to roll my own, but that honestly isn’t too painful. I’ve done data structures stuff before, and there are some examples of how to structure/store it.
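A minimal sketch of the names idea, assuming names should be unique: one map answers name → entity lookups, and the entity → name direction would really live in a `Name` component on the entity (like Flecs’ EcsName). Here a second map stands in for that component so the sketch runs without EnTT; with EnTT, those writes would become `emplace_or_replace` calls for the name component. `NameRegistry` and its methods are hypothetical, and `Entity` is a `std::uint32_t` stand-in for `entt::entity`.

```cpp
#include <cstdint>
#include <optional>
#include <string>
#include <unordered_map>

using Entity = std::uint32_t;  // stand-in for entt::entity

// Hypothetical two-way name table. byName_ answers name -> entity lookups;
// byEntity_ stands in for a Name component stored on the entity itself.
class NameRegistry {
public:
    // Gives e a unique name. Returns false if another entity already has it.
    bool set(Entity e, const std::string& name) {
        auto taken = byName_.find(name);
        if (taken != byName_.end()) {
            return taken->second == e;  // already ours: nothing to do
        }
        clear(e);  // drop e's old name, if any
        byName_[name] = e;
        byEntity_[e] = name;
        return true;
    }

    // Removes e's name; call this whenever e is destroyed.
    void clear(Entity e) {
        auto it = byEntity_.find(e);
        if (it == byEntity_.end()) return;
        byName_.erase(it->second);
        byEntity_.erase(it);
    }

    std::optional<Entity> find(const std::string& name) const {
        auto it = byName_.find(name);
        if (it == byName_.end()) return std::nullopt;
        return it->second;
    }

    // Returns nullptr if e has no name.
    const std::string* nameOf(Entity e) const {
        auto it = byEntity_.find(e);
        return it == byEntity_.end() ? nullptr : &it->second;
    }

private:
    std::unordered_map<std::string, Entity> byName_;
    std::unordered_map<Entity, std::string> byEntity_;
};
```

Whatever destroys an entity would also need to call `clear(e)`, otherwise the name map keeps pointing at a dead entity.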

Also, funny thing: there is an example of serializing the tree, and it uses a library I hadn’t heard of before called Cereal, which by the looks of it makes serializing stuff to various formats fairly trivial. So I’m likely going to redo all the saving/loading stuff with that, which is great because the save/load isn’t working too well anyway.

Next steps are:

- Figure out the remaining issues with entity names and how to store them (likely by adding a component for it, which I was already going to do with Flecs before I found they had their own, so now I just need to add mine back and use it).

- Figure out how to do a hierarchy, or if I don’t need to do that at all

- I likely still want to do it, since it makes adding/deleting large chunks of the scene much easier. For example, if I want to load in a room and then delete that room, I can just load in the root and automatically load the children, and then just delete the root (from Lua; the tree traversal itself I’d have to handle in C++) and the rest gets deleted too.

- Add in the saving/loading stuff with Cereal. There are actually a bunch of examples of how to save snapshots of the entity component system with Cereal, but I’m not sure if I’ll want to do that or stick with my previous layout. My previous plan was basically that you would call save on the root node in Lua, and the C++ binding for that would also save all the children of that entity. Your workflow would then consist of having a root node for each major thing you want to logically break the scene into, which would let you save/remove/load that whole chunk at once.
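A minimal sketch of the roll-my-own hierarchy, assuming a simple layout where each node stores its parent and a list of children (EnTT doesn’t prescribe one; a first-child/next-sibling linked layout is another common choice). `Hierarchy`, `Relationship`, `attach`, `subtree`, and `destroySubtree` are hypothetical names, `Entity` is a `std::uint32_t` stand-in for `entt::entity`, and nothing here guards against cycles. `subtree` returns the root first and then its descendants, so parents always come before children, which is the order a save routine would want; `destroySubtree` walks that list backwards so children go first.

```cpp
#include <algorithm>
#include <cstdint>
#include <optional>
#include <unordered_map>
#include <vector>

using Entity = std::uint32_t;  // stand-in for entt::entity

// Hypothetical relationship data; with EnTT this would be a component.
struct Relationship {
    std::optional<Entity> parent;  // empty for root nodes
    std::vector<Entity> children;
};

class Hierarchy {
public:
    void attach(Entity parent, Entity child) {
        detach(child);  // an entity has at most one parent
        nodes_[child].parent = parent;
        nodes_[parent].children.push_back(child);
    }

    void detach(Entity child) {
        auto it = nodes_.find(child);
        if (it == nodes_.end() || !it->second.parent) return;
        auto& siblings = nodes_.at(*it->second.parent).children;
        siblings.erase(std::remove(siblings.begin(), siblings.end(), child),
                       siblings.end());
        it->second.parent.reset();
    }

    // Pre-order walk: root first, every parent before its children.
    std::vector<Entity> subtree(Entity root) const {
        std::vector<Entity> out;
        std::vector<Entity> stack{root};
        while (!stack.empty()) {
            Entity e = stack.back();
            stack.pop_back();
            out.push_back(e);
            auto it = nodes_.find(e);
            if (it == nodes_.end()) continue;
            const auto& kids = it->second.children;
            // push in reverse so the first child is visited first
            for (auto k = kids.rbegin(); k != kids.rend(); ++k) stack.push_back(*k);
        }
        return out;
    }

    // Destroys root and all descendants, children before parents.
    template <typename DestroyFn>
    void destroySubtree(Entity root, DestroyFn&& destroy) {
        detach(root);
        std::vector<Entity> order = subtree(root);
        for (auto it = order.rbegin(); it != order.rend(); ++it) {
            destroy(*it);
            nodes_.erase(*it);
        }
    }

private:
    std::unordered_map<Entity, Relationship> nodes_;
};
```

From Lua, deleting a room would then be a single call on the room’s root, with the C++ binding passing a lambda that calls `registry.destroy(e)` as the callback.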

I’m not concerned about the extra effort of handling the tree traversal myself. I can do that in C++, and I expect all logic to be done in Lua, so from the standpoint of using my engine it will be just as usable as if the ECS library handled it; in this case I just get to define the hierarchy tree myself, which could have advantages as well.

I also found that getting/modifying components for an entity works a bit differently than I expected, or than it did on Flecs. I expected to be able to get a reference to the component and modify that, but the way I was doing it, at least, did not modify the values on the entity, so I had to use a separate method to “patch” the component.
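I didn’t dig into why my first approach didn’t stick, so this is only a guess at one common cause: binding the result to `auto` instead of `auto&`. In EnTT, `registry.get<T>(e)` returns a reference to the stored component, so `auto& t = registry.get<Transform>(e);` edits it in place while `auto t = ...` edits a copy. `registry.patch<T>(e, fn)` is the other route, and it also fires the `on_update` signal for that component type. The snippet below uses a plain `std::unordered_map` as stand-in storage so it runs without EnTT; `Transform`, `transforms`, `getTransform`, `moveByCopy`, and `moveByReference` are hypothetical names, and the copy-vs-reference behavior is the same C++ rule either way.

```cpp
#include <cstdint>
#include <unordered_map>

using Entity = std::uint32_t;  // stand-in for entt::entity

struct Transform {
    float x = 0.0f;
    float y = 0.0f;
};

// Stand-in for component storage; like registry.get<T>(e), this returns a reference.
std::unordered_map<Entity, Transform> transforms;

Transform& getTransform(Entity e) { return transforms.at(e); }

void moveByCopy(Entity e) {
    auto t = getTransform(e);   // `auto` deduces Transform: this is a copy
    t.x += 1.0f;                // only the local copy changes
}

void moveByReference(Entity e) {
    auto& t = getTransform(e);  // `auto&` binds to the stored component
    t.x += 1.0f;                // the stored value changes
}
```

With EnTT the by-reference version would look like `registry.get<Transform>(e).x += 1.0f;`, and the patch version like `registry.patch<Transform>(e, [](auto& t) { t.x += 1.0f; });`, which is worth preferring if anything listens for component updates.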
